Research Article: 2022 Vol: 25 Issue: 3S

Challenges for the use of key performance indicators for data driven decision-making in higher education institutions in Saudi Arabia

Tayfour Abdalla Mohammed, Jubail University College

Citation Information: Mohammed, T.A. (2022). Challenges for the use of key performance indicators for data driven decision-making in higher education institutions in Saudi Arabia. Journal of Management Information and Decision Sciences, 25(S3), 1-13.

Abstract

Higher education institutions (HEIs) continuously collect and compile performance data; however, the efficacy of such data in informing data-driven decision making (DDDM) processes is questioned by both academics and administrators. This paper seeks to explore the perceived challenges facing HEIs in the Kingdom of Saudi Arabia in using Key Performance Indicators (KPIs) data as a decision-making support tool. An online survey was administered to collect data from academic and administrative leaders in a public Saudi university. The findings revealed various perceptions of the key challenges facing the university in effectively using KPIs data to make data-driven decisions, and showed no significant difference in these perceptions across university functions and roles. Future research may investigate the value of investment in KPIs data infrastructure, with more focus on comparative analysis.

Keywords

Higher education; KPI; Data-driven decision making; Challenges.

Introduction

Higher education institutions (HEIs) are working under considerable pressure to collect, analyze, report, and display performance metrics and data to meet the demands of government, policy makers, accreditation agencies, and the public at large for effective governance, transparency, accountability and continuous improvement in education system outcomes, as well as to inform decision-making (Bouwma-Gearhart & Collins, 2015). Consequently, investment in information systems that collect, analyze, and distribute data is expected to provide ample opportunity for improving the effectiveness of decision making regarding institutional functions and structures, as well as for meeting accreditation and quality assurance requirements. Yet many academics and administrators in HEIs have questioned the value and quality of the data generated relative to the time, effort, and enormous technology infrastructure invested, and they even tend to resist the implementation of data analysis tools (Santoso & Yulia, 2017; Brown, 2020). Higher education (HE) quality assurance commissions in the Kingdom of Saudi Arabia (KSA), such as the Education & Training Evaluation Commission (ETEC) and the National Center for Academic Accreditation and Evaluation (NCAAA), issue regularly updated lists of key performance indicators (KPIs) representing the minimum data that HEIs in the KSA are required to acquire, process, analyze and use to obtain or maintain their institutional or programmatic accreditation. These commissions suggest that such performance indicators are important tools for assessing the quality of educational institutions and monitoring their performance, as they contribute to continuous development processes and decision-making support (ETEC, 2020; NCAAA, 2020).

With the recent move towards the liberalization of the HE sector in the KSA, and the expected adoption of performance-based funding for colleges and universities, HEIs in the country are encouraged to show more commitment to transparency and accountability, and to provide evidence of performance measurement in key result areas that create value for key stakeholders. The most notable requirements in this regard are the institutional and programmatic KPIs mandated by national accreditation agencies, which are expected to transform HEIs from “the culture of anecdote to the culture of evidence” (Bouwma-Gearhart & Collins, 2015). However, according to Kairuz et al. (2016), performance management and measures, including KPIs, add to the complex demands of academic work despite a lack of evidence that they are appropriate in the HE sector.

There is ample evidence in the literature that HEIs increasingly measure their performance using KPIs (Thornton et al., 2020). Despite the plethora of research on KPIs in Saudi higher education, the majority of this research has focused on proposing sets of KPIs to assist HEIs in measuring and monitoring performance (Abdelsalam, 2018; Badawy et al., 2018; Ismail & Al-Thaoiehie, 2015; Mahmoud et al., 2019; Mati, 2018). However, there is a dearth of research on the utilization of KPIs data in informing the decision-making process of HE leaders, and on how senior academicians and administrators perceive the challenges associated with the implementation of data-driven decision making (DDDM) in Saudi HE. The purpose of this paper is to question the efficacy and effectiveness of the KPIs data generated by HEIs and to explore the perceived challenges facing HEIs in the KSA in using KPIs data as a decision-making support tool. The importance of this research lies in highlighting, for educators, managers and policymakers, possible ways of adding value to existing KPIs data to enable informed decision-making processes.

In the next section, the literature on KPIs and DDDM in higher education is reviewed. The third section describes the research and data collection methods, while section four provides an in-depth data analysis and results. The last section discusses the results and draws conclusions as well as implications for future research.

Literature Review

The interdisciplinary analysis of HE research literature is interesting and challenging, as pointed out by Varouchas et al. (2018), particularly when read against the two competing views of quality: the metrics measurement view and the continuous improvement process view. Echoing that notion, we see an analogous tension between the literature focusing on the listing and collection of KPIs metrics for compliance, and the literature on the use of KPIs metrics to make informed decisions for future improvement.

As part of this research, the author conducted a critical review of existing literature focusing on collection and use of KPIs data in HE, and the various challenges encountered in this process. The literature review provided the basis for developing the major themes of the research questionnaire used for data collection.

KPIs and Performance Measurement in Higher Education

The term KPIs refers to a set of standard performance measures for the operational and strategic areas of institutional performance that are most critical for institutional success (Ogbeifunn et al., 2016). KPIs are used to measure institutional performance in relation to strategic and operational goals and are usually quantifiable measures that reflect factors critical to the success of any HEI (Kairuz et al., 2016). KPIs are also used as a measure of institutional effectiveness in attaining key objectives (Badawy et al., 2018), and to provide appropriate comparisons of current performance with historical trends to measure the growth and competitive position of HEIs (Suryadi, 2007). Moreover, Escobar and Toledo (2018) and Mati (2018) argued that KPIs provide solid ground for comparing institutional performance when grouped under input, process and output indicators. The common practice in the HE sector of the KSA is that universities and institutions of HE adopt the strategic and operational KPIs determined by the national accreditation agencies, with a few additional KPIs set by the top management of the universities.

Kairuz et al. (2016), in their study of Australian HE, suggested that the increasing trends towards managerialism and new public management have created a stressful HE system “obsessed with surveillance” that demands that academics meet certain targets and KPIs. They argued that KPIs create unnecessary stress and pressure for academics and are not relevant for use in the higher education sector. This argument challenges the prescriptions of several HE accreditation agencies, which require HEIs to compile KPIs data for compliance, governance and transparency. As summarized by Mati (2018), compliance with the national and international standards of accreditation agencies has mandated HEIs to develop performance measurement systems for monitoring and controlling performance, using a predefined set of KPIs for the assessment and evaluation of their academic and administrative activities. In an exploratory study of Canadian universities, Chan (2015) argued that KPIs are intended to be used as a basis for informed decision-making by key university stakeholders; however, the evidence suggests that KPIs data is neither an effective decision-making support tool nor an appropriate measure of performance and accountability at the university level. Ogbeifunn et al. (2016) described KPIs as “a suitable platform for effective benchmarking” when used in the context of institutions with identical objectives, such as HE. For Mati (2018), HEIs use indicators to monitor and control the performance of their activities and processes, and he suggested that these institutions use a set of metrics which can be classified into three categories: indicators, which are simple quantitative or qualitative means of measuring achievement; performance indicators, which measure performance against specific strategic goals; and key performance indicators, which are used to measure core activities and processes. Using a multiple case study research design, Gordon et al. (2017) concluded that KPIs data is best used to assist HEIs in data-driven continuous improvement towards achieving the institutional strategic mission and goals. In the words of Schalekamp et al. (2015), the value of data analytics lies in enabling objective, evidence-based decision making and avoiding subjective, biased judgments, not only at the strategic level but also in operational functions and processes, where KPIs have enabled HEIs to collect and record data indicators on students, faculty, research, community services, finance and personnel. The advances brought by the implementation of data analytics in academia have enabled HEIs to process and save such data in a real-time database for decision-making purposes.

Data-Driven Decision Making in HE

The concept of “data-driven decision making” (DDDM) is defined by Picciano (2012) as “the use of data analysis to inform courses of action involving policy and procedures…[and]…the development of reliable and timely information resources to collect, sort, and analyze the data used in the decision making process”. It is “a process of consideration of data towards informed decision-making, resulting in action by stakeholders” (Bouwma-Gearhart & Collins, 2015). DDDM, or “educated decision-making”, proliferated during the 1980s and 1990s and is currently progressing into a more software-enabled concept known as big data analytics. The concept is new and at the penetration stage in HE, and will take a few years to reach maturity (Picciano, 2012; Attaran et al., 2018).

Data analytics tools are broadly classified under three categories of techniques: descriptive analytics, predictive analytics and prescriptive analytics. These categories represent a trajectory of evolutionary development, building on each other (from descriptive to predictive, then to prescriptive) to facilitate the DDDM process (Attaran et al., 2018; Camm et al., 2019).

The evolution of analytics is attributed to advances in software, hardware, networking and other enabling technologies and infrastructures, which allow quicker and more intelligent decisions. Descriptive analytics focuses on historical data to show trends and patterns for performance monitoring using simple reports and static data visualization (Camm et al., 2019). KPIs can be classified as part of descriptive analytics, which provides minimal support for decision-makers by enabling performance measurement, improvement and comparisons of programs, departments, and institutions. Effective utilization of educational data can be attained by applying predictive and prescriptive analytics techniques, which reduce data and numbers into meaningful information for insightful decision-making in a fast and efficient way (Mahroeian et al., 2017). Santoso & Yulia (2017) suggested that strategic and analytics dashboards will allow universities to track KPIs and to show trends.

A survey conducted by Intel Corporation suggested that by the end of 2020, around 40% of new investment in data analytics would be directed to predictive and prescriptive analytics (Attaran et al., 2018).

Belhadj (2019) argued that HEIs have been collecting and tracking a tremendous amount of data, and that this data has grown significantly over time to become big data. However, the author stressed that such data, including performance data, requires significant effort to turn it into a useful and meaningful driver of decision-making processes. Lepenioti et al. (2020) share the same view, stressing that descriptive analytics, which uses historical data and statistics such as KPIs data, can only help in reactive actions and decisions; given the increasing competitiveness of the higher education sector, such institutions need to be proactive. Prescriptive and predictive data analytics are efficient tools for proactive decision support through the automation of the decision process and the provision of recommendations to decision makers (Lepenioti et al., 2020). For Shawahna (2020), KPIs are readily used by policymakers to make informed decisions related to resource allocation, quality improvement, and accountability.

The HE sector relies heavily on institutional and academic performance data for making critical and strategic decisions, and the solution for improving the efficiency of DDDM, in Belhadj’s (2019) perspective, is to apply business intelligence (BI) in higher education. Managers in the higher education sector rely on KPIs data to compare current performance against target levels; however, according to Belhadj (2019), HEIs need to go beyond the comparison between historic and expected targets and engage in deep educational data analytics to enable DDDM if they wish to enhance strategic and operational activities. Moreover, data analytics in HE, including learning and academic analytics as well as educational data mining tools, may prove to be very useful in leveraging the decision-making power of HE systems (Nguyen et al., 2020; Mahroeian et al., 2017). For Nguyen et al. (2020), data analytics addresses the challenges associated with existing raw KPIs data by making information available at the right time to support institutional decision-making. They suggested that universities have collected an immense amount of data over the years, and that educational data management systems should be upgraded to move from data collection to data mining. In a study on predicting students’ academic performance using big data, Waheed et al. (2020) stated that data analytics tools have demonstrated their efficacy in devising DDDM policies and in enabling higher education to achieve effective decision making.
Furthermore, prior studies suggested that data analytics techniques have been applied in higher education to make more efficient, accurate and informed decisions in academic advising (Gutierrez et al., 2020); identifying and helping at-risk students, predicting student success or failure, student retention and graduation rates, and timely intervention for students with a high chance of dropping out (Attaran et al., 2018); and developing effective teaching techniques, analyzing standard assessment techniques and evaluating curricula (Picciano, 2012). The Covid-19 pandemic, and the shift it spurred in HE instruction towards fully online or blended learning, provided greater impetus for Saudi higher education to consider implementing big data analytics to support decision making in this dynamic and evolving sector in the country (Alsmadi et al., 2021).

Daniel (2015) suggested that HEIs should anticipate different challenges associated with the implementation and use of academic and data analytics. The following section will discuss the various challenges for the implementation of DDDM in HEIs.

Challenges for Data-Driven Decision-Making in HEIs

Belhadj (2019) proposed a conceptual framework for educational data-driven decisions and business intelligence in higher education. However, according to Belhadj (2019), the implementation of data analytics and the DDDM process in HE encounters a number of challenges, including: cost affordability; the potential inaccuracy of the data; faculty and staff resistance to new technology adoption; poor computer skills, competence and confidence; lack of data awareness and culture; lack of data access and clean data; the feeling among many faculty and staff that learning how to access and interpret the data is too difficult or time-consuming; and the legal and ethical implications of collecting, storing, and using the data. Moreover, it has been suggested that the use of data-informed decision making in HE is no longer a technological or data challenge but an organizational one (Schalekamp et al., 2015). According to the authors, the symptoms of this organizational challenge are manifested, for example, in too little stakeholder engagement, unclear data strategy and goals, hidden agendas, lack of leadership commitment, and too much focus on gathering data and building models and technology solutions. Other researchers (Picciano, 2012) have pointed to challenges related to the pressure to meet accreditation and quality assurance requirements, a time-consuming process that results in data being outdated; challenges relating to technology and human capacity, where the lack of qualified HE staff impedes the ability of HEIs to analyze data effectively and translate the findings into actions; and challenges relating to data adequacy, management and access. Research findings also suggest that KPIs data is not accurate, not real-time and of poor quality, and therefore not relevant for decision making (Attaran et al., 2018; Bouwma-Gearhart & Collins, 2015). Gagliardi et al. (2018) pointed to the absence of the infrastructure and supportive culture needed to collect, process and analyze data from different institutional sources, as well as to the need to combat excuses based on resource constraints. It has also been suggested that the underlying challenges for DDDM require HEIs to undergo cultural change to shift to a data-driven mindset, improve the quality of data, and adopt reporting and data solutions (Drake & Walz, 2018). Other challenges may include the existence of multiple databases in often highly siloed HEIs, lack of standardized KPIs, ethical and privacy concerns over student and staff data when shared with other institutions for benchmarking, resistance to change and lack of vision, lack of appropriate financial resources, and slow adoption of new technology (Attaran et al., 2018; Mahroeian et al., 2017).

Thus, the literature review has revealed various aspects of performance measurement in HEIs, the concept and infrastructure of DDDM in the HE sector, and the challenges facing HEIs in implementing DDDM processes, with emphasis on KPIs data. This paper aims to add to this line of literature by analyzing the perceptions of HE leaders on the use and infrastructure of KPIs data, and by exploring the challenges facing Saudi HEIs in using KPIs data for decision-making purposes.

Data Collection & Research Methodology

The research reported in this study employed a survey design. The survey was administered online to examine the challenges of using KPIs data to inform the decision-making process of senior academic and administrative leaders in a public Saudi University College (called the University hereafter). The University was purposely selected on the basis of its experience with strategic planning, quality assurance and accreditation, as well as KPIs data collection and analysis (Kiula et al., 2019). A questionnaire derived from major themes in the literature was used as the main instrument for collecting data about the use of KPIs data in decision making within the University, as well as the major challenges facing senior executives in the University in this process. The survey consisted of 39 questions. Five questions covered general information, and 7 questions covered the participants’ perception of the objective, development, and use of KPIs data; responses to the latter were summarized using descriptive statistics. A further 26 questions covered the participants’ perception of the challenges for the utilization of KPIs data in the decision-making process, and were measured on a 5-point Likert scale (1=strongly disagree, 2=disagree, 3=neutral, 4=agree, and 5=strongly agree). The last question was an open question for the participants’ comments.

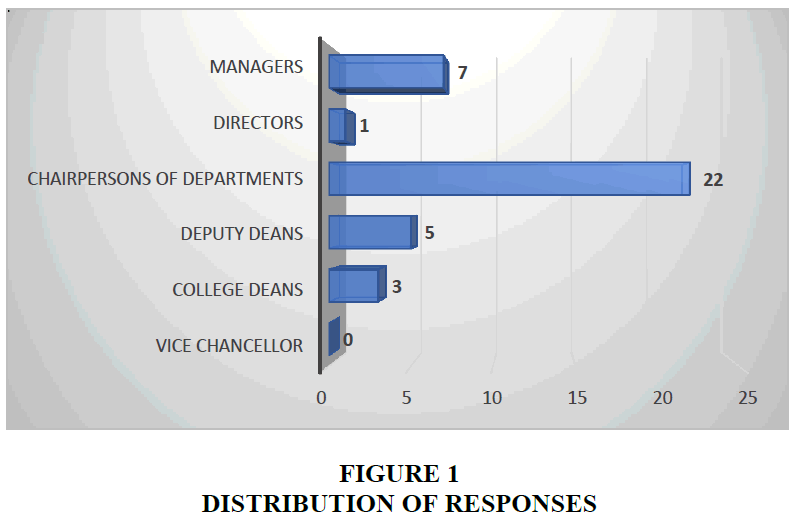

The ultimate goal of the research is to explore the University leaders’ perception of the use of KPIs data in decision making and the associated challenges; hence, participants were mainly senior managers with roles associated with strategic planning and decision-making within the University. Specifically, the survey population included the vice chancellor, college deans, deputy deans, chairpersons of departments, directors, and managers (Figure 1).

The participants were briefed on the objectives of the study, and were chosen based on their management level and experience in working with KPIs data. There were 31 closed-ended questions in the general information and Likert scale sections, which were divided in the analysis into 26 dependent variables and 5 independent variables. The 7 key questions or themes were formulated around the objectives, development and use of KPIs data, including: the purpose of collecting KPIs data in the University; the technological solutions and applications used to collect, store and visualize the KPIs data; and the uses of KPIs data within the institution. Participants were provided with an open-ended section at the end of the Likert scale section to elaborate on their responses in the form of qualitative data. The section on the challenges includes key questions grouped under organizational and cultural challenges, technology and infrastructure challenges, human capacity challenges, and data quality challenges. The link to the online questionnaire was sent to 50 senior managers, 40 males and 10 females (the University has few females in senior management positions), and 38 responses were received, a 76% response rate. Of the respondents, 8 were females (an 80% response rate) and 30 were males (a 75% response rate).
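The response rates quoted above follow directly from the counts of invitations and responses. A minimal Python sketch of that arithmetic is given here; the function name `response_rate` and the dictionary layout are illustrative assumptions and not part of the study.

```python
def response_rate(responses: int, invited: int) -> float:
    """Return the response rate as a percentage."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return 100 * responses / invited


# Counts reported in the text, as (responses, invited)
groups = {"overall": (38, 50), "female": (8, 10), "male": (30, 40)}

rates = {name: response_rate(r, n) for name, (r, n) in groups.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.0f}%")
```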

Data Analysis and Results

The data collected was analyzed using IBM SPSS Statistics version 20. Descriptive statistics including percentages, frequencies, and graphical representations were used to summarize the results.

The survey questionnaire was tested for reliability using Cronbach’s alpha. According to Chen (2009), Cronbach’s alpha is one of the most popular methods of assessing reliability. It is useful when the measuring tool has many similar questions or items to which the participant can respond (Cooper & Schindler, 2008). The value of alpha measures the internal consistency or homogeneity of the items. According to Raykov (1997), high correlations among the items are associated with a high alpha value (Chen, 2009). The value of alpha ranges from 0 to 1, and the higher the alpha, the higher the reliability. The calculated alpha was 0.926, as shown in Table 1 below, which means that the research measurement tool and the data generated are highly reliable and consistent.

Table 1: Reliability Statistics

| Cronbach's Alpha | Cronbach's Alpha Based on Standardized Items | N of Items |
|---|---|---|
| 0.926 | 0.922 | 31 |
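For readers unfamiliar with the statistic, the sketch below shows one common way Cronbach’s alpha is computed from item scores: alpha = k/(k-1) × (1 − sum of item variances / variance of total scores). The study itself used SPSS; the function name and the sample responses here are hypothetical and are not the survey data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])  # number of items
    if k < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    # Sample variance of each respondent's total score
    total_var = variance([sum(row) for row in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point Likert responses (4 respondents x 3 items), NOT the study's data
sample = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 4, 3]]
alpha = cronbach_alpha(sample)
print(round(alpha, 3))
```

Items that move together across respondents push the ratio of summed item variances to total-score variance down, which is why highly correlated items yield an alpha close to 1.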

The Perceived Objectives of KPIs Data

When the participants were asked what first comes to mind when they hear “KPIs data”, the majority of respondents (71%) said that they think of performance measurement, while only 2.6% think of KPIs data as a decision-making support tool (Table 2).

Table 2: What First Comes to Your Mind When You Hear KPIs Data?

| KPI Data Perception | Frequency | Percent | Valid Percent | Cumulative Percent |
|---|---|---|---|---|
| Academic Accreditation | 4 | 10.5 | 10.5 | 10.5 |
| Strategic Planning | 6 | 15.8 | 15.8 | 26.3 |
| Decision Making | 1 | 2.6 | 2.6 | 28.9 |
| Performance Measurement | 27 | 71.1 | 71.1 | 100.0 |
| Total | 38 | 100.0 | 100.0 | |
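The percentages in Table 2 can be reproduced from the raw frequencies. The following is a small illustrative sketch (the helper `frequency_table` is an assumed name, not something used in the study) that derives the percent and cumulative percent columns.

```python
# Frequencies as reported in Table 2
counts = {
    "Academic Accreditation": 4,
    "Strategic Planning": 6,
    "Decision Making": 1,
    "Performance Measurement": 27,
}


def frequency_table(counts):
    """Return (label, frequency, percent, cumulative percent) rows."""
    total = sum(counts.values())
    rows, running = [], 0
    for label, freq in counts.items():
        running += freq
        rows.append((label, freq,
                     round(100 * freq / total, 1),
                     round(100 * running / total, 1)))
    return rows


table = frequency_table(counts)
for row in table:
    print(row)
```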

The above finding is consistent with participants’ responses when asked to provide the relative importance of the reasons for preparing KPIs data, where the highest level of importance was given to “to show commitment towards performance improvement”.

The Technological Infrastructure of KPIs Data

The majority of the responses (75%) suggest that Microsoft Excel is the main application for extracting, analyzing and monitoring the KPIs data, while 15% indicate an in-house developed application and 8% rely on outsourced software packages. The data revealed that the majority of departments, sections, units, centers, and deanships manually import student information, finance and administrative data into the KPI application used, and that the application lacks direct connectivity with the University’s database systems. Almost 77% of the responses suggested that senior management in the University monitor and visualize the KPIs through periodic reports, and only 16% use an instant dashboard application. This result may explain why KPIs data is used mostly for performance measurement, which is usually conducted periodically (annually, biannually or quarterly), rather than for decision making, which requires real-time updated data.

The Utilization of KPIs Data

The participants were asked to rate the importance of various uses of KPIs data. Their responses revealed that the most highly rated use of KPIs data is “to set strategic goals and target metrics”, followed by “to predict future performance, and set targets”, with “to make informed decisions” in third place. Our data also revealed that 72% of responses indicate that the different units or departments within the University share the KPIs data only internally or do not share it at all, while only 28% share it for external benchmarking.

Principal Component & Factor Analysis

Principal component analysis (PCA) was conducted to investigate the correlations among subsets of responses, enabling the researcher to group the twenty-six manifest variables into latent variables (Shahid et al., 2018). Prior to conducting the PCA, the data were screened using the correlation matrix, followed by Bartlett’s test of sphericity and the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy. Table 3 below shows that Bartlett’s test of sphericity yielded a p-value of less than 0.001, and a KMO value of 0.627.

Table 3: KMO and Bartlett's Test

| Test | Statistic | Value |
|---|---|---|
| Kaiser-Meyer-Olkin Measure of Sampling Adequacy | | 0.627 |
| Bartlett's Test of Sphericity | Approx. Chi-Square | 748.869 |
| | df | 325 |
| | Sig. | 0.000 |

Moreover, inspection of the correlation matrix generated by SPSS reveals a substantial number of correlations greater than 0.30, and almost 33% of the correlations are significant at the 0.01 level. These results suggest that the data are valid and adequate for PCA and factor analysis.
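As a consistency check, the 325 degrees of freedom reported for Bartlett’s test match the standard formula p(p-1)/2 for p = 26 variables. The sketch below also shows the usual chi-square approximation for the test statistic; the determinant of the correlation matrix is not reported in the paper, so `det_r` is a placeholder input and both function names are illustrative assumptions.

```python
import math


def bartlett_df(p: int) -> int:
    """Degrees of freedom for Bartlett's test of sphericity with p variables."""
    return p * (p - 1) // 2


def bartlett_chi_square(n: int, p: int, det_r: float) -> float:
    """Chi-square approximation from the determinant of the correlation matrix.

    n is the sample size, p the number of variables, det_r = |R|.
    """
    if not 0 < det_r <= 1:
        raise ValueError("det_r must lie in (0, 1]")
    return -((n - 1) - (2 * p + 5) / 6) * math.log(det_r)


df = bartlett_df(26)  # 26 Likert items were entered into the PCA
print(df)
```

A determinant of exactly 1 (an identity correlation matrix, meaning no correlation among items) gives a statistic of zero, so larger statistics indicate stronger departures from sphericity.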

For factor analysis, the correlation matrix is used to compute the factor scores and extract a factor matrix. The loadings of each variable are interpreted to check the underlying structure of the variables (Hair et al., 2019). Table 4 below shows the factor analysis results when applying the latent variables criteria: based on the eigenvalues, 7 components out of 26 are retained. These seven components account for 78.2% of the variance of the 26 variables.

Table 4: Total Variance Explained & Component Factor Analysis

| Component | Initial Eigenvalues: Total | Initial Eigenvalues: % of Variance | Initial Eigenvalues: Cumulative % | Extraction Sums of Squared Loadings: Total | Extraction Sums of Squared Loadings: % of Variance | Extraction Sums of Squared Loadings: Cumulative % | Rotation Sums of Squared Loadings: Total | Rotation Sums of Squared Loadings: % of Variance | Rotation Sums of Squared Loadings: Cumulative % | Communalities |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 10.621 | 40.851 | 40.851 | 10.621 | 40.851 | 40.851 | 4.207 | 16.182 | 16.182 | 0.738 |
| 2 | 2.621 | 10.080 | 50.931 | 2.621 | 10.080 | 50.931 | 3.354 | 12.901 | 29.083 | 0.763 |
| 3 | 1.990 | 7.652 | 58.583 | 1.990 | 7.652 | 58.583 | 2.962 | 11.394 | 40.477 | 0.804 |
| 4 | 1.669 | 6.417 | 65.000 | 1.669 | 6.417 | 65.000 | 2.922 | 11.239 | 51.716 | 0.824 |
| 5 | 1.254 | 4.822 | 69.822 | 1.254 | 4.822 | 69.822 | 2.662 | 10.239 | 61.954 | 0.811 |
| 6 | 1.166 | 4.483 | 74.305 | 1.166 | 4.483 | 74.305 | 2.147 | 8.258 | 70.212 | 0.749 |
| 7 | 1.007 | 3.872 | 78.177 | 1.007 | 3.872 | 78.177 | 2.071 | 7.965 | 78.177 | 0.869 |
| 8 | 0.780 | 3.000 | 81.177 | | | | | | | 0.795 |
| 9 | 0.727 | 2.798 | 83.974 | | | | | | | 0.877 |
| 10 | 0.610 | 2.345 | 86.319 | | | | | | | 0.702 |
| 11 | 0.591 | 2.274 | 88.594 | | | | | | | 0.817 |
| 12 | 0.466 | 1.793 | 90.386 | | | | | | | 0.709 |
| 13 | 0.395 | 1.520 | 91.907 | | | | | | | 0.818 |
| 14 | 0.367 | 1.412 | 93.318 | | | | | | | 0.840 |
| 15 | 0.344 | 1.323 | 94.641 | | | | | | | 0.865 |
| 16 | 0.307 | 1.182 | 95.823 | | | | | | | 0.767 |
| 17 | 0.253 | 0.973 | 96.796 | | | | | | | 0.841 |
| 18 | 0.207 | 0.798 | 97.594 | | | | | | | 0.711 |
| 19 | 0.163 | 0.625 | 98.219 | | | | | | | 0.918 |
| 20 | 0.125 | 0.479 | 98.699 | | | | | | | 0.646 |
| 21 | 0.113 | 0.436 | 99.135 | | | | | | | 0.724 |
| 22 | 0.080 | 0.310 | 99.444 | | | | | | | 0.867 |
| 23 | 0.074 | 0.283 | 99.727 | | | | | | | 0.778 |
| 24 | 0.035 | 0.134 | 99.861 | | | | | | | 0.735 |
| 25 | 0.023 | 0.088 | 99.949 | | | | | | | 0.670 |
| 26 | 0.013 | 0.051 | 100.000 | | | | | | | 0.686 |

Extraction Method: Principal Component Analysis.

From Table 4, we can see that the factor analysis solution has extracted the factors in order of their importance, as measured by the variance they explain. According to Hair et al. (2019), the size of the communality is a useful indicator for evaluating the amount of variance in each variable that is accounted for by the factor solution. According to Gupta and Gupta (2011), the interpretation of the initial factor loading pattern is difficult and theoretically meaningless; therefore, the factor matrix has to be rotated to redistribute variance from earlier factors to later ones. However, given the significance level, such rotation would not result in a significant change in the explanatory power of the rotated factors.
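The retention of seven components can be reproduced from the initial eigenvalues in Table 4. The sketch below assumes the common eigenvalue-greater-than-one (Kaiser) cutoff; the paper states only that eigenvalues were used, so the cutoff and the function name `kaiser_retained` are assumptions made for illustration.

```python
# Initial eigenvalues as reported in Table 4 (26 components)
eigenvalues = [
    10.621, 2.621, 1.990, 1.669, 1.254, 1.166, 1.007, 0.780, 0.727,
    0.610, 0.591, 0.466, 0.395, 0.367, 0.344, 0.307, 0.253, 0.207,
    0.163, 0.125, 0.113, 0.080, 0.074, 0.035, 0.023, 0.013,
]


def kaiser_retained(values, threshold=1.0):
    """Keep components whose eigenvalue exceeds the threshold (assumed rule)."""
    return [v for v in values if v > threshold]


retained = kaiser_retained(eigenvalues)

# For a correlation matrix the eigenvalues sum to the number of variables,
# so each component's share of variance is eigenvalue / number of variables.
n_variables = len(eigenvalues)
explained = 100 * sum(retained) / n_variables

print(len(retained), round(explained, 1))
```

Under this assumed cutoff the retained set and its cumulative share of variance agree with the seven components and the roughly 78.2% reported in the text.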

Analysis of Variance (ANOVA)

This study was devised to explore the perception of the challenges of the use of KPIs data in decision making among the University’s senior management groups. An analysis of variance (ANOVA) was run to test whether there is a significant difference in the perceptions of the University’s senior management roles and functions regarding the challenges of using KPIs data in DDDM.

Perception of Challenges between the University Senior Managers

ANOVA was used to test for significant differences between the perceptions of senior managers in the University regarding the challenges of using KPIs data in decision making. The senior managers in the University include roles such as college deans, deputy deans, department chairpersons, directors and managers. ANOVA involves calculating estimates of variance between and within groups/roles (Gupta & Gupta, 2011). The Levene statistics for homogeneity of variances show p-values greater than 0.05 for most items, indicating that the population variances for each group are approximately equal and hence that the ANOVA assumption is met. However, Table 5 below shows that three items have significantly different variances, with significance levels below 0.05.

| Table 5 Test of Homogeneity of Variances (P Value Less than 0.05) |

| Item | Levene Statistic | df1 | df2 | Sig. |

| Personal interest and hidden agenda in the use of KPIs data | 4.552 | 3 | 33 | 0.009 |

| The lack of qualified and skilled staff such as data and statistical analysis specialist | 3.518 | 3 | 33 | 0.026 |

| Lack of institutional data governance and standards | 5.481 | 3 | 33 | 0.004 |
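The two statistics discussed above can be sketched in a few lines of standard-library Python. This is an illustration only, not the authors' procedure: the response data are hypothetical, the function names are invented for this example, and the mean-centred form of Levene's test (the ANOVA F statistic computed on absolute deviations from each group mean) is assumed.

```python
from statistics import fmean


def one_way_anova(groups):
    """Return one-way ANOVA table entries for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = fmean(all_scores)
    group_means = [fmean(g) for g in groups]

    # Between-groups variation: group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2
                     for g, m in zip(groups, group_means))
    # Within-groups variation: scores around their own group mean.
    ss_within = sum((x - m) ** 2
                    for g, m in zip(groups, group_means) for x in g)

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return {
        "ss_between": ss_between, "ss_within": ss_within,
        "df_between": df_between, "df_within": df_within,
        "ms_between": ms_between, "ms_within": ms_within,
        "f": ms_between / ms_within,
    }


def levene_statistic(groups):
    """Mean-centred Levene statistic: ANOVA F on absolute deviations."""
    deviations = [[abs(x - fmean(g)) for x in g] for g in groups]
    return one_way_anova(deviations)["f"]


# Hypothetical 5-point Likert responses to one survey item, grouped by role.
responses = {
    "deans": [4, 5, 4, 3, 4],
    "chairpersons": [3, 4, 4, 5, 3],
    "directors": [4, 4, 5, 4, 3],
}
table = one_way_anova(list(responses.values()))
levene_w = levene_statistic(list(responses.values()))
print(table["df_between"], table["df_within"], round(table["f"], 3))
print(round(levene_w, 3))
```

Turning either statistic into a significance value requires the F distribution with the corresponding degrees of freedom, which a statistics package would normally supply; that step is left out of this sketch.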

The ANOVA for the perceptions of the University senior management groups revealed no significant difference between the perceptions of the four groups about the challenges of using KPIs data in decision making. Table 6 shows that the significance values of the F tests are greater than 0.05 for all variables, indicating that there are no significant differences in the perceptions of the University senior management roles regarding the challenges of using KPIs data in DDDM.

| Table 6 ANOVA Summary Statistics of the University Senior Management Roles (Lower & Upper Scores) |

| Challenge | Source | Sum of Squares | df | Mean Square | F | Sig. |

| Organizational & Cultural Challenges | Between Groups | 1.432-6.664 | 4 | 0.365-1.930 | 0.257-1.159 | 0.346-0.904 |

| | Within Groups | 35.159-63.678 | 33 | | | |

| | Total | 36.868-70.342 | 37 | | | |

| Technological & Infrastructure Challenges | Between Groups | 2.917-12.750 | 4 | 0.729-3.188 | 0.581-2.590 | 0.055-0.678 |

| | Within Groups | 34.958-51.030 | 33 | | | |

| | Total | 38.842-57.816 | 37 | | | |

| Human Capacity Challenges | Between Groups | 3.009-10.132 | 4 | 0.752-2.533 | 0.649-1.970 | 0.122-0.632 |

| | Within Groups | 38.255-52.687 | 33 | | | |

| | Total | 41.263-59.711 | 37 | | | |

| Data Quality Challenges | Between Groups | 1.894-9.104 | 4 | 0.474-2.276 | 0.328-1.555 | 0.209-0.857 |

| | Within Groups | 25.901-48.291 | 33 | | | |

| | Total | 29.368-57.395 | 37 | | | |
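As a reading aid for Table 6, the standard one-way ANOVA relationships between its columns are sketched below. The symbols ($SS_B$, $SS_W$, $df_B$, $df_W$, $MS_B$, $MS_W$) are notation assumed here, not the paper's; the worked figures are the Technological & Infrastructure bounds reported in the table.

```latex
MS_B = \frac{SS_B}{df_B}, \qquad
MS_W = \frac{SS_W}{df_W}, \qquad
F = \frac{MS_B}{MS_W}

% Technological & Infrastructure Challenges, between groups (df_B = 4):
\frac{2.917}{4} \approx 0.729, \qquad \frac{12.750}{4} \approx 3.188
```

Because the table reports only the lowest and highest values across items, the bounds in different columns need not come from the same item, so the F range cannot be recomputed from the reported sums of squares alone.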

Academicians vs Administrators’ Perception of Challenges of KPIs Data Utilization

To analyze the difference between the perceptions of academicians and administrators regarding the challenges of using KPIs data in decision making, an ANOVA test was carried out. The findings show no significant difference between the perceptions of academicians and administrators regarding the organizational and cultural challenges, except in one item, “Personal interest and hidden agenda in the use of KPIs data”, which shows a significant difference between the two groups with a significance level of 0.023 (less than 0.05). With regard to technological and infrastructure challenges, there is a significant difference between the perceptions of academicians and administrators in two items: the tremendous cost of technology associated with collecting, developing, analyzing and storing the KPIs data (Sig. = 0.012), and the huge differences in data formats between the various institutional functions (Sig. = 0.032). In the human capacity challenges, there is a significant difference between the perceptions of the two groups in two items: the lack of qualified and skilled staff such as data and statistical analysis specialists (Sig. = 0.021), and the slow cultural change needed to shift to a data-driven mindset (Sig. = 0.023). Surprisingly, the analysis reveals no significant difference between the perceptions of academicians and administrators regarding the data quality challenges factor, as the significance values for the two groups are greater than 0.05 in all items.

Discussion

This research has explored and identified some of the challenges facing higher education in the Kingdom of Saudi Arabia; in general, academics, administrators, and the various university roles have similar perceptions of these challenges. We observed a range of perceptions of the key challenges facing the university in effectively using KPIs data to make data-driven decisions, with no significant difference between these perceptions across the university functions and roles, apart from a few exceptions in some items. These exceptions centred on the political agenda in the use of KPIs data, the lack of skilled personnel to work with the data, and the fragile data governance and standards. One possible explanation is that senior managers at different levels and functions hold different views on how institutional resources should be used in favour of DDDM, as expressed by one of the senior university officials: “having a robust system in place, with clear ownership, transparency, accountability, dedicated and qualified staff we can gain the fruits out of any institutional or departmental KPIs”. Although collecting KPIs data at institutional, departmental and program levels is common practice in most HEIs in the KSA, universities need to explore ways of adding value to existing KPIs data to enable DDDM processes. With awareness of the challenges, HEIs can capitalize on and add value to their KPIs data for more effective decisions. It is also essential that university senior management remains committed to the idea, buys into it, and is ready to support it (Attaran et al., 2018). This idea was highlighted by Bouwma-Gearhart & Collins (2015), who argue that successful and competitive HEIs rely on effective DDDM, but that the mere collection and provision of data does not necessarily lead to high-quality decisions or improvements in educational and learning processes.

Provided that university senior management and leaders share common perceptions regarding the challenges of using KPIs data for making informed decisions, it would be easier to engage various stakeholders in the plans and efforts to address the challenges.

Conclusion

With the introduction of the new higher education law, HEIs in Saudi Arabia are moving towards more performance-based educational and managerial activities. Therefore, universities in Saudi Arabia may need to invest in improving the quality of their KPIs data and the underlying infrastructure, as well as in cultural change among both academics and administrators. Future research could focus on investigating the value of such investment efforts. Furthermore, this study draws on data from a single public university, which might limit the generalization of the findings. To overcome this limitation, future research could increase the number of public institutions studied and diversify the managerial settings to include private universities, in the form of a comparative analysis, to increase the internal and external validity of the research findings.

References

Abdelsalam, M.M. (2018). Suggestion to Strengthen the Sustainable Competitiveness of the Higher Education Sector in the Kingdom of Saudi Arabia. The Economic Researcher Review, 6(10), 15-35.

Alsmadi, M.K., Al-Marashdeh, I., Alzaqebah, M., Jaradat, G., Alghamdi, F.A., Mustafa A Mohammad, R., Alshabanah, M., Alrajhi, D., Alkhaldi, H., Aldhafferi, N., Alqahtani, A., Badawi, U.A., & Tayfour, M. (2021). Digitalization of learning in Saudi Arabia during the COVID-19 outbreak: A survey. Informatics in Medicine Unlocked, 25, 100632.

Attaran, M., Stark, J., & Stotler, D. (2018). Opportunities and challenges for big data analytics in US higher education: A conceptual model for implementation. Industry and Higher Education, 32(3), 169-182.

Badawy, M., Abd El-Aziz, A.A., & Hesham, H. (2018). Exploring and Measuring the Key Performance Indicators in Higher Education Institutions. International Journal of Intelligent Computing and Information Science, 18(1), 37-47.

Belhadj, M. (2019). BI as a Solution to Boost Higher Education Performance. Finance and Business Economics Review, 3(2), 20-35.

Bouwma-Gearhart, J., & Collins, J. (2015). What we Know about Data-Driven Decision Making in Higher Education: Informing Educational Policy and Practice. In the Proceedings of the 19th International Academic Conference – IISES, Florence.

Brown, M. (2020). Seeing students at scale: how faculty in large lecture courses act upon learning analytics dashboard data. Teaching in Higher Education, 25(4), 384-400.

Camm, J.D., Cochran, J.J., Fry, M.J., Ohlmann, J.W., Anderson, D.R., Sweeney, D.J., & Williams, T.A. (2019). Business Analytics (3rd edition), Cengage Learning, Inc., Boston.

Chan, V. (2015). Implications of key performance indicator issues in Ontario universities explored. Journal of Higher Education Policy and Management, 37(1), 41-51.

Chen, G.M. (2009). Intercultural effectiveness. In L.A. Samovar, R.E. Porter, & E.R. McDaniel (Eds.), Intercultural communication: A reader (pp. 393-401). Boston, MA: Wadsworth.

Cooper, D.R., & Schindler, P.S. (2008). Business Research Methods (12th ed.). Retrieved from The University of Phoenix eBook Collection database. ISBN: 9780073401751.

Daniel, B. (2015). Big Data and analytics in higher education: Opportunities and challenges. British Journal of Educational Technology, 46(5), 904-920.

Drake, B.M., & Walz, A. (2018). Evolving business intelligence and data analytics in higher education. New Directions for Institutional Research, 2018(178), 39-52.

Escobar, C.R., & Toledo, M.R. (2018). Innovative Model of Management and University Quality through Results Indicators; the KPI's Cross Impact Matrix of the Universidad Bernardo O’Higgins. Valahian Journal of Economic Studies, 9(23), 69-82.

ETEC (2020). Education & Training Evaluation Commission, Saudi Arabia.

Gagliardi, J., Parnell, A., & Carpenter-Hubin, J. (2018). The analytics revolution in higher education. Change: The Magazine of Higher Learning, 50(2), 22-29.

Gordon, L.C., Gratz, E., Kung, D.S., Dyck, H., & Lin, F. (2017). Strategic Analysis of the Role of Information Technology in Higher Education - A KPI-centric model. Communications of the IIMA, 15(1), 17-34.

Gupta, S.L., & Gupta, H. (2011). SPSS 17.0 for Researchers. International Book House PVT. Ltd, New Delhi.

Gutierrez, F., Seipp, K., Ochoa, X., Chiluiza, K., De Laet, T., & Verbert, K. (2020). LADA: A learning analytics dashboard for academic advising. Computers in Human Behavior, 107, 105826.

Hair, J.F., Black, W.C., Babin, B.J., & Anderson, R.E. (2019). Multivariate Data Analysis (8th edition). Cengage Learning EMEA, Hampshire, UK.

Ismail, T.H., & Al-Thaoiehie, M. (2015). A balanced scorecard model for performance excellence in Saudi Arabia's higher education sector. International Journal of Accounting, Auditing and Performance Evaluation, 3(4), 255.

Kairuz, T., Andries, L., Nickloes, T., & Truter, I. (2016). Consequences of KPIs and Performance Management in Higher Education. International Journal of Educational Management, 30(6), 881-893.

Kiula, M., Waiganjo, E., & Kihoro, J. (2019). The Role of Leadership in Building the Foundations for Data Analytics, Visualization and Business Intelligence. IST-Africa 2019 Conference Proceedings, Paul Cunningham and Miriam Cunningham (Eds), 8-10 May 2019, Nairobi, Kenya.

Lepenioti, K., Bousdekis, A., Apostolou, D., & Mentzas, G. (2020). Prescriptive analytics: Literature review and research challenges. International Journal of Information Management, 50, 57-70.

Mahmoud, A.S., Sanni-Anibire, M.O., Hassanain, M.A., & Ahmed, W. (2019). Key performance indicators for the evaluation of academic and research laboratory facilities. International Journal of Building Pathology and Adaptation, 37(2), 208-230.

Mahroeian, H., Daniel, B., & Russell, B. (2017). The perceptions of the meaning and value of analytics in New Zealand higher education institutions. International Journal of Educational Technology in Higher Education, 14(35), 1-17.

Mati, Y. (2018). Input resources indicators in use for accreditation purpose of higher education institutions. Performance Measurement and Metrics, 19(3), 176-185.

NCAAA (2020). National Center for Academic Accreditation and evaluation. Education & Training Evaluation Commission, Saudi Arabia.

Nguyen, A., Gardner, L., & Sheridan, D. (2020). Data Analytics in Higher Education: An Integrated View. Journal of Information Systems Education, 31(1), 61-71.

Ogbeifunn, E., Mbohwa, C., & Pretorius, J.H.C. (2016). Developing KPIs for Organizations with Similar Objectives. Proceedings of the 2016 International Conference on Industrial Engineering and Operations Management Kuala Lumpur, Malaysia, March 8-10, 2016.

Picciano, A.G. (2012). The Evolution of Big Data and Learning Analytics in American Higher Education. Journal of Asynchronous Learning Networks, 16(3), 9-20.

Raykov, T. (1997). Scale reliability, Cronbach’s coefficient alpha, and violations of essential tau-equivalence with fixed congeneric components. Multivariate Behavioral Research, 32(4), 329-353.

Santoso, L.W., & Yulia (2017). Data Warehouse with Big Data Technology for Higher Education. Procedia Computer Science, 124, 93-99.

Schalekamp, J., Vlaming, M., & Manintveld, B. (2015). Today’s organizational challenge from gut feeling to data-driven decision making. Deloitte, Netherland.

Shahid, H., Alexander, A., & Abdalla, T. (2018). An Exploratory Study for Opening Accounting Undergraduate Program in Saudi Arabia: The Stakeholders’ Perception & Need Analysis. Advances in Social Sciences Research Journal, 5(4), 212-227.

Shawahna, R. (2020). Development of Key Performance Indicators for Capturing Impact of Pharmaceutical Care in Palestinian Integrative Healthcare Facilities: A Delphi Consensus Study. Evidence-Based Complementary and Alternative Medicine, 2020, 7527543.

Suryadi, K. (2007). Framework of Measuring Key Performance Indicators for Decision Support in Higher Education Institution. Journal of Applied Sciences Research, 3(12), 1689-1695.

Thornton, C., Miller, P., & Perry, K. (2020). The impact of group cohesion on key success measures in higher Education. Journal of Further and Higher Education, 44(4), 542-553.

Varouchas, E., Sicilia, M., & Sánchez-Alonso, S. (2018). Towards an integrated learning analytics framework for quality perceptions in higher education: a 3-tier content, process, engagement model for key performance indicators. Behaviour & Information Technology, 37(10-11), 1129-1141.

Waheed, H., Hassan, S., Aljohani, N.R., Hardman, J., Alelyani, S., & Nawaz, R. (2020). Predicting academic performance of students from VLE big data using deep learning models. Computers in Human Behavior, 104, 106189.

Received: 12-Jan-2022, Manuscript No. jmids-22-10849; Editor assigned: 14-Jan-2022, PreQC No. jmids-22-10849(PQ); Reviewed: 18-Jan-2022, QC No. jmids-22-10849; Revised: 22-Jan-2022, Manuscript No. jmids-22-10849(R); Published: 28-Jan-2022