Research Article: 2023 Vol: 26 Issue: 5S

Critical Appraisal of Worksystem Models

Roli Dave, National Institute of Industrial Engineering, Mumbai

Vivek Khanzode, National Institute of Industrial Engineering, Mumbai

Rauf Iqbal, National Institute of Industrial Engineering, Mumbai

Vikram Neekhra, Armoured Static Workshop, Ahmednagar

Citation Information: Dave, R., Khanzode, V., Iqbal, R., & Neekhra, V. (2023). Critical Appraisal of Worksystem Models. Journal of Management Information and Decision Sciences, 26(S5), 1-23.

Abstract

Accident causation models (or theories) provide the theoretical foundation of safety science by offering a framework for failure analysis and prevention. The literature presents worksystem failure analysis models based on three schools of thought: (a) human as cause, (b) system as cause, and (c) system-person interaction as cause. In this study, models under these paradigms, namely the Human-Machine model (1980), Interaction and Coupling Model (1984), Swiss Cheese Model (1990), Domino theory model (1998), Entropy model (2003), Human error reliability assessment model (1990), Descriptive Human-Machine model (2003) and Random Cluster model (2017), are systematically appraised. These seminal models examine one or more essential components of a worksystem (human, machine, workspace, work environment, and work organization) and the interactive effects between them. With growing technology and complexity in worksystems, no single approach is adequate to evaluate worksystem failures. The evaluation in this study revealed that Leamon's Human-Machine model (1980) is the most appropriate and fundamental worksystem model, as it gives a holistic explanation of all components of a worksystem and of the inter-component interactions. To support this conclusion, the paper analyzes the failure of the Lion Air-610 air crash (2018) using Leamon's Human-Machine worksystem model. Some lacunae were observed in Leamon's model in the light of highly complex and automated worksystems, which call for future research on worksystem models.

Keywords

Worksystem Model, Failure Analysis Models, Worksystem Failure, Worksystem Interactions, Lion Air-610 Crash, Worksystem Error, Causation.

Introduction

The industrial worksystem has always been an important focus area for ergonomics researchers, for the optimization of worksystems, ergonomics, safety, and the evaluation of incidents and accidents (DeCamp & Herskovitz, 2015). Worksystems are in transition owing to changing technology, expanding workspaces, and performance under varying environments, regulated by new organizational guidelines and the application of artificial intelligence (Dave & Khanzode, 2023; Koeppen, 2012). Worksystem models and evaluation methods therefore also need to evolve to keep pace with this transition. Current worksystems are tightly coupled and increasingly complex, and this complexity is only marginally balanced by technological and organizational solutions (Alter, 2018). Normal accident theory draws attention to high-risk technologies and to failure events in various worksystems: despite redundancies and safety mechanisms, an unexpected occurrence can make worksystem failure inevitable.

There was a time when worksystem models, their components, their interactions, and their respective design issues gained academic importance. Accident investigations focused on worksystem failure analysis, generally in terms of component failures, time and place of accidents, design flaws, process (manufacturing/maintenance) lapses, and human errors (Hobbs & Williamson, 2003). These analyses are restricted in scope and lack a systemic, holistic approach to causation assessment (Alter, 2018; Dave & Khanzode, 2023; Mehrdad et al., 2013).

To analyze worksystem performance and failure causation, six decades of literature on worksystem evaluation methods have examined multiple aspects. Broadly, worksystem evaluations have followed three schools of thought: (i) the human failure approach, (ii) the system failure approach, and (iii) the system-person interaction approach (Khanzode et al., 2012). The first school of thought identifies human error as the main reason for system failure. Models were developed to identify human errors and their causes, and techniques to mitigate them. Failures were attributed to the human tendency to become casual over time, to take short breaks, and to take risks by ignoring procedures and warnings (Dhillon & Liu, 2006). The second school of thought emphasizes system failure. According to it, every system has failure probabilities ab initio, and these failures result from worksystem degradation over time: plants and machinery suffer wear and tear, subject to the laws of nature. It has also been argued that limitations in design are the main cause of human error, as missing design parameters lead humans to commit errors.

Bridger (2008) advocated the third school of thought, arguing that interactions are important when evaluating system performance and failures.

Different models of worksystem evaluation draw on multiple paradigms (Brewer & Hsiang, 2002). The tussle among these paradigms hinders clarity of approach for effective worksystem design. Moreover, worksystem failure analyses are limited to post-facto accident analysis, and the human (system operator or system designer) is often blamed for worksystem failure (Ji & Zhang, 2012). With highly complex technology and automation, unexpected, unanticipated, and unobstructed interactions are likely to increase the probability of accidents and catastrophes (Marynissen & Ladkin, 2012). This study presents a critical appraisal of various worksystem models with the following objectives: (i) to compare worksystem models offered by different paradigms, and (ii) to assess the relevance of the fundamental worksystem model with a case illustration.

This critical appraisal highlights the approaches, contributions, and limitations of existing worksystem models. In the process, we argue that Leamon's Human-Machine model (1980) is the fundamental worksystem model. We then examine the utility of Leamon's model in analyzing worksystem failure through a case illustration of a recent aviation mishap (the Lion Air-610 air crash). The case illustration supports the premise that Leamon's Human-Machine model is fundamental. We also observe certain limitations of the model in capturing the interactions of complex and automated worksystems, which underscore the need to update Leamon's Human-Machine model with new interactions.

First, this article explains the methodology and the appraisal process for the different worksystem failure models. The fundamental worksystem model thus identified is then validated through a failure analysis of the Lion Air-610 air crash. Lastly, the article presents a summary and concluding remarks on the critical appraisal.

Method

The purpose of this work is to present a critical appraisal of worksystem models and to identify the fundamental worksystem model. The research then analyzes a worksystem failure using the identified fundamental worksystem model. It is operationalized through the following stepwise procedure: (i) selection of worksystem models, (ii) critical appraisal of worksystem models, (iii) identification of the fundamental worksystem model, and (iv) validation of the fundamental worksystem model through a recent case illustration.

Step I Selection of Worksystem Models

The literature classically considers three paradigms for worksystem evaluation: (a) person as the cause, (b) system as the cause, and (c) system-person interaction as the cause (Khanzode et al., 2012). These paradigms have evolved through substantial contributions from seminal models and frameworks.

The paradigm “Person as Cause” considers human error the primary reason for worksystem failure. This paradigm was developed extensively on the basis of seminal models such as the Human Error and Recovery Assessment model proposed by Kirwan (1992). These models link human error to system failures. The paradigm “System as Cause” considers the system the fundamental cause of worksystem failure; system designers are required to design robust, mistake-proof systems that anticipate probable operational errors. We found that the Random Cluster model proposed by Ronald William Day, the Swiss Cheese Model by James Reason (2000), the Entropy model by Tania Mol, and the Interaction and Coupling Model by Perrow best represent the system-as-cause paradigm. The paradigm “System-Person Interaction as a Cause” considers system-person interaction the basic tenet of worksystem failure. This paradigm rests on the critical arguments of seminal models such as the Human-Machine model by Leamon (1980) and the Descriptive Human-Machine model proposed by Bridger (2008).

Step II Critical Appraisal of Worksystem Models

The models appraised in this study are classified under three paradigms of worksystem failure causation, viz., person as cause, system as cause, and person-system interaction. As per the definition stated by ISO (2016), a worksystem has five essential components: ‘Human’, ‘Machine’, ‘Workspace’, ‘Work Environment’ and ‘Work Organization’. Not all models address all of these components, and we used them as the basis of analysis in our study. The models were compared in tabular form for their approach, contribution, evaluation techniques, coverage of worksystem components, and limitations.

Step III Identification of Fundamental Worksystem Model

Based on the critical appraisal results, the worksystem model that addressed all components of the worksystem and covered the most functional and analytical detail was considered the fundamental worksystem model.
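The coverage part of this selection rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the model names and their coverage sets are hypothetical placeholders, not the results of the appraisal, and the second criterion ("most functional and analytical detail") is qualitative, so it is deliberately left out.

```python
# Hypothetical sketch of the Step III coverage rule: keep only models whose
# appraised coverage includes all five worksystem components (ISO, 2016).
COMPONENTS = frozenset(
    {"human", "machine", "workspace", "work environment", "work organization"}
)


def missing_components(coverage: dict[str, set[str]]) -> dict[str, set[str]]:
    """For each model, return the worksystem components it does not address."""
    return {model: set(COMPONENTS - covered) for model, covered in coverage.items()}


def candidate_fundamental_models(coverage: dict[str, set[str]]) -> list[str]:
    """Return, sorted by name, the models that address every worksystem component."""
    return sorted(
        model for model, missing in missing_components(coverage).items() if not missing
    )


# Placeholder coverage table for illustration only; NOT the study's appraisal results.
coverage = {
    "Model A": {"human", "machine"},
    "Model B": set(COMPONENTS),
    "Model C": {"human", "machine", "work environment", "work organization"},
}

print(candidate_fundamental_models(coverage))  # ['Model B']
print(missing_components(coverage)["Model C"])  # {'workspace'}
```

Reporting the missing components, rather than only a pass/fail flag, mirrors how the appraisal notes the gaps of each model (for example, a framework that omits workspace or environment).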

Step IV Validation of Fundamental Worksystem Through Case Illustration

The fundamental worksystem model was validated through a secondary case analysis of the major air crash of Lion Air 610 in 2018. For this, the case narrative and the inputs of the investigating agency (the investigation report of Indonesia's National Transport Safety Committee) were examined (Komite Nasional Keselamatan Transportasi Republic of Indonesia, 2019). The events were arranged in chronological order, and those leading to the failure were identified with the help of facts stated by experts and investigation agencies. We then analyzed these events across worksystem component interactions. Since they were responsible for the accident, we term them ‘negative interactions’. These negative interactions of worksystem components were then mapped onto the fundamental worksystem model. During this process, certain limitations of the fundamental worksystem model were identified.
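The event-handling steps of this procedure (chronological ordering, filtering of negative interactions, and grouping them by component pair before mapping them onto the model) can be sketched as follows. The `Event` record and its fields are assumptions made for illustration, and the events themselves are placeholders, not facts from the investigation report.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    time: datetime
    description: str
    source: str     # worksystem component that initiates the interaction
    target: str     # worksystem component that is affected
    negative: bool  # True if the event contributed to the failure


def negative_interactions(events: list[Event]) -> list[Event]:
    """Arrange events chronologically and keep only the negative interactions."""
    return [e for e in sorted(events, key=lambda e: e.time) if e.negative]


def tally_by_interaction(events: list[Event]) -> Counter:
    """Count negative interactions per (source, target) component pair."""
    return Counter((e.source, e.target) for e in negative_interactions(events))


# Placeholder events for illustration only; not taken from the investigation report.
events = [
    Event(datetime(2000, 1, 1, 10, 0), "event B", "machine", "human", True),
    Event(datetime(2000, 1, 1, 9, 0), "event A", "work organization", "human", True),
    Event(datetime(2000, 1, 1, 11, 0), "event C", "human", "machine", False),
]

print([e.description for e in negative_interactions(events)])  # ['event A', 'event B']
print(tally_by_interaction(events))
```

The per-pair tally is one possible input to the mapping step: each (source, target) pair corresponds to an interaction that the fundamental model either represents or, revealing a limitation, does not.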

Critical Appraisal of Worksystem Models

A worksystem comprises ‘two or more persons with job design (task, skill, knowledge, autonomy, feedback etc.) utilizing software / hardware (machine, tools, program etc.) working in internal environment (light, noise, vibration etc.) / external environment (politics, culture, economic factors etc.) to accomplish the task in organizational structure’ (Hitt, 1998). The term ‘work’ denotes the effort and activities performed by humans, while the term ‘system’ refers to socio-technical aspects, which can be simple or complex within an organizational structure (Wilson, 2000).

Previously, the Expert Committee of Human Factor Ergonomics introduced Human-System Interface Technology (HSI), in which the human component of the worksystem interfaces with the other components of the system. It was used to formulate interface principles, guidelines, and specifications, with the aim of improving safety, comfort, productivity, and quality of life (Parasuraman, 2000). Thereafter, the macro-ergonomics perspective emerged, emphasizing human-organization interface technology (HOI). It held that technological, human, and environmental subsystems, and their interactions, affect worksystem design (Hendrick, 2008).

In 1978, a ‘Select Committee on Human Factor for Future’ was formed to identify the changing trends of the next two decades (1980-2000) and their implications (Hendrick & Kleiner, 2002). It was then thought that rapid developments in technology, communication, and the miniaturization of components would change the nature of work and of the human-machine interface, and that system design based on micro-ergonomics alone would encounter failure (Hendrick, 2008). To accomplish industrial goals effectively, attention to the macro-ergonomics approach would be required.

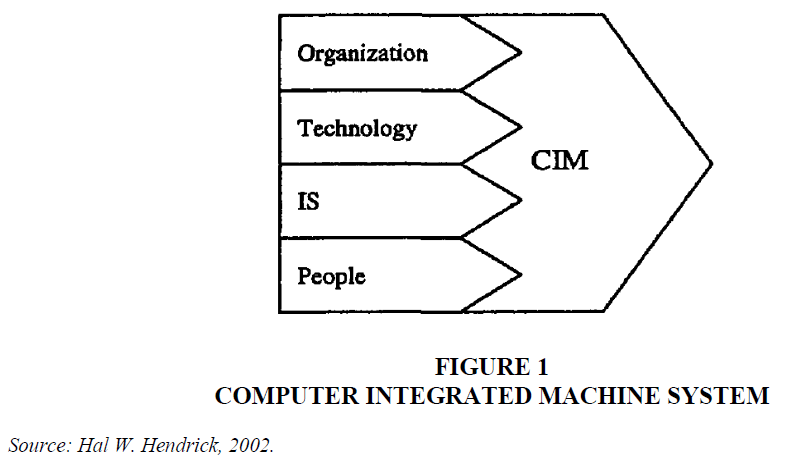

In the era of computer integrated manufacturing (CIM), the ‘computer integrated machine-system’ emerged, in which long-term competitive objectives and changing business goals were achieved by integrating people, organization, and technology with each other (Martin et al., 1990). It consists mainly of three forms of integration: (a) integration of personnel, by assuring effective communication between them; (b) human-computer integration, through suitable interface design and interaction between computers and personnel; and (c) technological integration, by assuring effective interface design and interaction between technologies. This was part of the human-centric approach then needed, in which technology was integrated with skill and knowledge. However, these concepts failed owing to management's lack of confidence in technology, resistance to change, and survival threats to the organization. The components and framework of CIM are illustrated in Figure 1. This framework does not consider the workspace or environment components, or their interactions with people and technology; its major emphasis is on the person-technology interface.

Figure 1 Computer Integrated Machine System

Source: Hal W. Hendrick, 2002.

(a) System as a Cause

Perrow stated that, with industrial advancement, humans have no option but to live with high-risk technologies, in which tightly coupled worksystems have complex interactions and thus greater catastrophic potential. His well-known Normal Accident Theory (NAT) states that ‘accidents are inevitable’ in complex, tightly coupled technological systems. Rijpma (1997) compared NAT with the High Reliability Theory (HRT) of the Berkeley school of thought and noted that even a safe worksystem design, well-defined SOPs, and close monitoring cannot prevent accidents; they can only reduce their severity (Shrivastava et al., 2009). With economic growth and consumer demand, worksystems have grown larger, with sub-systems having multiple interfaces (Parasuraman, 2000). Such worksystems have unpredictable interactions that can result in catastrophic failures.

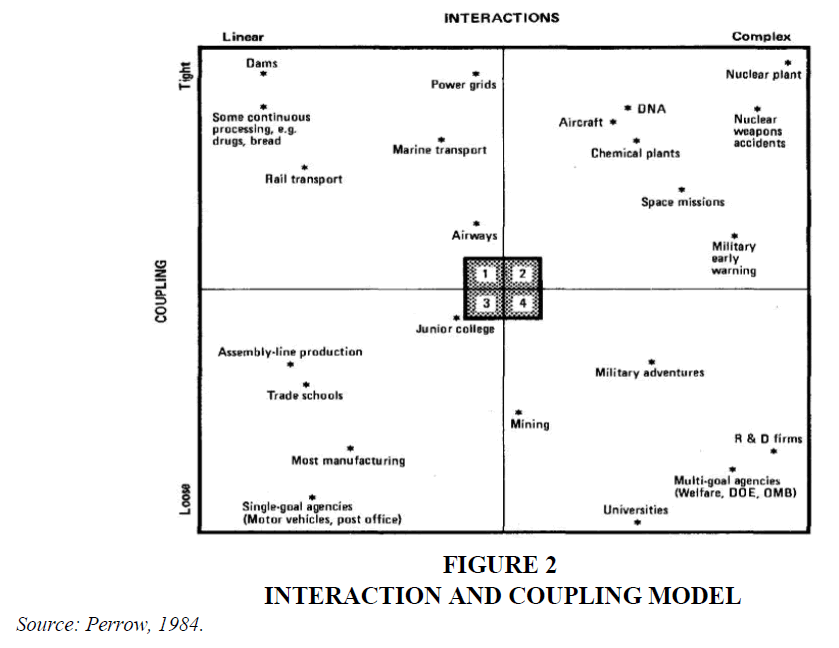

Charles Perrow, in his book ‘Normal Accidents: Living with High-Risk Technologies’, elaborated the definition of accidents. He explained the nature of interactions (complex and linear) and the key concept of coupling (loose and tight). The model given in this book is popularly known as the Interaction and Coupling chart.

This model is depicted as a matrix in which the x-axis is a continuum of system complexity, from linear to complex, and the y-axis ranges from loosely to tightly coupled systems. Various worksystems are plotted on it according to their complexity and coupling. Each worksystem lies in one of the four quadrants, which defines its accident potential. For example, a nuclear worksystem, with highly complex interactions and tight coupling, has higher catastrophic potential (Park et al., 2013). Tightly coupled worksystems are more time-dependent, and the sequence of processes cannot be altered to accommodate subsystem failures (Yin et al., 2015). Similarly, complex interactions produce unexpected, unplanned sequences that are not immediately comprehensible during a critical period. However, the model has the limitation that there is no precise way to empirically measure its two variables, i.e., interactions and coupling.
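Placing a worksystem on the chart amounts to classifying it on two axes. The sketch below assumes hypothetical 0 to 1 scores for interaction complexity and coupling and a 0.5 cut-off between halves; Perrow's chart is qualitative and, as noted above, offers no empirical measure of either variable, so the numbers are illustrative only.

```python
def perrow_quadrant(interaction: float, coupling: float, threshold: float = 0.5) -> str:
    """Place a worksystem in one of the four interaction/coupling quadrants.

    interaction: 0.0 (linear) to 1.0 (complex); coupling: 0.0 (loose) to 1.0 (tight).
    The numeric scales and the default threshold are assumptions for illustration.
    """
    for name, value in (("interaction", interaction), ("coupling", coupling)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    kind = "complex" if interaction >= threshold else "linear"
    link = "tight" if coupling >= threshold else "loose"
    return f"{kind}/{link}"


# A nuclear worksystem, rated (hypothetically) as highly complex and tightly coupled:
print(perrow_quadrant(0.9, 0.9))  # complex/tight
print(perrow_quadrant(0.2, 0.3))  # linear/loose
```

The hard threshold is the weakest part of such a sketch: a worksystem scored near 0.5 on either axis flips quadrant on a small rating change, which echoes the measurement limitation of the original model.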

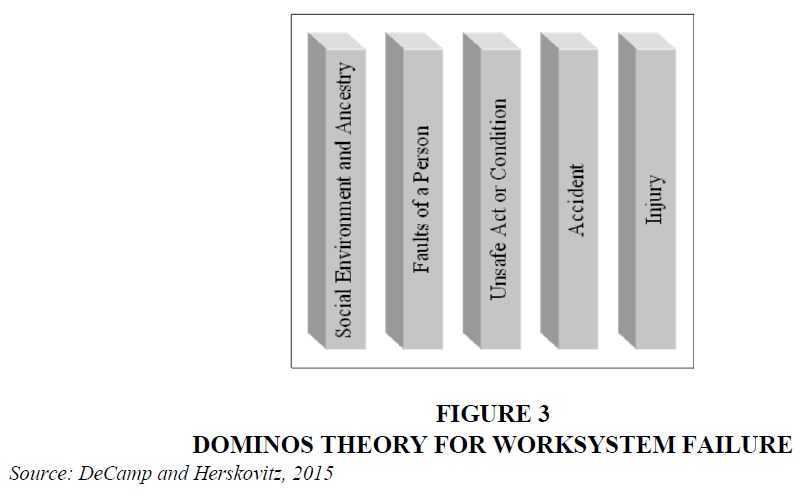

After the 1980s, advanced technology was infused into almost every sector, which led to an increased focus on accident causation models, and many lines of thought came into the limelight. In the past, a worksystem failure was seen as one cause leading to another, and the analytical approach followed a linear pattern. Heinrich explained the ‘Domino theory’ of industrial worksystem failures using the analogy of dominos falling over one another and creating a chain of events (cited by DeCamp & Herskovitz, 2015). When dominos fall, each trips the next, pushing it over, and the process continues until all the connected dominos have fallen. However, if just a single domino is removed, the entire process stops. Thus, the theory is applicable only to loosely coupled worksystems (Figure 2).

Heinrich identified five stages of accident causation. The first stage, social environment and ancestry, encompasses anything that may produce undesirable traits in people. The second stage, faults of a person, refers to personal characteristics that are conducive to accidents, including manifestations of poor character. Ignorance, such as not knowing safety regulations or standard operating procedures, is also an example of this stage. The third stage, an unsafe act or condition, is often the identifiable beginning of a specific incident. Unsafe acts may arise primarily from aberrant mental processes such as forgetfulness, inattention, poor motivation, carelessness, negligence, and recklessness. They can include actions such as starting a machine without proper warning, or failing to take appropriate preventive actions. The next stage, logically, is the accident itself: something undesirable and unintended happening. The final stage, injury, is the unfortunate outcome of some accidents; whether an accident results in injury is often a matter of chance. The most important policy implication is to remove at least one of the dominos, which can in turn lead to a healthy subculture through positive accident prevention training and seminars. An organization may not be able to weed out all people with undesirable characteristics, but it can have a procedure in place for dealing with accidents, to minimize injury and loss. This model assumes that events occur in a linear fashion. It cannot explain the multidimensional occurrence of events and the complex interactions in the worksystem that lead to failure. The impact of the environment and workspace is also missed in this theory (Figure 3).
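The linear chain and its key policy implication (remove one domino and the sequence stops) can be expressed as a short Python sketch. Representing stages as strings and "removal" as a set of blocked stages is an assumption made for illustration; the stage names follow the description above.

```python
from typing import Iterable

# Heinrich's five stages, in causal order.
STAGES = (
    "social environment and ancestry",
    "faults of a person",
    "unsafe act or condition",
    "accident",
    "injury",
)


def fallen_dominos(removed: Iterable[str] = ()) -> list[str]:
    """Tip the first domino and return the stages that fall, in order.

    A stage falls only if the previous one fell and it has not been removed,
    so removing any single stage halts the chain at that point.
    """
    blocked = set(removed)
    unknown = blocked - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stage(s): {sorted(unknown)}")
    fallen: list[str] = []
    for stage in STAGES:
        if stage in blocked:
            break
        fallen.append(stage)
    return fallen


print(fallen_dominos()[-1])                         # injury
print(fallen_dominos(["unsafe act or condition"]))  # ['social environment and ancestry', 'faults of a person']
```

Because the loop walks a single ordered sequence, the sketch also makes the model's limitation visible: there is no way to express two causes acting in parallel or combining, which is exactly what the later models address.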

Figure 3 Domino Theory for Worksystem Failure

Source: DeCamp and Herskovitz, 2015

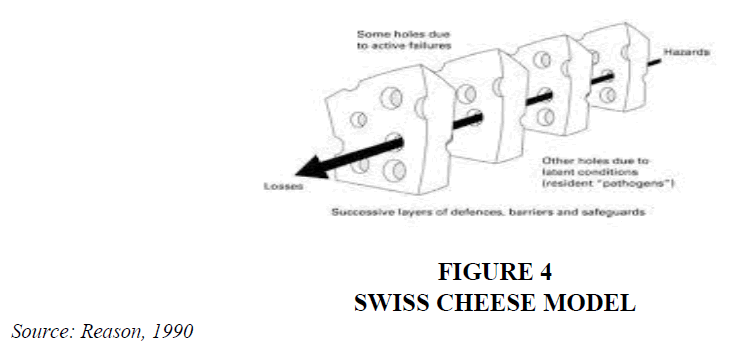

James Reason's organizational model (Reason, 1995, 2008), better known as the Swiss Cheese Model, describes accidents as occurring when several factors line up, some due to active failures and some due to latent conditions. According to this model, failure-causing factors are organizational factors, and they present themselves in a linear manner. A high-technology worksystem and a high-reliability organization have many defenses, barriers, and safeguards, which are like slices of Swiss cheese (Reason, 1990, 2000). These may be technological barriers (alarms, working lights, and interlocking switches), human barriers (quality inspectors, domain experts, operators etc.), or administrative and procedural barriers (process audits, inspections, multi-level authority protocols etc.). When all of an organization's slices (barriers) are stacked together, they represent its complete defense against the risk of failure. Each barrier may have weaknesses or slack, represented by the holes in the cheese, and each slice has holes in different places. Normally, one or more slices will cover a hole in another slice, symbolizing how some facets of an organization have strengths that compensate for the shortcomings of others. The slices keep shifting, so the positions of the holes also change, and there may be a moment when the holes hazardously line up, creating a path that goes all the way through the stack. This symbolizes a weak point common to all areas of the organization, with the greatest potential for failure.

According to the Swiss Cheese Model, a failure generally results not from a single root cause but from a combination of factors: latent errors intrinsic to a procedure, machine, or system, triggered by active errors, which are unsafe human behaviors. These errors are broadly classified as (a) active errors: unsafe acts by humans (lapses, mistakes, procedure violations etc.), and (b) latent errors: strategic decisions of the concerned authority. Latent conditions are of two types: they can translate into error-provoking conditions (e.g., inexperienced manpower, inadequate equipment, shift work etc.), or they may remain dormant in the system (loose connectors, faulty indicators/alarms, impractical safety drills etc.) and combine at a later stage to create an accident opportunity. Therefore, failure prevention methods should concentrate on the working conditions of humans and must build and reinforce the defenses or barriers. Besnard & Baxter (2003) suggested adding a technical dimension to the Swiss Cheese Model, arguing that technical aspects must be considered along with design error. Qureshi (2008) suggests that the holes in the Swiss cheese are constantly moving, holding that Reason's model presents a static view, whereas the real situation is more dynamic (Figure 4).
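The alignment idea can be made concrete by treating each slice as a set of hole positions: a hazard passes only where every slice has a hole. The discretization below (hole positions as integers on a ring of ten positions, and a `shift` helper that moves a slice's holes) is a hypothetical device for illustration, not Reason's own formalization; the shift step loosely mimics the dynamic view attributed to Qureshi (2008).

```python
def aligned_holes(layers: list[set[int]]) -> set[int]:
    """Return the hole positions shared by every defensive layer (slice).

    A non-empty result means a hazard at that position passes all barriers.
    """
    if not layers:
        raise ValueError("at least one defensive layer is required")
    return set.intersection(*layers)


def shift(layer: set[int], offset: int, width: int) -> set[int]:
    """Move every hole of a slice by `offset` positions on a ring of `width`
    positions, a crude stand-in for the shifting slices of an organization."""
    return {(pos + offset) % width for pos in layer}


WIDTH = 10               # hypothetical number of discrete hole positions per slice
technical = {1, 4}       # e.g. weak points of alarms and interlocks
human = {2, 4}           # e.g. weak points of inspectors and operators
administrative = {3, 7}  # e.g. weak points of audits and protocols

print(aligned_holes([technical, human, administrative]))  # set()
administrative = shift(administrative, 1, WIDTH)          # holes now at {4, 8}
print(aligned_holes([technical, human, administrative]))  # {4}
```

In the first call, the administrative slice covers the hole shared by the other two; after it shifts, all three holes line up and a trajectory opens, matching the description of momentary alignment above.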

Some system errors are related to the design of the worksystem, and the falling domino theory, the Swiss Cheese Model, and Perrow's models are inadequate to explain them. Designers face many constraints, such as budgetary and time limits, lack of sufficient resources, and pressures created by manager and client expectations. All these factors bear on design error (Wieringa & Stassen, 1999). It is important to note that design teams are not the only ones responsible for design error. Design planning rests on the inputs of clients, who are generally at the manager level and, unlike end users, are not involved with the real requirements. They may not know the finer details of operation and user interface issues. User interface error was the major cause of the Three Mile Island nuclear disaster in the United States (Rouse & Morris, 1987). Thus, over-reliance on client input and a lack of user interface knowledge or usability awareness contribute to design failures (Diop et al., 2022). Designs developed with limited specifications or inadequate testing are more prone to failure. End users' inputs and their training are important aspects that designers need to incorporate (Hocraffer & Nam, 2017).

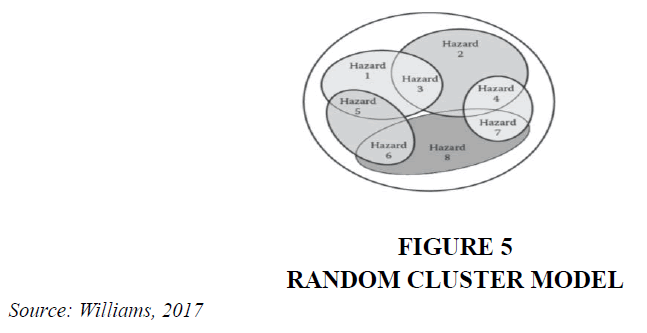

Any of the above aspects, or a combination of them, can lead to design failure of a worksystem. The ‘Random Cluster Model’ given by Day (2017) explains design error. Figure 5 describes how hazard elements can cluster with other hazard elements in a random manner to cause a succession of crisis points within a complex project. Each of these crisis points can contribute to the failure of a project, one building on another, but, unlike in the previous models, not in a linear sense. The Random Cluster model operates in three dimensions, with hazards linking up with other hazards through cross-over effects in time and space. Thus, this model explains design error situations in which a number of dissimilar, dysfunctional strands run through the design process and gather into clusters at the wrong time, leading ultimately to worksystem failure.
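The Random Cluster model is conceptual, and Day (2017) is not reproduced here as specifying a clustering rule. One hypothetical way to operationalize "hazards linking up in time and space" is single-linkage clustering: hazards within a chosen `radius` of each other in (time, x, y) coordinates are linked, chains of links form clusters, and clusters above a minimum size are treated as crisis points. The coordinates, radius, and size threshold below are all assumptions.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class Hazard:
    name: str
    t: float  # time within the project (hypothetical units)
    x: float  # spatial position (hypothetical units)
    y: float


def crisis_points(hazards: list[Hazard], radius: float, min_size: int = 2) -> list[set[str]]:
    """Single-linkage clustering of hazards in (t, x, y) space via union-find.

    Two hazards share a cluster if a chain of neighbours, each within `radius`
    of the next, connects them. Clusters with at least `min_size` hazards are
    reported as crisis points.
    """
    parent = list(range(len(hazards)))

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for i in range(len(hazards)):
        for j in range(i + 1, len(hazards)):
            a, b = hazards[i], hazards[j]
            if dist((a.t, a.x, a.y), (b.t, b.x, b.y)) <= radius:
                parent[find(i)] = find(j)

    clusters: dict[int, set[str]] = {}
    for i, h in enumerate(hazards):
        clusters.setdefault(find(i), set()).add(h.name)
    return [c for c in clusters.values() if len(c) >= min_size]


hazards = [
    Hazard("h1", 0.0, 0.0, 0.0),
    Hazard("h2", 1.0, 0.0, 0.0),
    Hazard("h3", 1.5, 1.0, 0.0),     # not near h1, but chained to it through h2
    Hazard("h4", 10.0, 10.0, 10.0),  # isolated hazard, not a crisis point
]
print([sorted(c) for c in crisis_points(hazards, radius=1.2)])  # [['h1', 'h2', 'h3']]
```

Single linkage was chosen because it captures the non-linear, chained build-up the model describes (h1 and h3 are far apart yet end up in one cluster); other clustering rules would be equally defensible readings of the model.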

Design errors can occur during the actual design stage as well as in the operational stage. Whenever end users have problems using a new design, the blame generally falls on lack of training rather than on an inadequate user interface. This model does not take into account the technological causes of worksystem failure.

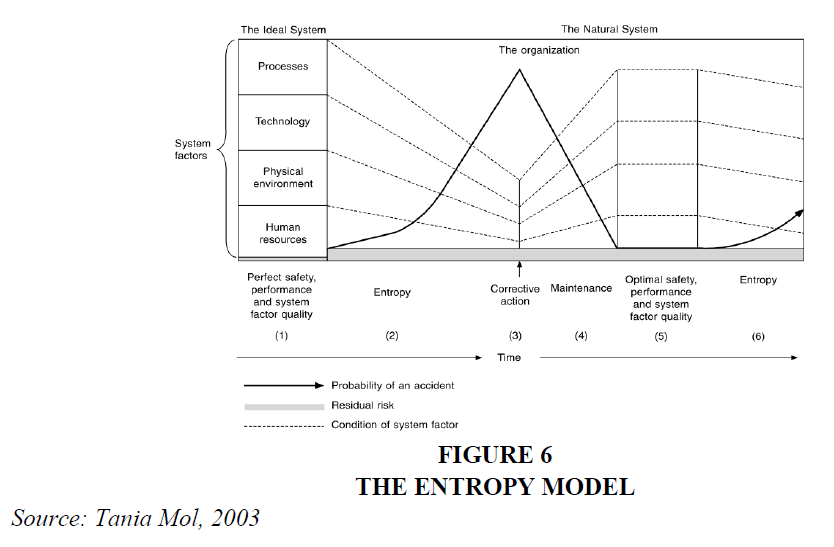

Most worksystem failure analysis models emphasize the role of human error, in terms of unsafe acts, the mental and physical condition of humans, or knowledge/skill, as a major contributing factor in accidents and failures (Hobbs & Williamson, 2003). Tania Mol's entropy model marked a paradigm shift in the concept of failure causation (Figure 6). The components of any worksystem, i.e., technology, process, human resources, environment etc., degrade over time, and the possibility of failure rises. The term ‘entropy’ means disorganization or degradation in the system. According to this model, any worksystem carries two types of risk: inherent or residual risk, which cannot be eliminated, and risk caused by the degradation of system components, known as entropy risk. Entropy risk is defined in terms of the degradation of system factors, namely process (work practices), physical environment (structural factors and location), technology (plant, machinery, and tools), and human resources (workforce). According to Mol, these factors, and hence the model, apply to all industries regardless of context, and the process also determines how these factors interact (Mol, 2003).

Figure 6 The Entropy Model

Source: Tania Mol, 2003

The gray zone in Figure 6 depicts the residual risk in the system, indicating that every system has an inherent potential for failure. The entropy risk of each system factor is indicated by a dotted line, and the overall entropy risk over time by the curved line. It was further stated that not every system has the same level of residual risk; it varies with the type of worksystem, e.g., a normal manufacturing plant will have lower residual risk than a nuclear plant. Entropy risk will also differ for each system factor and for the system as a whole, since the system factors may not all degrade at the same rate. The author therefore suggested calculating entropy risk by rating each system factor from 0 (low risk) to 10 (highest risk). Entropy risk is the product of process risk (p), technology risk (t), physical environment risk (pe), and human resources risk (h):

Entropy risk = p x t x h x pe
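The calculation above is a straightforward product of four ratings. A minimal sketch follows; the range check simply enforces the 0 to 10 rating scale described by Mol (2003), and the example ratings are hypothetical.

```python
def entropy_risk(p: float, t: float, h: float, pe: float) -> float:
    """Entropy risk = p x t x h x pe, with each factor rated 0 (low) to 10 (highest).

    p: process, t: technology, h: human resources, pe: physical environment.
    """
    ratings = {"p": p, "t": t, "h": h, "pe": pe}
    for name, value in ratings.items():
        if not 0 <= value <= 10:
            raise ValueError(f"rating {name}={value} is outside the 0-10 scale")
    return p * t * h * pe


# Hypothetical ratings for one worksystem at one point in time:
print(entropy_risk(p=2, t=3, h=4, pe=5))      # 120
print(entropy_risk(p=10, t=10, h=10, pe=10))  # 10000
```

Because the form is multiplicative, the score ranges from 0 to 10,000, and a rating of 0 on any single factor drives the whole entropy risk to zero regardless of the other three; this is a property of the formula as stated, worth keeping in mind when comparing scores across worksystems or over time.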

This model does not include ‘organization’ and ‘workspace’ as system factors, so risks associated with organizational aspects and with workspace layout, design etc. are not considered when calculating the entropy risk of a worksystem. Organizational policies play a significant role in the worksystem, as management especially affects human resources. Workplace layout and associated factors, especially in spatially dispersed workspaces, also contribute significantly to worksystem risk.

(b) Person as a Cause

Most worksystem designs followed a human-centric approach, so the focus of risk assessment and failure analysis was also human-centric (Dave & Khanzode, 2023). The literature presents several assessment techniques based on this school of thought. One of them is human error identification (HEI), a part of Human Reliability Assessment (HRA), which determines the impact of human error and error recovery on a worksystem. Another is probabilistic safety assessment (PSA), which determines the potential risk of a worksystem due to various factors, including human error. Error identification depends on the scope of analysis and has three major components: (a) external error mode (EEM), the external manifestation of an action leading to error; (b) performance shaping factors (PSF), which influence the likelihood of error (time pressure, training etc.); and (c) psychological error mechanism (PEM), the internal manifestation of behavior leading to error (cognitive failure, memory failure, habit patterns etc.). The human error reliability assessment model is based on three components: identification of the error, its consequences, and potential error recovery. These are quantified to determine the Human Reliability Assessment (HRA) and Error Reduction Analysis (ERA). Studies have also explained the types of human error: (i) execution errors, including slips, lapses, and unsafe acts; (ii) cognitive errors, including misunderstanding, diagnostic, and decision-making errors; (iii) errors of commission, comprising unwarranted actions by operators; and (iv) rule violations, attributed to a casual approach, negligence, and risk-taking behavior. Since most worksystems have technology- and software-based operations, human error identification was formulated as a software-based technique that covers the errors leading to worksystem failure.
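The elements listed above (EEM, PSF, PEM, the four error types, and the identification, consequence, and recovery components) suggest a simple record structure for an identified error. The following Python sketch is a hypothetical data model, not the schema of any HEI or HRA software; the field names and example entries are assumptions.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class ErrorType(Enum):
    EXECUTION = "execution error"       # slips, lapses, unsafe acts
    COGNITIVE = "cognitive error"       # misunderstanding, diagnosis, decision-making
    COMMISSION = "error of commission"  # unwarranted operator actions
    VIOLATION = "rule violation"        # casual approach, negligence, risk-taking


@dataclass
class IdentifiedError:
    task: str
    eem: str                 # external error mode: how the error shows outwardly
    pem: str                 # psychological error mechanism: internal cause
    error_type: ErrorType
    consequence: str         # effect of the error on the worksystem
    psfs: list[str] = field(default_factory=list)  # performance shaping factors
    recoverable: bool = False  # True if an error-recovery opportunity exists


def unrecovered_by_type(errors: list[IdentifiedError]) -> Counter:
    """Count identified errors with no recovery opportunity, grouped by type."""
    return Counter(e.error_type for e in errors if not e.recoverable)


# Hypothetical entries for illustration only.
errors = [
    IdentifiedError("check alarm", "action omitted", "memory failure",
                    ErrorType.EXECUTION, "alarm missed", ["time pressure"]),
    IdentifiedError("select procedure", "wrong procedure", "misdiagnosis",
                    ErrorType.COGNITIVE, "fault persists", ["training"], recoverable=True),
]
print(unrecovered_by_type(errors)[ErrorType.EXECUTION])  # 1
```

Keeping recovery as an explicit field lets an analyst separate errors that the worksystem can absorb from those that propagate, which is the distinction the identification, consequence, and recovery components are meant to capture.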

Researchers have developed various techniques for evaluating human error, but no single technique satisfies all the requirements of practitioners. The Human Error and Recovery Assessment System (HERA) developed by Kirwan (1992) is a prototype software package that establishes a relationship between ergonomics and human error identification. The package includes a skill- and rule-based error identification module. The HERA model has the following functions:

i. Scoping the error analysis and identifying critical tasks: the scope and depth of analysis are decided based on the hazard potential of errors, the novelty of the plant, the nature of operation (normal/emergency), etc.

ii. Task analysis: initial task analysis and hierarchical task analysis are undertaken to identify and quantify errors (Caplet, 2007).

iii. Error identification (skill-based or rule-based): this module has nine independent and overlapping checklists for error identification. These include mission analysis (i.e., the chances of failure irrespective of cause), goal and plan analysis related to the individual task, and operational analysis to determine the mode of failure, such as an error of omission or of sequence. Error identification is carried out together with analysis of performance shaping factors and psychological error mechanisms. The Human Error Identification in Systems Tool (HEIST; Kirwan, 1992) in HERA analyzes skill-, rule-, and knowledge-based errors. Human Error Hazard and Operability (HAZOP) analysis is not embedded in the software but is used in human error analysis (Figure 7).

Figure 7 HERA Framework for Skill and Rule Based Error Identification

Source: Kirwan, 1992

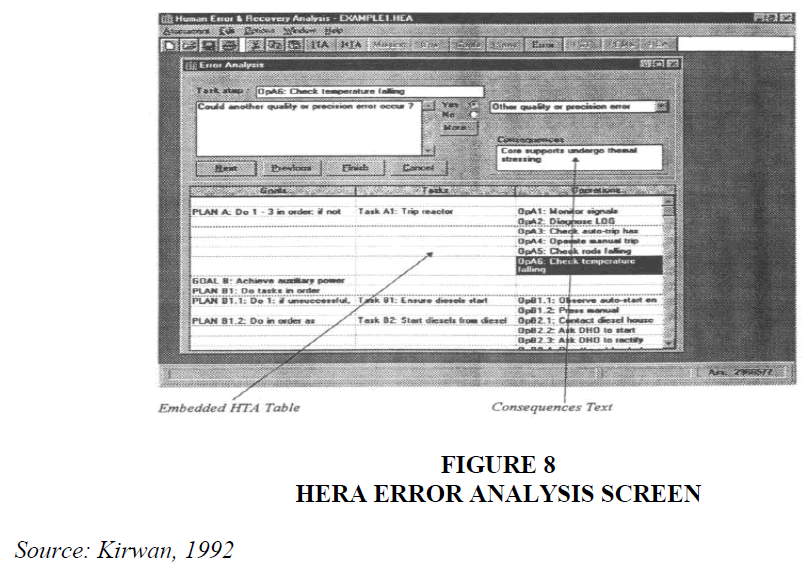

Error Identification: the Skill and Rule-Based Error Analysis module of HERA has been implemented in Visual Basic in a Windows environment. The framework offers more than one route to identifying errors (Figure 8). These divergent approaches aid error identification, which is fundamentally a creative process requiring both deductive and inductive thinking. Sometimes the same error may be identified twice; the assessor then needs to be careful and, on the second occurrence, should place more emphasis on understanding the error and its causes (Sagan, 2004).
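The idea of several overlapping checklists, with errors sometimes raised more than once, can be sketched as follows. The checklists here are hypothetical stand-ins (functions that return candidate errors for a task step); HERA's actual checklist contents and its Visual Basic implementation are not reproduced. The sketch records which checklists raised each error so that duplicates are kept once and flagged for closer attention to their causes.

```python
from collections import defaultdict
from typing import Callable

# A checklist takes a task step and returns the candidate errors it suggests.
Checklist = Callable[[str], set[str]]


def identify_errors(step: str, checklists: dict[str, Checklist]) -> dict[str, set[str]]:
    """Apply every checklist to one task step.

    Returns a mapping from each candidate error to the names of the
    checklists that raised it.
    """
    found: dict[str, set[str]] = defaultdict(set)
    for name, checklist in checklists.items():
        for error in checklist(step):
            found[error].add(name)
    return dict(found)


def raised_more_than_once(found: dict[str, set[str]]) -> set[str]:
    """Errors raised by more than one checklist: record them once and
    focus on understanding their causes."""
    return {error for error, sources in found.items() if len(sources) > 1}


# Hypothetical checklists; names echo HERA's analyses, contents are invented.
checklists: dict[str, Checklist] = {
    "mission analysis": lambda step: {f"{step}: not performed"},
    "operational analysis": lambda step: {f"{step}: not performed",
                                          f"{step}: performed out of sequence"},
}

found = identify_errors("open valve", checklists)
print(sorted(found))                 # ['open valve: not performed', 'open valve: performed out of sequence']
print(raised_more_than_once(found))  # {'open valve: not performed'}
```

Storing the set of raising checklists, rather than a simple list of errors, is what makes the "identified twice" case detectable without discarding information about where each error came from.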

Figure 8 HERA Error Analysis Screen

Source: Kirwan, 1992

(c) System-Person Interaction Cause

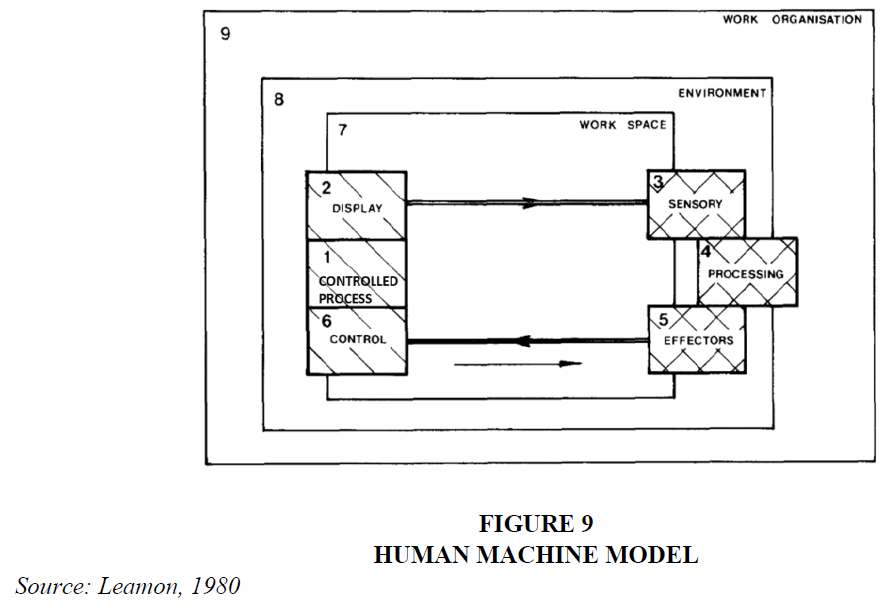

Leamon predicted the possibility of intrinsic interactions among elements within worksystem components. He proposed the Human-Machine model, which provides the most fundamental conceptual framework for studying worksystems and their inter-component interactions (Margulies & Zemanek, 1983). The model incorporates all nine components of the worksystem and clearly defines the boundary of each component and their overlaps. He defined ten interactions between the primary components; however, the interactions between human and machine were not defined, and the model lacks detailed insight into human-machine interaction and into the intrinsic interactions of the elements of the machine component in complex systems. Probably, at that time, technology and machines in industry were at a nascent stage of development, the role of the human was limited to physical needs, and tasks were relatively simple (Kleiner et al., 2015). Over the past six decades, the machine component of worksystems has undergone major changes, which correspondingly affect the human component, so the interactions between human and machine are important (Dave & Khanzode, 2023).

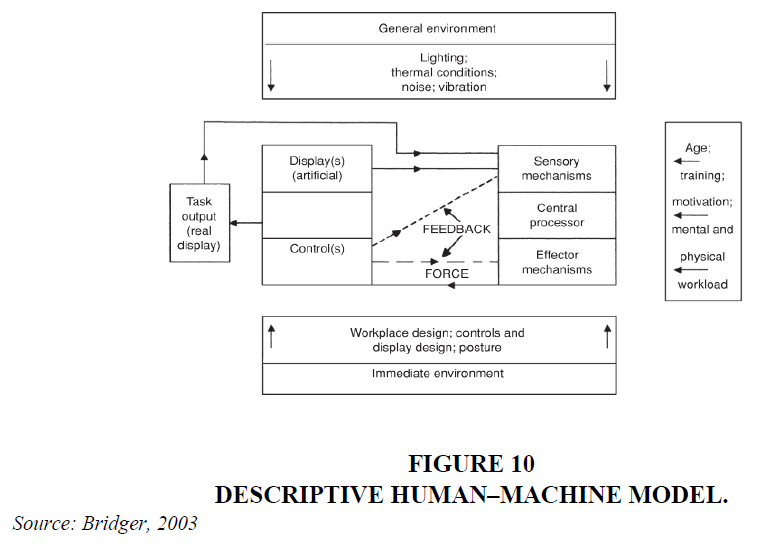

To improve worksystem performance, Bridger (2003) emphasised the interaction between people and machines and the factors affecting these interactions. This can be achieved by designing better interfaces that are compatible with the task, and by designing out the factors that adversely affect performance (Bridger, 2008).

A system is defined as a set of elements and the boundary around them. The human performs the task and the machine generates the output. Bridger (2008) noted that in a simple worksystem there are six possible directional interactions, four of which involve the human. He worked out the design issues associated with each interaction. He also noted that other components may interact with the machine component; for example, the machine may interact with the environment by generating noise, which may in turn affect the human (Figure 9).
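The count of six directional interactions, four of which involve the human, follows from simple combinatorics if the simple worksystem has three components. The sketch below assumes those components are human, machine and environment (consistent with the noise example) and treats each directional interaction as an ordered pair of distinct components; it illustrates the arithmetic only and is not Bridger’s own formulation.

```python
from itertools import permutations

# ASSUMPTION: the simple worksystem has three components; the noise example
# (machine -> environment -> human) involves all three.
COMPONENTS = ("human", "machine", "environment")

# A directional interaction is an ordered pair (source, target) of distinct components.
interactions = list(permutations(COMPONENTS, 2))
involving_human = [pair for pair in interactions if "human" in pair]

print(len(interactions))     # 6
print(len(involving_human))  # 4

# The noise example is a chain of two directional interactions.
noise_path = [("machine", "environment"), ("environment", "human")]
assert all(step in interactions for step in noise_path)
```

With n components the same reasoning gives n(n - 1) directional interactions, of which 2(n - 1) involve any single component.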

The model divides the environment into two parts: the general environment (light, noise, vibration, thermal conditions, etc.) and the immediate environment (workplace design, posture, controls, etc.). Workspace components are included in the immediate environment. The model also includes personal and psychological factors (age, motivation, training and mental workload) that directly and indirectly affect human performance in the worksystem. The feedback loop in the model illustrates the interaction of components in the worksystem (Figure 10).

Comparison Criteria for Worksystem Models

With technological advancement and industrialization, the ‘worksystem’ and its connotations became a central focus for many researchers in human factors engineering. As technologies grew, worksystem failures also increased, and many studies therefore focused on failure analysis, failure causation and the associated theories. The literature presents a few theories or models of worksystem failure causation. Broadly, these can be classified under three schools of thought: (i) person as a cause, (ii) system as a cause and (iii) person–system interaction as a cause.

There are various methods of failure analysis. Benner (1985) compared many accident investigation methods used by US agencies and listed the essential characteristics of the best-suited methods: Realistic, Definitive, Satisfying, Comprehensive, Disciplining, Consistent, Direct, Functional, Non-causal and Understandable. This comparison addresses the quality of the outcomes of investigation methods. Only two of these attributes, functional and non-causal, relate to the nature of accident analysis models. An analysis process must be ‘functional’ to make the analysis efficient: it is important to know which events led to the accident and which were unrelated. It also needs to be ‘non-causal’, meaning that it should produce an objective description of the accident process; attributions of cause or fault can be assessed only after the accident process is completely understood. Likewise, Hollnagel and Speziali (2008) assessed different investigation methods according to the qualities of each method or model in relation to theoretical norms and efficacy. Their quality criteria were analytic capability, predictive capability, technical basis, relation to existing taxonomies, practicality and cost-effectiveness. Every method of analysis is based on some unique analytical concept, and different worksystems use different analytical methods or models, which may be specific to their respective sectors.

However, we argue that worksystem analysis needs a holistic approach that addresses not only functional and qualitative characteristics but also structural knowledge (Caple, 2007; Wilson, 2000). A worksystem is defined as a ‘System comprising one or more workers and work equipment acting together to perform the system function, in the workspace, in the work environment, under the conditions imposed by the work tasks’ (ISO 6385:2016(E), 2016). Based on this definition from the International Organization for Standardization, five subsystems (components) form the necessary skeleton of a worksystem: ‘Human’, ‘Machine’, ‘Environment’, ‘Workspace’ and ‘Organization’. The machine component is equivalent to the work equipment stated in the definition (Table 1). The phrase ‘acting together’ can be captured by the term ‘interactions’ used by Perrow. In this study, we analyse and compare various worksystem models in terms of how they address the essential worksystem components and interactions, along with their contributions and limitations. The critical appraisal points are presented in Table 2.

Table 1 Comparison of Worksystem Failure Models

| Essential Component | Kirwan (1992) | Reason (2000) | Williams (2017) | Perrow (1984) | Tania Mol (2003) | Leamon (1980) | Bridger (2002) |

| Model | Human Error & Recovery Assessment Model | Swiss Cheese Model | Random Cluster Model | Interaction & Coupling Model | Entropy Model | Human-Machine Model | Descriptive Human-Machine Model |

| Human | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Machine | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | |

| Workspace | ✓ | ✓ | |||||

| Environment | ✓ | ✓ | ✓ | ||||

| Work Organization | ✓ | ✓ | ✓ | ✓ | ✓ | ||

| Worksystem Interactions | ✓ | ✓ | ✓ | ||||

Table 2 Critical Appraisal of Worksystem Models (paradigms: Person as Cause; System as Cause; System–Person Interaction as Cause)

| Models | Human Error & Recovery Assessment Model, Kirwan (1998) | Swiss Cheese Model, Reason (1990) | Random Cluster Model, Day (2017) | Interaction & Coupling Model, Perrow (1984) | Entropy Model, Tania Mol (2003) | Human-Machine Model, Leamon (1980) | Descriptive Human-Machine Model, Bridger (2003) |
|---|---|---|---|---|---|---|---|
| Human | • Identification of human error by various techniques and assessment by a software-based model | • Analyses the causes of human error | • Human errors have their genesis in design or system errors | - | • Human errors are slips, lapses and deviations from protocols due to a casual approach over a period | • Tactile feedback from control to effector • Sensory analysis of the process through the display | • Parameters affecting human performance are added: age, motivation, training, mental and physical load |
| Machine | - | • Efficacy of redundancies against failure | • Design-related errors in the system | • Complexity and coupling increase with advanced technology | • Vintage, exploitation and usage over a period of time adversely affect the machine component | • Process automation would need a qualified workforce • Need for sophisticated displays through computer-controlled VDUs • Different types of controls, including voice control | • Real and artificial displays interact with the senses • Feedback from control to senses and effector identified as internal interaction |
| Workspace | - | - | - | - | - | • Workspace design and layout governed by the anthropometry of the working population | • Control and display layout, posture analysis • Anthropometric requirements of the workspace |
| Environment | - | - | - | - | • Environmental conditions degrade with time and adversely affect human and machine | • Auditory, visual, thermal and vibration conditions affect the human operator | • Auditory, visual, thermal and vibration conditions affect the human operator |
| Work Organization | • Time pressure, stress, training and team organization assessed as performance factors | • Administrative controls, SOPs and policies to prevent failure | - | • Application of high-reliability strategies may not be sufficient to prevent failures | - | • Technological aspects of vigilance and pacing of work; selection, training and teamwork | • Change of human performance with mental load, training and motivation |
| Additional Contribution | • Integrated software provides rapid analysis • Guidelines provided to the assessor for selection of technique | - | • Clusters of hazards interact with each other in three dimensions, resulting in failure • Interactions lead to failure | • Catastrophic potential of systems assessed | • Every worksystem has an inherent risk, known as residual risk, which cannot be completely eliminated | • Interactions of components identified | • Six directional interactions identified |
| Limitations | • The same error may be identified twice due to the multi-technique approach • Errors due to environment and workspace are severely neglected | • Error factors are more organizational and linear in fashion • Misses errors due to the environment and the dimension of technological advancement | • Limited expression of the interaction of components and other common-mode failures | • Environmental and workspace design not accounted for in analysing catastrophic potential | • Risks associated with organizational aspects and workspace layout, design, etc. are not included in entropy risk | • Effect of environmental factors not worked out for the machine • Lack of human-machine interaction • Static model | • Inter-component interactions of the worksystem are not discussed in detail • Static model |

Of all the analytical worksystem models, only Leamon’s Human-Machine worksystem model (1980) and Bridger’s Descriptive Human-Machine worksystem model (2003) list all five worksystem components and some inter-component interactions. Bridger’s model is a modification of Leamon’s model; although it discusses features of the ‘organisational’ component, it does not illustrate them well. In addition, the ‘workspace’ component, though explained as the ‘immediate environment’, is placed away from the other components. Leamon’s Human-Machine worksystem model, on the other hand, illustrates all five components with well-defined boundaries and connections where present, and presents ten inter-component interactions. With these features, we find it the most appropriate and fundamental worksystem model for technical sectors.
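The component-coverage comparison can be reproduced mechanically. The sketch below encodes, for each model, the components whose Table 2 cell is not marked ‘-’, and then lists the models that address all five ISO 6385-derived components. The encoding is our reading of Table 2, and the helper names are illustrative only.

```python
# The five essential worksystem components derived from the ISO 6385 definition.
FIVE_COMPONENTS = frozenset(
    {"Human", "Machine", "Workspace", "Environment", "Work Organization"}
)

# Components each model addresses, read from the non-'-' cells of Table 2.
coverage = {
    "Kirwan (1998)": {"Human", "Work Organization"},
    "Reason (1990)": {"Human", "Machine", "Work Organization"},
    "Day (2017)": {"Human", "Machine"},
    "Perrow (1984)": {"Machine", "Work Organization"},
    "Mol (2003)": {"Human", "Machine", "Environment"},
    "Leamon (1980)": set(FIVE_COMPONENTS),
    "Bridger (2003)": set(FIVE_COMPONENTS),
}


def models_covering_all(cov, required=FIVE_COMPONENTS):
    """Return the models whose covered components include every required one."""
    return [model for model, comps in cov.items() if required <= comps]


def missing_components(cov, required=FIVE_COMPONENTS):
    """Map each model to the sorted list of required components it omits."""
    return {model: sorted(required - comps) for model, comps in cov.items()}


print(models_covering_all(coverage))  # ['Leamon (1980)', 'Bridger (2003)']
```

Coverage alone does not separate the two models; the distinction drawn above rests on how clearly each model depicts component boundaries and interactions.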

Validation of the Fundamental Worksystem Model through Case Illustration

Although Leamon’s Human-Machine worksystem model was formulated in 1980, we did not find any accident or failure analysis methods or case analyses in the literature based on it. We therefore set out to validate that Leamon’s model can be used to analyse a failure case. This is shown through a task analysis of the Lion Air crash (Komite Nasional Keselamatan Transportasi Republic of Indonesia, 2019), presented below.

Brief of the Case

On 29 October 2018, Lion Air Flight 610 (Boeing 737 MAX 8) crashed into the Java Sea 11 minutes after take-off. Just before the crash, the crew had requested to return to Jakarta; however, they never issued a mayday distress call. Flight recorder data revealed that the airspeed indicator had malfunctioned during the aircraft’s previous four flights, and identified malfunction of the angle of attack (AOA) sensor as the main cause of the crash. The faulty AOA data caused the aircraft’s computers to erroneously detect a stall in mid-flight. The aircraft had an automated anti-stall feature which, in such a case, takes control and sharply pushes the nose down without the pilot’s instruction. As a result, the pilots could not control the aircraft. The excessive nose-down attitude led to significant altitude loss and impact with the sea.

The information and accident details were obtained from the official investigation reports, which are available in the public domain. The events that led to the accident, and their possible inter-component interactions, were mapped onto Leamon’s worksystem model; they are detailed in Table 3. In response to this accident, Boeing issued an advisory bulletin to airlines stating that, in the case of erroneous input from the AOA sensor, pilots need to follow existing flight crew procedures. Regulatory authorities mandated that airlines include these instructions in their flight manuals, but this was enforced only after the crash (Table 3).

Table 3 Task Analysis and Corresponding Worksystem Interactions

| Sr No | Events | Interactions |
|---|---|---|
| 1 | Previous four flights had repeated defects with the AOA sensor and pitot tube | Controlled Process – Environment |
| 2 | Airspeed indicators on the pilot and copilot panels displayed a difference of 200 m/s from the previous flight | Display – Senses |
| 3 | Aircraft was declared fit despite the recurring defect, without a detailed diagnosis | Organization – Processing |
| 4 | Malfunction of the AOA sensor (last flight) and incorrect display to the panels was noticed | Controlled Process – Display |
| 5 | Flight computers erroneously detected a mid-flight stall | Not Explainable |
| 6 | The automated anti-stall system abruptly pushed the nose down to regain speed | Not Explainable |
| 7 | The pilot could not control the aircraft manually against the AOA malfunction and the automated system’s actions | Effector – Control |
| 8 | Lack of pilot training for taking manual control in such circumstances, and absence of such instructions in pilot operating manuals | Organization – Processing |

Interactions that lead to worksystem failure are termed ‘negative interactions’. While mapping the negative interactions of the Lion Air 610 crash, we realised that some of them could not be explained by the Human-Machine model. A new interaction, ‘Controlled Process – Environment’, emerged that is not given in Leamon’s Human-Machine model (1980). We infer that this may be because Leamon’s model was drafted in 1980, when worksystem advancements were at a nascent stage. Over the last four decades, worksystems have evolved to become complex and tightly coupled. Further investigation and research are therefore needed in the light of complex worksystems.
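The mapping in Table 3 can be expressed as a small classification step. In this hypothetical sketch, each event carries the interaction it was mapped to, or `None` where the model offered no explanation. The set `LEAMON_PAIRS` is an assumption: it contains only the four pairs that Table 3 maps without difficulty, not Leamon’s full list of ten interactions, and pairs are treated as undirected for simplicity. Any mapped pair outside the set is reported as a new interaction.

```python
from typing import Optional

# Events from Table 3 (Lion Air 610), each mapped to an interaction between
# two worksystem elements, or None where the model offered no mapping.
EVENTS: list[tuple[int, str, Optional[tuple[str, str]]]] = [
    (1, "Repeated AOA sensor and pitot tube defects", ("Controlled Process", "Environment")),
    (2, "Airspeed indicators disagreed", ("Display", "Senses")),
    (3, "Aircraft declared fit without detailed diagnosis", ("Organization", "Processing")),
    (4, "AOA sensor malfunction and incorrect display", ("Controlled Process", "Display")),
    (5, "Computers erroneously detected a stall", None),
    (6, "Automated system pushed the nose down", None),
    (7, "Pilot could not control the aircraft manually", ("Effector", "Control")),
    (8, "Lack of training and missing manual instructions", ("Organization", "Processing")),
]

# ASSUMPTION: partial, illustrative set of interactions taken to exist in
# Leamon's model (only the pairs Table 3 maps successfully), treated as undirected.
LEAMON_PAIRS = {
    frozenset(pair)
    for pair in [
        ("Display", "Senses"),
        ("Organization", "Processing"),
        ("Controlled Process", "Display"),
        ("Effector", "Control"),
    ]
}


def classify(events):
    """Split event numbers into explained, new-interaction and unexplainable groups."""
    result = {"explained": [], "new_interaction": [], "not_explainable": []}
    for number, _description, pair in events:
        if pair is None:
            result["not_explainable"].append(number)
        elif frozenset(pair) in LEAMON_PAIRS:
            result["explained"].append(number)
        else:
            result["new_interaction"].append(number)
    return result


if __name__ == "__main__":
    print(classify(EVENTS))
    # {'explained': [2, 3, 4, 7, 8], 'new_interaction': [1], 'not_explainable': [5, 6]}
```

Replacing the partial set with the full list of interactions from Leamon (1980) would let the same check be applied to other failure cases.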

Epilogue

Accident causation models (or theories) form the theoretical foundation of safety science by providing a theoretical framework for accident analysis and prevention (DeCamp & Herskovitz, 2015). A theory is conceptualized as ‘a unified system of propositions made with the aim of achieving some form of understanding that provides an explanatory power and predictive ability’ (Grant et al., 2018). It is also explained as ‘an analytic structure or system that attempts to explain a particular set of empirical phenomena.’ Theoretical models are derived from theories, and they may or may not fully explain the related phenomena. In safety science, the terms ‘theory’ and ‘model’ are used as synonyms (Khanzode et al., 2012).

The literature presents various worksystem models, theories and frameworks for assessing worksystems in various industrial contexts. Each is based on a specific paradigm for assessing worksystem failures, and they provide different structural frameworks for studying worksystem functioning, error prediction and failure investigation. Although these approaches are accepted in the field and have a footprint in the literature, this does not mean that they apply to all worksystems irrespective of context, time span and mode of failure. For failure analysis, models must provide principles that can explain how failures happen. Accident models denote sets of axioms, assumptions, beliefs and facts about accidents that help in understanding and explaining events.

The purpose of this review was to find a model concept that can be applied consistently and uniformly to most worksystems. After appraising many failure analysis models, we conclude that Leamon’s Human-Machine worksystem model is the most appropriate model for explaining worksystem structure and linkages, and hence it can be considered a fundamental model. Failure causation can be explained by one negative interaction, or a series of them, occurring in the worksystem. Even if a failure is caused purely by human error or a design fault, that cause will still produce some unwanted and unwarranted interaction that leads to failure. We carried out root cause analyses of a few classic failure cases from various sectors (one of which is presented in this paper for illustration) and found that, with some exceptions, the negative interactions responsible for the failures could be explained with Leamon’s Human-Machine worksystem model. The few interactions that could not be explained probably reflect the fact that these worksystems were highly complex and tightly coupled. In complex worksystems, failures today defy simple cause-effect explanations. More elaborate approaches, with better models and more powerful methods, are probably needed; these can form the objectives of current and future research.

References

Alter, S. (2018). System interaction theory: Describing interactions between work systems. Communications of the Association for Information Systems, 42(1), 9.


Besnard, D., & Baxter, G. (2003). Human compensations for undependable systems. School of Computing Science

Brewer, J.D., & Hsiang, S.M. (2002). The ‘ergonomics paradigm’: Foundations, challenges and future directions. Theoretical Issues in Ergonomics Science, 3(3), 285-305.


Bridger, R. (2008). Introduction to ergonomics. CRC Press.

Caple, D.C. (2007). Ergonomics-future directions. Journal of Human Ergology, 36(2), 31-36.


Dave, R. & Khanzode, V. (2023). Mapping of human-centric worksystem interactions in industrial engineering, 27, 3.

Day, R. (2017). Design Error: A Human Factors Approach, I., CRC Press, Boca Raton, FL.

DeCamp, W., & Herskovitz, K. (2015). The theories of accident causation. In Security supervision and management (pp. 71-78). Butterworth-Heinemann.


Dhillon, B. S., & Liu, Y. (2006). Human error in maintenance: a review. Journal of quality in maintenance engineering, 12(1), 21-36.


Diop, I., Abdul-Nour, G. G., & Komljenovic, D. (2022). A high-level risk management framework as part of an overall asset management process for the assessment of industry 4.0 and its corollary industry 5.0 related new emerging technological risks in socio-technical systems. American Journal of Industrial and Business Management, 12(7), 1286-1339.

Grant, E., Salmon, P.M., Stevens, N.J., Goode, N., & Read, G.J. (2018). Back to the future: What do accident causation models tell us about accident prediction?. Safety Science, 104, 99-109.


Hendrick, H.W. (2008). Applying ergonomics to systems: Some documented “lessons learned”. Applied ergonomics, 39(4), 418-426.


Hendrick, H.W., & Kleiner, B.M. (2002). Macroergonomics: Theory, methods, and applications. Lawrence Erlbaum Associates Publishers.

Hitt, J.M. (1998). Review of the publishing trends in the proceedings of the human factors and ergonomics society. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, 42 (10), 679-681.

Hobbs, A., & Williamson, A. (2002). Unsafe acts and unsafe outcomes in aircraft maintenance. Ergonomics, 45(12), 866-882.


Hobbs, A., & Williamson, A. (2003). Associations between errors and contributing factors in aircraft maintenance. Human factors, 45(2), 186-201.

Hocraffer, A., & Nam, C.S. (2017). A meta-analysis of human-system interfaces in unmanned aerial vehicle (UAV) swarm management. Applied ergonomics, 58, 66-80.


Hollnagel, E., & Speziali, J. (2008). Study on developments in accident investigation methods: a survey of the “state-of-the-art”.

Ji, C., & Zhang, H. (2012). Accident investigation and root cause analysis method. In 2012 International Conference on Quality, Reliability, Risk, Maintenance, and Safety Engineering, 297-302. IEEE.


Khanzode, V. V., Maiti, J., & Ray, P. K. (2012). Occupational injury and accident research: A comprehensive review. Safety science, 50(5), 1355-1367.


Kirwan, B. (1992). Human error identification in human reliability assessment. Part 2: detailed comparison of techniques. Applied ergonomics, 23(6), 371-381.

Kleiner, B.M., Hettinger, L.J., DeJoy, D.M., Huang, Y.H., & Love, P.E. (2015). Sociotechnical attributes of safe and unsafe work systems. Ergonomics, 58(4), 635-649.

Koeppen, N. A. (2012). The influence of automation on aviation accident and fatality rates: 2000-2010.

Komite Nasional Keselamatan Transportasi Republic of Indonesia. (2019). Aircraft Accident Investigation Report - KNKT 18.10.35.04. Final Report- Aircraft Accident Investigation Report, 8, 1–230.

Margulies, F., & Zemanek, H. (1983). Man's role in man-machine systems. Automatica, 19(6), 677-683.


Martin, T., Kivinen, J., Rijnsdorp, J.E., Rodd, M.G., & Rouse, W.B. (1990). Appropriate automation–integrating technical, human, organizational, economic, and cultural factors. IFAC Proceedings Volumes, 23(8), 1-19.


Marynissen, H., & Ladkin, D. (2012). The relationship between risk communication and risk perception in complex interactive and tightly coupled organisations: a systematic review. In The Second International Conference on Engaged Management Scholarship.

Mehrdad, R., Bolouri, A., & Shakibmanesh, A. R. (2013). Analysis of accidents in nine Iranian gas refineries: 2007-2011. International Journal of Occupational & Environmental Medicine, 4(4).

Mol, T. (2003). Productive safety management. Routledge.

Parasuraman, R. (2000). Designing automation for human use: empirical studies and quantitative models. Ergonomics, 43(7), 931-951.


Park, J., Seager, T. P., Rao, P. S. C., Convertino, M., & Linkov, I. (2013). Integrating risk and resilience approaches to catastrophe management in engineering systems. Risk analysis, 33(3), 356-367.

Qureshi, Z. H. (2008). A review of accident modelling approaches for complex critical sociotechnical systems.

Rijpma, J.A. (1997). Complexity, tight–coupling and reliability: Connecting normal accidents theory and high reliability theory. Journal of contingencies and crisis management, 5(1), 15-23.

Roli, D., & Khanzode, V. (2023). Interpretive Structural Modelling of Interactions in Human Machine Worksystem. Academy of Marketing Studies Journal, 27(S4).

Rouse, W.B., & Morris, N.M. (1987). Conceptual design of a human error tolerant interface for complex engineering systems. Automatica, 23(2), 231-235.

Sagan, S. D. (2004). Learning from normal accidents. Organization & environment, 17(1), 15-19.

Shrivastava, S., Sonpar, K., & Pazzaglia, F. (2009). Normal accident theory versus high reliability theory: a resolution and call for an open systems view of accidents. Human relations, 62(9), 1357-1390.

Wieringa, P.A., & Stassen, H.G. (1999). Human-machine systems. Transactions of the Institute of Measurement and Control, 21(4-5), 139-150.

Wilson, J. R. (2000). Fundamentals of ergonomics in theory and practice. Applied ergonomics, 31(6), 557-567.

Yin, Y.H., Nee, A.Y., Ong, S.K., Zhu, J.Y., Gu, P.H., & Chen, L.J. (2015). Automating design with intelligent human–machine integration. CIRP Annals, 64(2), 655-677.


Received: 03-Mar-2023, Manuscript No. JMIDS-23-13713; Editor assigned: 06-Mar-2023, Pre QC No. JMIDS-23-13713(PQ); Reviewed: 22-Mar-2023, QC No. JMIDS-23-13713; Revised: 17-June-2023, Manuscript No. JMIDS-23-13713(R); Published: 26-June-2023