Research Article: 2021 Vol: 24 Issue: 6

Development of a face-mask detection software using artificial intelligence (AI) in python for Covid-19 protection

Ahmed Imran Kabir, United International University

Sriman Mitra, United International University

Jakowan, United International University

Soumya Suhreed Das, Stamford University

Citation Information: Kabir, A. I., Mitra, S., Jakowan, & Das, S. S. (2021). Development of a face-mask detection software using artificial intelligence (AI) in python for Covid-19 protection. Journal of Management Information and Decision Sciences, 24(6), 1-15.

Abstract

Infectious diseases such as Covid-19 spread through the air, which has become a serious issue and has driven an increase in facemask usage. However, in densely populated countries it is very hard for law enforcement organizations to monitor how many people are wearing masks in public and how many are not. For this reason, a facemask detection model can be developed for security cameras in public places to monitor people. In this research, the researchers developed a prototype facemask detector using Python and artificial intelligence. Since machines cannot understand human language, developers use programming languages to train them. Here, Python and its packages were used to train the models, and two separate Python files were developed: the first builds a mask detector, and the second combines the mask detector with a face detector to detect facemasks in real time. Data was collected, analyzed and visualized with Python, the model was trained using a ConvNet (Convolutional Neural Network), and the final system takes raw input and detects whether a mask is present on a human face.

Keywords

Artificial intelligence; Machine learning; Python programming language; Covid-19.

Introduction

Science has established that everyday tasks can be done effectively, and often much faster, with the use of artificial intelligence (AI). A recent study has established that AI can be used to detect the presence of face masks during the Covid-19 pandemic. Since wearing a mask is of utmost importance for curbing the rate of infection by the SARS-CoV-2 virus, the outcome of this study is expected to have a positive impact on the global movement to reinforce mask wearing in public. This research is based on AI, drawing on machine learning and deep learning methods. To conduct the research, the machine needed to be trained to use its own intelligence. However, since machines cannot understand human languages and emotions, the researchers needed to use a programming language. Here, the Python programming language was chosen for this research.

The main objectives of the study are:

1. To make a facemask detector prototype.

2. To monitor whether people in public places are following the prevention protocol.

3. To turn facemask detection from a manual process into an automatic one.

4. To lower costs and human effort.

5. To maximize the effectiveness of the process.

6. To develop knowledge and experience in machine learning and artificial intelligence.

Review of Literature

A large number of previous studies available online discuss artificial intelligence and related fields. Most of them cover AI-related topics such as deep learning, machine learning, CNN, ANN, MobileNet, programming languages and so on. The importance of mask usage has also been emphasized by entrepreneurial universities during Covid-19, according to Salamzadeh and Dana (2020) and others, who conducted qualitative research by interviewing twenty-five experts from different countries in the Middle East, including Iran, Turkey, Iraq and the United Arab Emirates; the respondents were engaged in five online focus group sessions and the findings were coded. Other researchers, such as Altınbaş et al. (2021), investigated whether patients with COVID-19 are at risk of cardiovascular events, while Ghajarzadeh et al. (2020) reported on COVID-19 infection in a patient with multiple sclerosis. The disruption of various startup businesses due to COVID-19 was discussed by Salamzadeh and Dana (2020). In this research, the factors discussed in the literature that relate to the present study are defined.

Python Programming Language

Sanner (1999) described Python as an interpreted, object-oriented and interactive language that is simple yet powerful and general purpose. He also noted that Python provides various high-level data types, although other kinds of objects are available in Python as well. In addition, Python has various kinds of statements that are simple in nature (van Rossum & de Boer, 1991).

Artificial Intelligence

Researchers are continually trying to make machines think for themselves with the help of AI. AI is used in robotics, which is one of its sub-areas, and it can be used in medical fields as well. Charniak (1985) defined AI as the study of cognitive faculties using computational models. In other words, AI aims to give a machine intelligence so that it can act like a human and solve problems on its own.

Machine Learning

Machine learning is a branch of AI that is commonly chosen for computer vision, robot control, speech and face recognition, and so on. Many AI developers find that training a system by showing it examples is easier than programming it manually. Machine learning covers a broad range of topics within computer science (Jordan & Mitchell, 2015).

Deep Learning

Deep learning algorithms are a subset of machine learning algorithms. Computer vision, transfer learning, natural language processing and similar areas are applications of deep learning (Guo et al., 2016). Deep learning methods overcame limitations of conventional machine learning techniques. They have brought advances in solving high-level problems, discovering intricate structures in high-dimensional data, image and speech recognition, and more (LeCun et al., 2015). Deep learning can be successfully applied to analyze images and recognize targets. In welding defect recognition, for example, the non-uniform shape, position and size of the defects affect the result, and analyzing and evaluating the acquired defect images manually was previously a complicated task (Pan et al., 2020).

Face Detection

To develop a facemask detector, two individual detectors are necessary. First a mask detector model is developed, and then a face detector model. Both are then combined in a separate file, which finally yields the facemask detection prototype. Faces share the same spatial configuration yet differ in texture and have large non-rigid components, which makes face detection a difficult task, so the machine must be trained on a large number of examples. Face detection also has potential applications in human-computer interfaces and surveillance systems (Sung & Poggio, 1998).

Convolutional Neural Networks

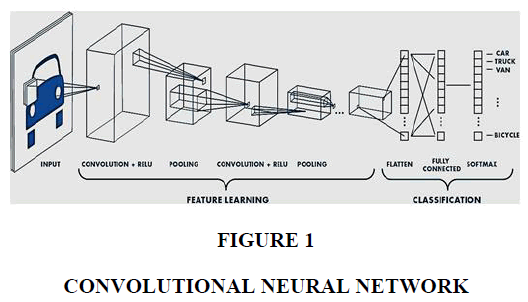

A CNN, or ConvNet, is a class of deep neural network used for analyzing visual imagery, as shown in Figure 1.

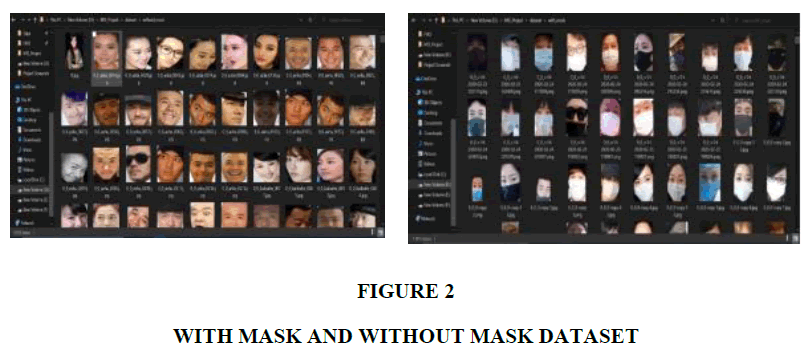

A CNN is a specialized form of ANN. CNNs are analogous to traditional ANNs in that they are comprised of neurons that self-optimize through learning (O'Shea & Nash, 2015). The convolutional neural network (CNN) is a type of deep neural network (DNN); in fact, it is one of the most popular DNNs. Pooling and non-linearity layers have no parameters, whereas convolutional and fully connected layers do (Albawi et al., 2017). The datasets were collected from the GitHub account of ‘Balaji Srinivas’, which is acknowledged in the references (Srinivas, 2020). The folder named ‘with_mask’ contains 1915 images, and the ‘without_mask’ folder contains 1918 images.
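The remark above, that only convolutional and fully connected layers carry parameters, can be made concrete with a short counting sketch. The helper names and the layer sizes used below are illustrative assumptions, not values taken from the study.

```python
def conv2d_params(kernel_h, kernel_w, in_channels, out_channels, bias=True):
    """Trainable parameters of a standard 2-D convolution layer."""
    weights = kernel_h * kernel_w * in_channels * out_channels
    return weights + (out_channels if bias else 0)


def dense_params(in_features, out_features, bias=True):
    """Trainable parameters of a fully connected (dense) layer."""
    weights = in_features * out_features
    return weights + (out_features if bias else 0)


def pooling_params():
    """Pooling and activation layers only transform values; nothing is learned."""
    return 0


# Illustrative sizes (assumptions): 3x3 kernels over an RGB image with 32 filters,
# then dense layers of 1280 -> 128 and 128 -> 2 units.
print(conv2d_params(3, 3, 3, 32))  # 896
print(dense_params(1280, 128))     # 163968
print(dense_params(128, 2))        # 258
print(pooling_params())            # 0
```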

Research Methods

This research was completed with the help of machine learning, specifically deep learning methods. A convolutional neural network was used with a slight change.

Data Analysis Plan

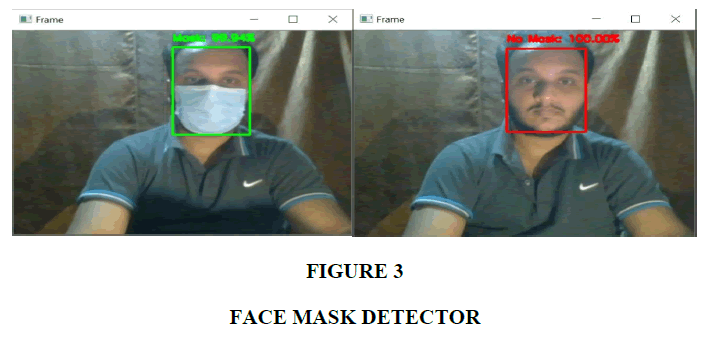

As previously mentioned, the dataset contains images divided into two sub-folders. Using the data processed in Python, the machine was first trained and a mask detector model was developed. A face detector was obtained online (Srinivas, 2020). After that, the two detectors were combined to develop the facemask detector (Figures 2 & 3). Once development was complete, the detector was run in real time using a laptop camera.

Research Analysis and Findings

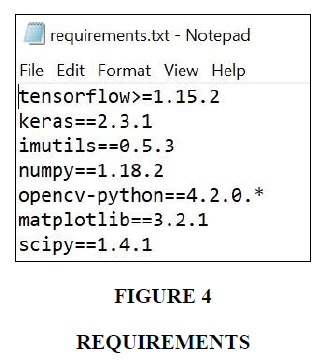

To develop the model and use it with the real-time camera, the dependencies (Python packages) shown in Figure 4 needed to be installed first.

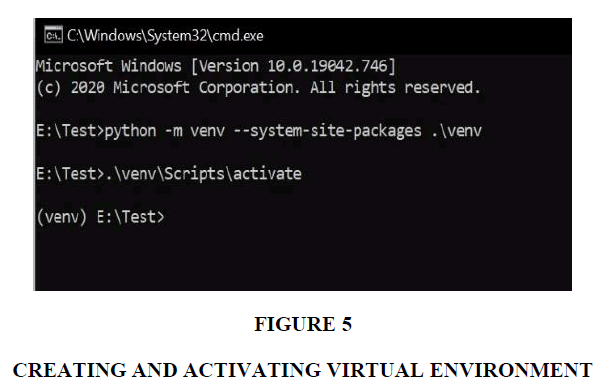

There are two ways to install these packages. For installation, a virtual environment needs to be created and activated in the working folder, as shown in Figure 5.

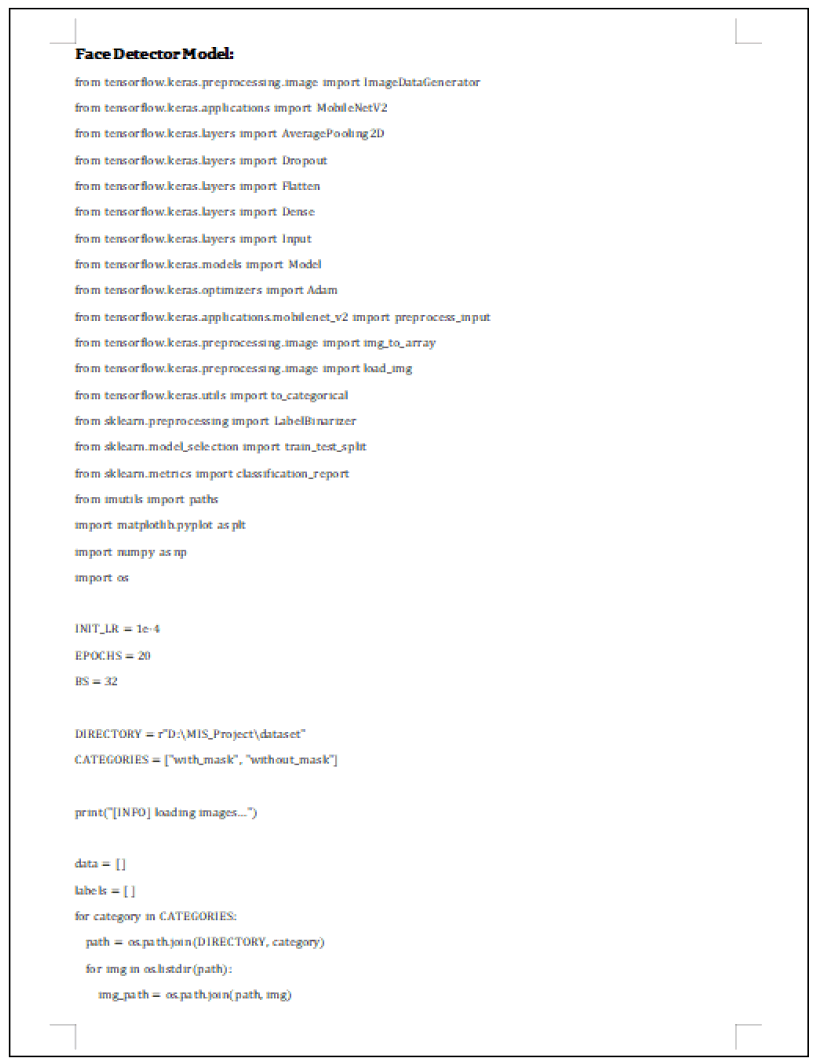

Mask Detector Model

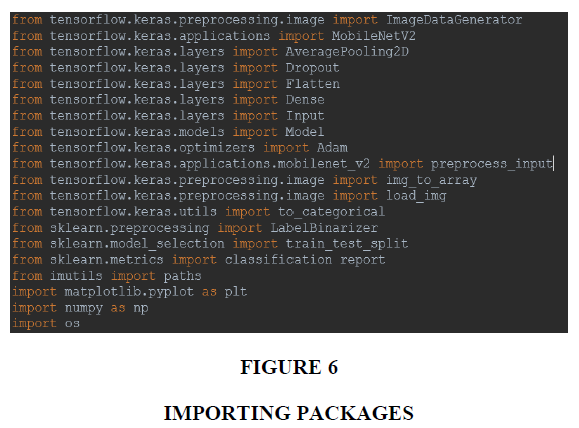

First, all the necessary packages were imported into the Python file. The packages needed are shown in Figure 6 and Figure 7.

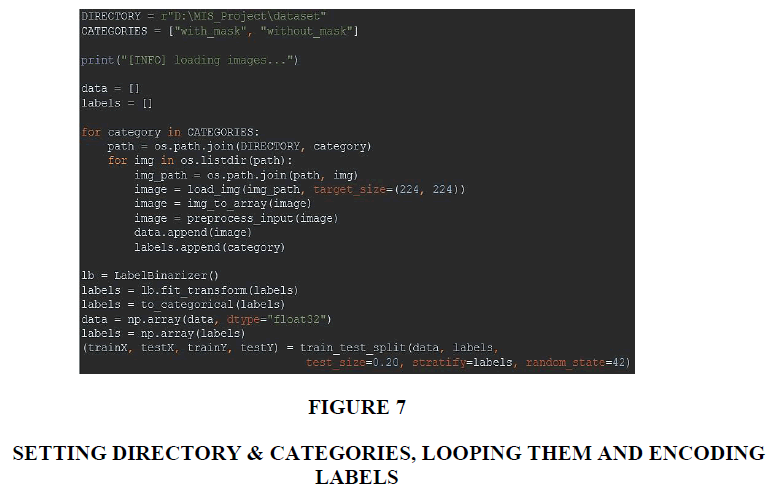

Here the DIRECTORY was set to the location of the dataset folder, so that the program knows which dataset to use. Then CATEGORIES was set to the values “with_mask” and “without_mask”, which are the folders present in the DIRECTORY. After that, a ‘for’ loop iterated over the CATEGORIES, using ‘os.path.join’ to build the path to each category folder. All the images in that folder were listed using the ‘listdir’ command, and each image was loaded using the ‘load_img’ preprocessing function of the previously imported ‘keras’ package with a target size of (224, 224), which is the height and width of the image. Each image was then converted into an array using another ‘keras’ function called ‘img_to_array’. Because the ‘mobilenet’ model of the ‘tensorflow’ package was used, the researchers applied its ‘preprocess_input’ function here. After preprocessing, the images were appended to the previously created ‘data’ list and their categories to the ‘labels’ list.
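The loading loop described above can be sketched roughly as follows. This is a minimal sketch rather than the study's own code: the function names are hypothetical, and the MobileNetV2 variant of ‘preprocess_input’ is an assumption. The TensorFlow imports sit inside the second function so that the folder-listing helper can be tried without TensorFlow installed.

```python
import os

CATEGORIES = ["with_mask", "without_mask"]


def list_labeled_images(directory, categories=CATEGORIES):
    """Return (image_path, label) pairs, one per file in each category folder."""
    pairs = []
    for category in categories:
        path = os.path.join(directory, category)
        for name in sorted(os.listdir(path)):
            pairs.append((os.path.join(path, name), category))
    return pairs


def load_dataset(directory, categories=CATEGORIES, target_size=(224, 224)):
    """Load, resize and preprocess every image (requires TensorFlow)."""
    # Assumption: the MobileNetV2 preprocessing function is the one intended.
    from tensorflow.keras.applications.mobilenet_v2 import preprocess_input
    from tensorflow.keras.preprocessing.image import img_to_array, load_img

    data, labels = [], []
    for img_path, category in list_labeled_images(directory, categories):
        image = load_img(img_path, target_size=target_size)  # resize to 224x224
        image = img_to_array(image)                          # PIL image -> array
        image = preprocess_input(image)                      # scale pixel values
        data.append(image)
        labels.append(category)
    return data, labels
```

Calling `load_dataset` with the path of the dataset folder would return the two parallel lists that the text calls ‘data’ and ‘labels’.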

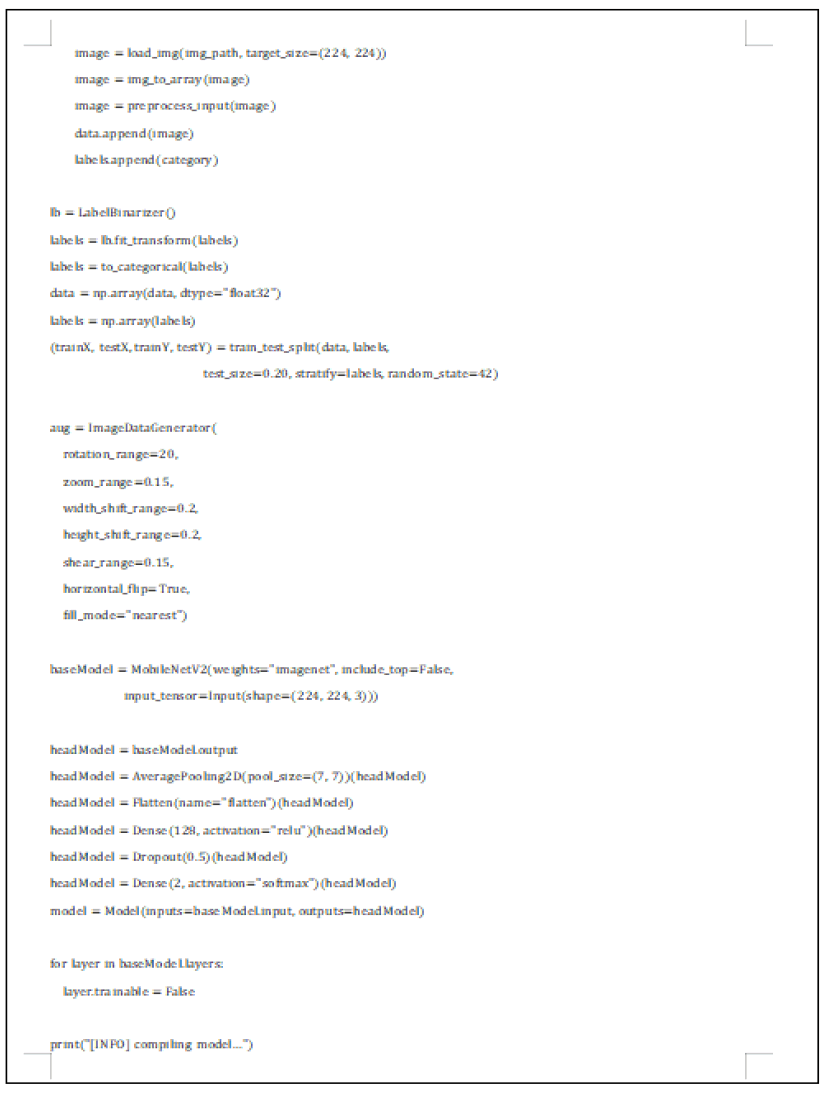

Then ‘train_test_split’ was used to split the data into training and testing sets. The test size was set to 0.20, which means that 20% of the images were used for testing and the remaining 80% for training. Giving the larger share of the data to training generally helps to get a good test result. The split was stratified on the labels, which keeps the proportion of each class the same in the training and testing sets, and ‘random_state’ is a seed that makes the split reproducible. The particular number chosen does not matter much; it only determines which images land in each set.
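To illustrate what a stratified 80/20 split does, here is a standard-library sketch. It is not scikit-learn's ‘train_test_split’ and will not select the same images; the function name and the seed value 42 are assumptions made for the example.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, test_size=0.20, seed=42):
    """Split (item, label) pairs so each class keeps roughly the same test share."""
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append(item)

    rng = random.Random(seed)  # a fixed seed makes the split reproducible
    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_test = round(len(group) * test_size)
        test += [(item, label) for item in group[:n_test]]
        train += [(item, label) for item in group[n_test:]]
    return train, test


# Class sizes reported for the dataset: 1915 with mask, 1918 without mask.
items = list(range(1915 + 1918))
labels = ["with_mask"] * 1915 + ["without_mask"] * 1918
train, test = stratified_split(items, labels)
print(len(train), len(test))  # 3066 767
```

Running the function twice with the same seed returns the same split, which is the role ‘random_state’ plays in the text.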

Training Model

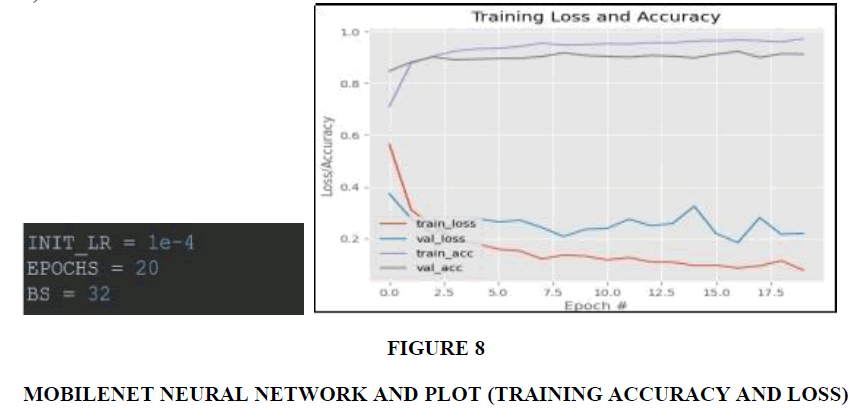

To train the model, a convolutional neural network was used with a slight change. To understand the change, the convolutional neural network (CNN) model, discussed briefly above, needs to be understood first. MobileNet, a variant of ConvNet, was used (Figure 8).

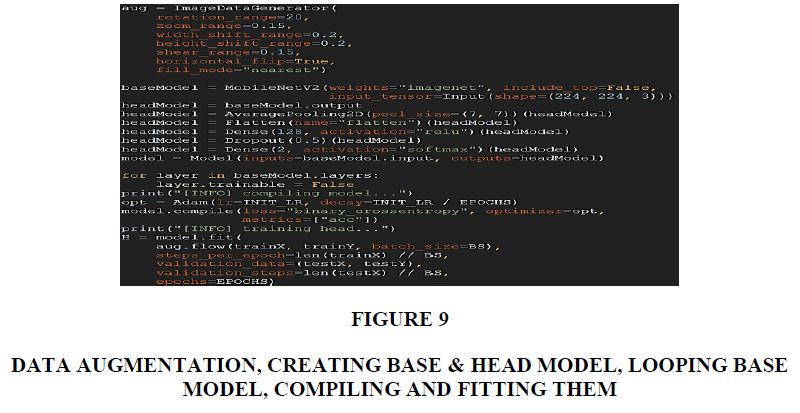

Here, INIT_LR was set to 1e-4 (that is, 0.0001), which is the initial learning rate. A low learning rate lets the loss decrease steadily, which makes a better accuracy easier to reach. EPOCHS was set to 20 and the batch size to 32. As mentioned previously, MobileNet was used, which results in two models: the MobileNet model itself (baseModel) and a regular model built on its output (headModel). After creating baseModel, the headModel was created using the output of baseModel. A pooling layer was then added using the ‘AveragePooling2D’ function with a pool size of 7×7. A Flatten layer was added, followed by a Dense layer with 128 neurons and ‘relu’ as the activation. ReLU is used to introduce non-linearity into models like this one.

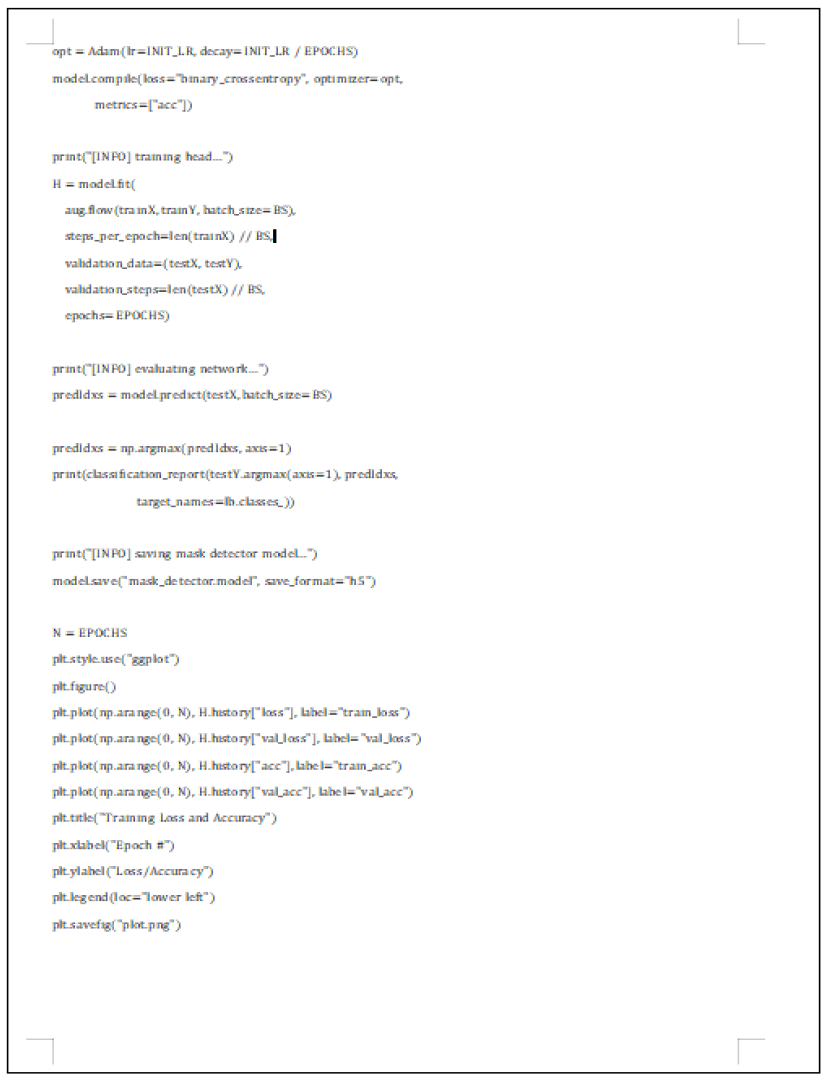

To avoid overfitting, Dropout was used. Finally, the headModel was completed with an output layer of two units (with mask and without mask) and softmax as the activation, because softmax produces a probability for each of the two classes. The Model function was then called with baseModel as the input and headModel as the output. The layers of baseModel were frozen using a ‘for’ loop, because baseModel is used in place of a conventional CNN and its weights should not be updated during training. Once both parts were ready, the model was compiled with the initial learning rate, the Adam optimizer (a go-to optimizer) and accuracy as the tracked metric. The model was then fit. A function was created to plot the accuracy and loss of the model using Matplotlib. The resulting plot, shown below, displays the accuracy and loss of the model. It is evident from the plot that the accuracy is quite good and that the loss decreases steadily, which is also a positive sign for the model. Therefore, the model can be said to be validated (Figure 9).

Figure 9 Data Augmentation, Creating Base & Head Model, Looping Base Model, Compiling and Fitting Them

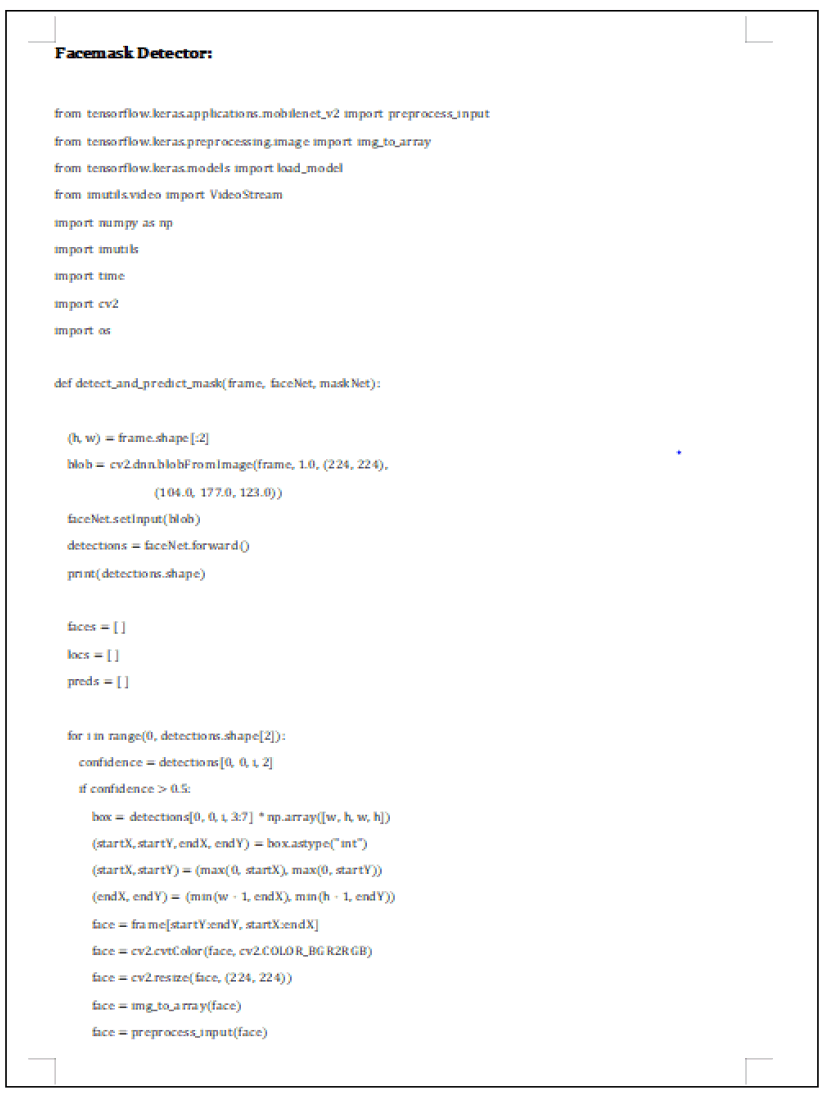
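The base model, head model, freezing loop and compile step described above might be put together roughly as in the following sketch. Several details are assumptions because the text does not state them: the MobileNetV2 variant, ImageNet weights, a dropout rate of 0.5 and the ‘binary_crossentropy’ loss. The snake_case names stand in for the ‘baseModel’ and ‘headModel’ of the text, and the code in Figure 9 may differ.

```python
INIT_LR = 1e-4  # initial learning rate (0.0001)
EPOCHS = 20     # number of training epochs
BS = 32         # batch size


def build_mask_model(weights="imagenet", dropout_rate=0.5):
    """Frozen MobileNetV2 base plus a small trainable head (requires TensorFlow)."""
    from tensorflow.keras.applications import MobileNetV2
    from tensorflow.keras.layers import (AveragePooling2D, Dense, Dropout,
                                         Flatten, Input)
    from tensorflow.keras.models import Model
    from tensorflow.keras.optimizers import Adam

    # Base model: MobileNetV2 without its original classification layers.
    base_model = MobileNetV2(weights=weights, include_top=False,
                             input_tensor=Input(shape=(224, 224, 3)))

    # Head model: built on top of the base model's output.
    head = base_model.output
    head = AveragePooling2D(pool_size=(7, 7))(head)
    head = Flatten()(head)
    head = Dense(128, activation="relu")(head)
    head = Dropout(dropout_rate)(head)           # assumed rate, guards against overfitting
    head = Dense(2, activation="softmax")(head)  # with mask / without mask

    model = Model(inputs=base_model.input, outputs=head)

    # Freeze the base so only the head is updated during training.
    for layer in base_model.layers:
        layer.trainable = False

    model.compile(loss="binary_crossentropy",  # assumed loss
                  optimizer=Adam(learning_rate=INIT_LR),
                  metrics=["accuracy"])
    return model
```

With training arrays prepared, a call such as `model.fit(train_x, train_y, batch_size=BS, epochs=EPOCHS)` would start training; `train_x` and `train_y` are placeholder names.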

Using models in real time camera

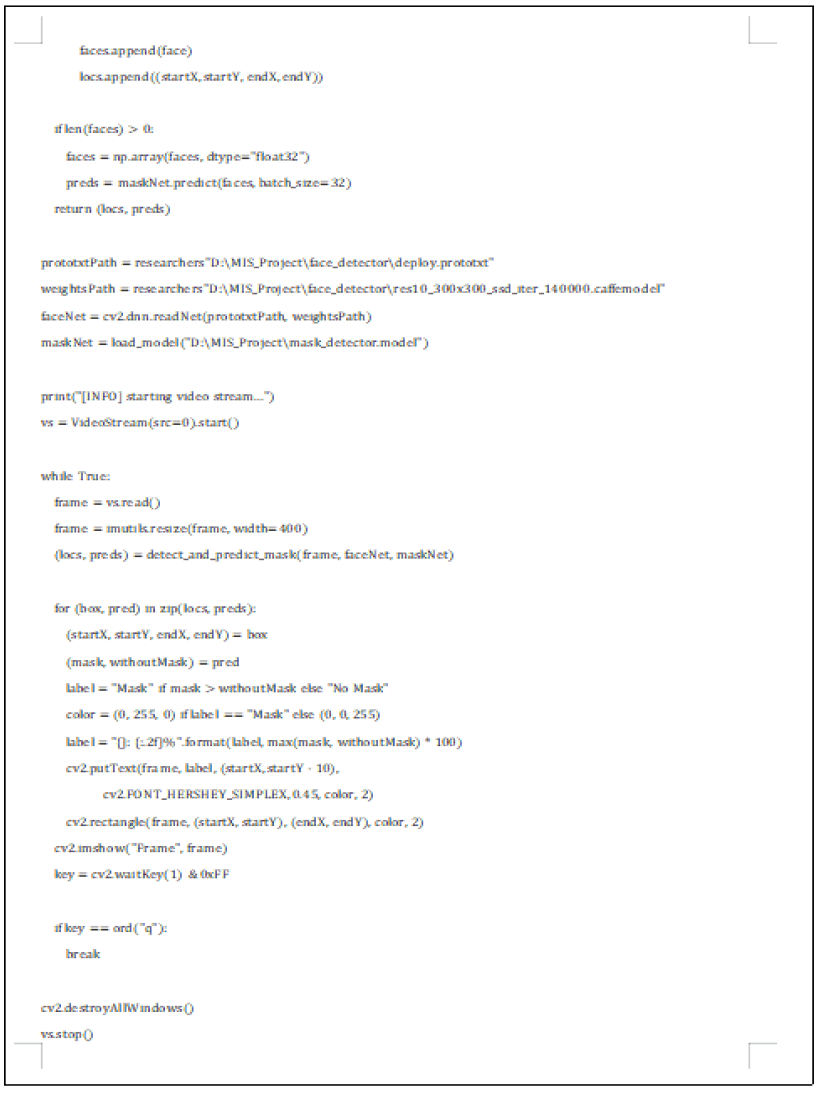

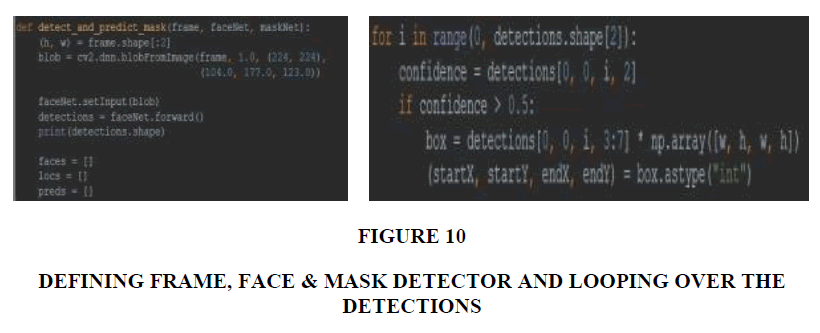

Since the mask detector model had already been built, a face detector model was also needed so that both detectors could be used with the real-time camera. A new Python file was created to use the mask detector model and the face detector together. In it, a ‘for’ loop over the detections was created and the confidence associated with each detection was extracted, so that weak detections could be filtered out by enforcing a minimum confidence level. The face ROI was also extracted and converted from BGR to RGB channel ordering. Then the face and its bounding box were appended to their respective lists.

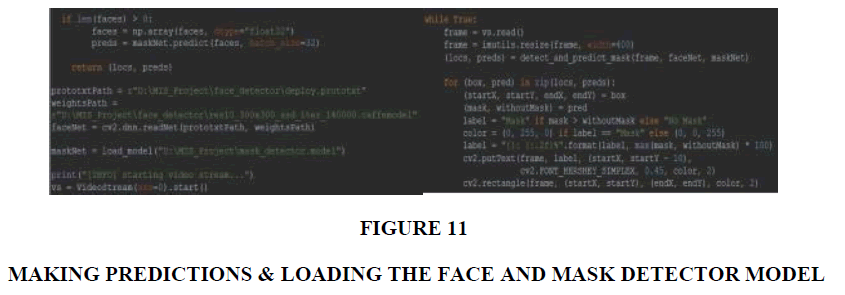

Here, it was ensured that the coordinates of the bounding box fall within the frame so that the face can be detected (Figures 10 & 11).
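The confidence filtering and the check that box coordinates stay inside the frame can be sketched in plain Python as follows. The input format, the function name and the 0.5 threshold are assumptions for illustration; the study's code works on the raw output of the face detector network.

```python
def keep_confident_boxes(detections, frame_w, frame_h, min_confidence=0.5):
    """Drop weak detections and return pixel boxes clamped to the frame.

    `detections` is an iterable of (confidence, (x1, y1, x2, y2)) where the
    box corners are fractions of the frame width and height.
    """
    boxes = []
    for confidence, (x1, y1, x2, y2) in detections:
        if confidence <= min_confidence:
            continue  # filter out weak detections
        # Scale to pixels, then clamp so the box never leaves the frame.
        start_x = max(0, int(x1 * frame_w))
        start_y = max(0, int(y1 * frame_h))
        end_x = min(frame_w - 1, int(x2 * frame_w))
        end_y = min(frame_h - 1, int(y2 * frame_h))
        boxes.append((start_x, start_y, end_x, end_y))
    return boxes


detections = [
    (0.98, (0.25, 0.25, 0.75, 0.75)),   # confident, fully inside the frame
    (0.30, (0.10, 0.10, 0.20, 0.20)),   # too weak, discarded
    (0.90, (-0.05, 0.50, 1.10, 0.75)),  # confident, spills over the edges
]
print(keep_confident_boxes(detections, frame_w=400, frame_h=300))
# [(100, 75, 300, 225), (0, 150, 399, 225)]
```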

In addition, the face detector files and the previously trained mask detector model were loaded. Paths to them were provided, and the face detector was stored in the ‘faceNet’ variable and the mask detector in the ‘maskNet’ variable. The ‘faceNet’ variable was created using the ‘cv2.dnn.readNet’ function, and the mask detector was loaded using the ‘load_model’ function. Then “[INFO] starting video stream…” was printed to indicate progress. A label was then created for the prediction: if the person is wearing a mask it shows ‘Mask’, and if not it shows ‘No Mask’. The color of the rectangular box was set to green (0, 255, 0) for a mask and red (0, 0, 255) for no mask. To understand these values, note that a color is specified by three basic channels in BGR (blue, green, red) order. Here, 0 means the channel is fully off and 255 means it is fully on. Therefore, to make green, Blue (B) was set to 0, Green (G) to 255 and Red (R) to 0, which produces pure green. The same process was followed to produce red. The label was then displayed using a format string, showing the higher of the two probabilities (mask or no mask) as a percentage. Here the maximum prediction for Mask and No Mask is typically above 90%, leaving up to 10% for prediction error. When a mask is not worn properly, different values are shown. Finally, the output frame was displayed, and the ‘q’ key was set to break the loop.
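The choice of label, box color and percentage text described above can be sketched as a small function. The function and constant names are hypothetical; the color tuples and the ‘Mask’ / ‘No Mask’ labels follow the text.

```python
GREEN_BGR = (0, 255, 0)  # blue=0, green=255, red=0
RED_BGR = (0, 0, 255)    # blue=0, green=0, red=255


def describe_prediction(mask_prob, without_mask_prob):
    """Return the display text and BGR box color for one face prediction."""
    label = "Mask" if mask_prob > without_mask_prob else "No Mask"
    color = GREEN_BGR if label == "Mask" else RED_BGR
    # Show the higher of the two probabilities as a percentage.
    text = "{}: {:.2f}%".format(label, max(mask_prob, without_mask_prob) * 100)
    return text, color


print(describe_prediction(0.97, 0.03))  # ('Mask: 97.00%', (0, 255, 0))
print(describe_prediction(0.25, 0.75))  # ('No Mask: 75.00%', (0, 0, 255))
```

In a real-time loop, the returned text and color would be handed to the drawing calls that put the label and rectangle on each frame.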

Conclusion

The focus of this research was to create a prototype of a face-mask detector that might serve as a basis for real-life applications. The researchers tried to achieve the highest accuracy possible in the model. This research helps in understanding topics related to machine learning and artificial intelligence, and a lot can be learned about the Python tools used to develop such a system. In addition, it might help other researchers who are considering work on related problems. In the end, it can be said that the goal of this research has been achieved. Please see the Appendix for the source code of the AI model.

References

- Albawi, S., Mohammed, T. A., & Al-Zawi, S. (2017). Understanding of a convolutional neural network. Proceedings of 2017 International Conference on Engineering and Technology (ICET), pp. 1-6.

- Altınbaş, Ö., Ertaş, İ. H., Mete, A. Ö., Demiryürek, Ş., Hafız, E., Saracaloğlu, A., & Demiryürek, A. T. (2021). Comparison of the N-Terminal pro-Brain Natriuretic Peptide Levels, Neutrophil-to-Lymphocyte, Lymphocyte-to-Monocyte and Platelet-to-Lymphocyte Ratios Between the Patients with COVID-19 and Healthy Subjects; Are the Patients with COVID-19 Under the Risk of Cardiovascular Events? Authorea, Preprints, 2021.

- Charniak, E. (1985). Introduction to artificial intelligence. Pearson Education India.

- Ghajarzadeh, M., Mirmosayyeb, O., Barzegar, M., Nehzat, N., Vaheb, S., Shaygannejad, V., & Maghzi, A. H. (2020). Favorable outcome after COVID-19 infection in a multiple sclerosis patient initiated on ocrelizumab during the pandemic. Multiple Sclerosis and Related Disorders, 43, 2020.

- Guo, Y., Liu, Y., Oerlemans, A., Lao, S., Wu, S., & Lew, M. S. (2016). Deep learning for visual understanding: A review. Neurocomputing, 187, 27-48.

- Jordan, M. I., & Mitchell, T. M. (2015). Machine learning: Trends, perspectives, and prospects. Science, 349, 255-260.

- LeCun, Y., Bengio, Y., & Hinton, G. (2015). Deep learning. Nature, 521, 436-444.

- O'Shea, K., & Nash, R. (2015). An introduction to convolutional neural networks. arXiv:1511.08458v2.

- Pan, H., Pang, Z., Wang, Y., Wang, Y., & Chen, L. (2020). A new image recognition and classification method combining transfer learning algorithm and mobilenet model for welding defects. IEEE Access, 8, 119951-119960.

- Salamzadeh, A., & Dana, L. P. (2020). The coronavirus (COVID-19) pandemic: challenges among Iranian startups. Journal of Small Business & Entrepreneurship, 32, 1-24.

- Sanner, M. F. (1999). Python: a programming language for software integration and development. Journal of molecular graphics & modelling, 17(1), 57-61.

- Srinivas, B. (2020). Face Mask Detection. Retrieved from https://github.com/balajisrinivas/Face-Mask-Detection

- Sung, K.-K., & Poggio, T. (1998). Example-based learning for view-based human face detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20, 39-51.

- van Rossum, G., & de Boer, J. (1991). Interactively testing remote servers using the Python programming language. CWi Quarterly, 4, 283-303.

After completing all the EPOCHS, something like the above picture will appear. The code used to develop this research follows.