Research Article: 2022 Vol: 25 Issue: 6S

Implication of hybrid learning on student-teacher rapport

Reem Al Qenai, Kuwait Technical College

Randa Diab-Bahman, Kuwait College of Science & Technology

Sapheya Aftimos, Australian University

Citation Information: Al Qenai, R., Diab-Bahman, R., & Aftimos, S. (2022). Implication of hybrid learning on student-teacher rapport. Journal of Management Information and Decision Sciences, 25(S6), 1-19.

Keywords

Covid-19, Online Learning, Decision Making, Hybrid, SIRS

Abstract

During the unexpected pandemic, education systems around the world underwent many changes. The abrupt switches between different systems of learning led to many disruptions in the learning process. Although there is a wealth of information on the implications of online learning, and even more on conventional in-person education, there is a dearth of information on the hybrid method, particularly from the students' point of view. Many aspects of the learning process, including rapport and relationships, have been investigated previously, yet a research gap remains in helping practitioners and policy makers identify optimal methods of learning. This research is therefore one of the first to investigate the implications of hybrid learning for building student-teacher rapport by comparing it with a fully online system. In this paper, the SIRS-9 was used to measure two student-instructor constructs (Rapport & Relationship) to explore the perceptions of undergraduate students (n=283) regarding hybrid and online learning methods. The findings reveal that all perceptions of online learning had higher mean ratings than the corresponding perceptions of hybrid learning. Thus, it can be concluded that, for these students, online learning was more effective and successful than hybrid learning in building student-instructor rapport and relationships. The findings are important as they will help further determine the pros and cons of the different methods of learning so that relevant decisions can be made.

Introduction

Despite extraordinary growth in higher education systems, the education of most students around the world was disrupted by the COVID-19 pandemic. The sudden spread of the virus led to the closure of universities (Miyah et al., 2022a). Universities and colleges are now adapting to new learning models and transitioning from traditional classroom methods to new schedules and implementations. The uncertainty of the pandemic still prevents educators from assessing the situation and making decisions at the global level (Zahra & Sheshasaayee, 2022). Today's teaching is full of challenges and opportunities, but also of change. Being an instructor demands new teaching methods, strategies, activities, attitudes, values, and perspectives in the classroom. At the same time, the educational field faces unprecedented opportunities and challenges arising from world multipolarization and economic globalization, the rapid progress of science and technology, and increasingly fierce competition.

At all educational levels, research consistently shows a positive link between student-teacher rapport and student outcomes (Siragosa, 2002; Lammers et al., 2017; Ali, 2020). However, few scales have been developed to measure rapport at the university level, and no study has examined differences in student-instructor rapport between learning methods, particularly between online courses and hybrid learning (Lammers & Gillaspy, 2013). Rapport between students and teachers leads to numerous positive student outcomes, including attitudes toward the teacher and course, student motivation, and perceived learning (Wilson & Ryan, 2013). Moreover, interaction between instructors and students, particularly during online education, has been shown to be crucial to students' success (Hwang, 2018). Learner satisfaction and experience are also important indicators of success that can inform institutions' decisions on learning-space investments, and learning competences have been found to correlate with learners' satisfaction and experience in both general and online learning settings (Xiao et al., 2020).

In addition, existing research often expresses cautious optimism about synchronous hybrid learning because of its flexible environment compared with fully online or fully on-site instruction, while emerging research shows that this new learning space poses several challenges that are both pedagogical and technological in nature (Raes et al., 2020). Building rapport also depends on stability, with fewer changes and disruptions for both students and teachers, whereas hybrid learning causes considerable turbulence in the overall education process, whether through the constant switching from one mode to another or through the lack of consistency across several aspects of the educational ecosystem (SOURCE).

In this research, the goal is to identify, from the students' point of view, the effects of and differences in building rapport with their instructors by comparing their exclusively online experience (which took place abruptly and involuntarily during the forced pandemic lockdown) with their hybrid experience (which included some classes on campus and some online). The aim is to explore potential causal factors in the success of the forced implementation of the online education paradigm, and to gain insight into choosing the best option when faced with such a scenario, according to the needs and perceptions of the primary stakeholders: the students. The outcomes highlight the need to view the educational ecosystem as one that works in synergy, and identify an often-overlooked obstacle.

Scales of Measure

Instrument development has been an ongoing, innovative process to which many have contributed, ensuring that theories are tested correctly and efficiently and that the resulting data are as accurate as possible. If theories are not solid and not developed correctly, problems in the data will arise (Bohrnstedt, 2010). To achieve this, researchers must develop a strong theory in order to develop a solid measurement. When it comes to measuring student-instructor rapport, very few scales have been developed. The Student-Instructor Relationship Scale (SIRS) comprises 45 items (Creasey, Jarvis & Knapcik, 2009). Of these items, 11 belong to connectedness subscales and 34 relate to the Professor-Student Rapport Scale (Wilson et al., 2010). The SIRS was further simplified into a two-part, 9-item psychometric scale that measures the student-instructor relationship and rapport, known as the Student-Instructor Rapport Scale-9 (SIRS-9; Lammers, 2013). The questionnaire shows predictive validity with respect to students' perceptions of their learning experience in the virtual or in-person classroom. Lammers & Gillaspy (2013) developed the 9-item rapport scale based on a review of rapport scales in a variety of settings (teacher-child, instructor-student, therapist-client, married couple, employer-employee), as well as scales of connectedness and anxiety.

It is generally held that students' success is strongly connected to student-instructor rapport (Hattie, 2009; Juvonen, 2006; Wentzel, 2009; Wigfield, Cambria & Eccles, 2012). In further studies, students reported that good rapport within a class includes attributes such as encouragement, creativity, accessibility, happiness, class discussions, instructor approachability and concern, and fairness (Benson, Cohen & Buskist, 2005). More importantly, studies have also indicated that class rapport increases when instructors display immediacy behaviors as part of the classroom environment (Creasey, Jarvis & Gadke, 2009; Wilson, Ryan & Pugh, 2010). Such immediacy behaviors include “verbal and nonverbal communicative actions that send positive messages of liking and closeness, decrease psychological distance between people, and positively affect student state motivation” (Christophel & Gorham, 1995).

Meanwhile, in evaluating online classes, many researchers reported that instructors faced many challenges in building positive student-instructor rapport (Allen, Seaman, Lederman & Jaschik, 2012; Sher, 2009). They also reported that delivery felt less natural, since building a relationship with students required more deliberate effort (Murphy & Rodriguez-Manzanares, 2012), and that much of the class time was devoted to technology tools and digital communication (Sull, 2006). Furthermore, such studies indicated that instructors faced obstacles that required them to focus on other aspects such as “Recognizing the person/individual”, “Supporting and monitoring”, “Availability, accessibility, and responsiveness”, “Non-text-based interactions”, “Tone of interactions”, and “Non-academic conversation/interaction” (Granitz et al., 2009). Despite these findings on online courses, sufficient data on the matter have still not been collected, and more research needs to be conducted (Wilson & Ryan, 2013).

Hybrid vs Online Education

Interest in hybrid learning is increasing across all levels of education and training (Jamison et al., 2014). Hybrid, or blended, learning refers to combining an online learning environment, which offers the flexibility of distance or out-of-classroom learning, with face-to-face classroom instruction conducted by instructors (Dowling et al., 2003; Zitter, 2012). In higher education in particular, hybrid modes of learning have become an increasingly widespread practice worldwide. For an instructor, moving a course from on-ground to online (or to some combination of the two) requires a substantial investment of time and energy, along with many specific steps. This process can lead to high-quality course development and can enhance the curriculum reform process as well. Because the level of face-to-face interaction with the instructor is not the same in an online or hybrid course, the instructor has to redesign the course material and structure to ensure that each learner obtains the appropriate experience and interaction (Hapke et al., 2021).

Within this context, the literature asserts the significance of establishing rapport between teachers and their students in order to foster a classroom environment that is conducive to learning (Frisby & Martin, 2010a; Sybing, 2019). Especially given the inconsistent outcomes of university students, it is crucial for instructors and policy makers to consider teaching practices in university classrooms, as well as policies relevant to teaching and learning in university contexts (Dowling et al., 2003). Universities in the modern world are expected to seek and cultivate new knowledge, provide the right kind of leadership, and strive to promote equality and social justice (Yang, 2020). Accordingly, university instructors endeavor to encourage student learning and to build satisfying relationships with students (Raes, 2022).

Online vs In-Person Implications

The continuing rise in online course offerings, combined with a paradigm shift from instructor-centered to learner-centered education, calls for new ways of devising learning environments and highlights an expanding role for instructors (Mishra et al., 2020). The urgent imperative to ‘move online’ caused by the Covid-19 pandemic added to the stress and workloads of university faculty and staff, who were already struggling to balance teaching, research, and service obligations, not to mention work-life balance (Rapanta et al., 2020). The development of online and hybrid programs brings a new dynamic to the curriculum review process and may provide an opportunity to disrupt the inertia that often characterizes curriculum review (Intorcia, 2021). Online classes are, by definition, physically isolating, so fostering interaction and engagement can be a challenge. Students tend to withdraw from courses when they feel isolated, but one way to counteract that isolation is through positive course interactions with the instructor, which can be a major influence on student success in online courses (Glazier, 2016a).

One implication is that the tension between institutional demands and individual pedagogical preferences can leave some faculty struggling with the time-intensive task of experimenting with new technologies and methodologies. There are several barriers to online learning. From the perspective of the quality of e-learning outcomes, the barriers can be classified into four types: learners, instructor, curriculum, and university (Muilenburg & Berge, 2005). Viewed from the perspective of online learning, however, the barriers are technology, online communication with the instructor, online communication with peers, electronic text-based study materials, and online learning activities. Online courses are solidly in the mainstream of higher education. Even before 2020, when the COVID-19 pandemic unexpectedly shifted universities to a much greater reliance on online education, the integration of online courses was already well underway (Webb et al., 2005a). However, no known research explains how instructors maintain rapport with online students once it has been initiated. Moreover, it is unknown whether the strategies online instructors use to initiate and maintain instructor-student rapport are similar to or different from those used in hybrid learning (Frisby & Martin, 2010b).

Importance of Relationship & Rapport

Relationships and rapport are important ingredients in the student-instructor interpersonal relationship (Flanigan et al., 2022; Frisby & Buckner, 2018). Rapport is defined as an overall feeling between two people encompassing a mutual, trusting, and pro-social bond (Altman, 1990; Benson et al., 2005; Bernieri, 1998), while student relations are a key element of any learning context. Although rapport is acknowledged to play an important role in overall learning, little is known about its importance in online education, as the literature is primarily concerned with learning in face-to-face contexts (Clark-Ibanez & Scott, 2008). Despite its importance, and compared with other classroom variables, little is known about rapport in hybrid classroom environments (Webb et al., 2005b). Hence, the purpose of this study was to examine the importance and impact of building rapport through instructor-student interactions in hybrid learning classrooms. Given the prominence of instructor-student rapport in educational settings, many studies have examined the effects of this positive communication behavior on a range of student-related outcomes, including motivation (Martin & Bolliger, 2018), academic engagement (Diab-Bahman et al., 2021), academic success (Glazier, 2016b), and academic achievement (Voelkl, 1995).

The role an instructor plays in the classroom is also critical to building a user-friendly and engaging learning environment. Instructors take on multiple roles during instruction, and these roles have generally been categorized into two major types: instructional and interpersonal (Park, 2016). The instructional role focuses on delivering subject-matter knowledge and providing feedback; the interpersonal role is concerned with being social and engaging students on a personal level. Moreover, the relationship between an instructor and a student has been labeled an interpersonal one, in which both instructors and students enter the classroom with relational goals (Frisby & Martin, 2010a). An instructor's ability to convey interpersonal messages is considered one dimension of instructional communication competence and may serve to achieve relational goals, since the classroom is made up of multiple interpersonal relationships that contribute to the construction of a unique society (Kaufmann & Vallade, 2020).

In research by Lammers et al. (2017), conducted in a conventional classroom, SIRS-9 rapport scores correlated positively with final grades. The researchers measured rapport at different points in the semester and found that students for whom rapport decreased across the semester had significantly lower final grades than students for whom rapport remained stable or increased. Diab-Bahman et al. (2021) found that, during the pandemic, online attendance was negatively correlated with overall grades, suggesting that attendance is not necessarily a determining factor for positive experiences. Another study, which investigated students' needs for online learning, showed that apart from cognitive engagement competence, which relates to rapport and engagement within the available mix of learning options, most of the competences examined were not significantly associated with hybrid learners' satisfaction and experience (Xiao, 2008). A further study of predictors of student success in online classrooms concluded that individual students' participation in online activities has significant predictive value for their final grades, including through the perceived relationship between students and their teachers (Park et al., 2019). Moreover, research conducted during the lockdown revealed that students' voices were significantly underrepresented and often dismissed (Diab-Bahman & Al-Enzi, 2020), and that remote conditions had a positive impact on performance (Diab-Bahman, 2021).

Methodology

This study employs a primarily quantitative approach, as it is descriptive in nature and uses a closed-answer survey. Data were collected through a structured, self-administered survey of local undergraduate students across different years of study. This data-oriented approach is best described as causal-comparative, in that it aims to find the impact of an element on a group, or a factor for comparison. Also called quasi-experimental research, this quantitative method is used to infer cause-and-effect relationships between two or more variables, where one variable is dependent on another, independent variable (Chiang et al., 2015).

Instrument

The self-administered online questionnaire, the Student-Instructor Rapport Scale-9 (SIRS-9), a two-part 9-item rapport scale with a total of 18 questions rated on a 5-point Likert scale, was given to university students taking classes in hybrid form, that is, both online and in-person courses. The survey was offered in two languages, English and Arabic, to increase the number of participants and to reduce language barriers, as most locals speak English as a second language. The questions were organized categorically to compare the two experiences (online and in-class learning) and thus address the research objectives. The simplified SIRS-9 measures were chosen as the main instruments for this research because they best fit its objectives, which were to identify rapport and relationships between students and teachers. The chosen instruments were also simple to read and administer, particularly for students whose English was a second language.

Sampling

Participants were selected using purposeful sampling, which is a recommended approach in research aiming to report quantitative data. Patton (2001) asserts that the logic and power of purposeful sampling lie in selecting information-rich cases for in-depth study. In this study, we focus on students who are currently experiencing forced online and hybrid methods of education. Tracking respondents and non-respondents, as well as year of study, age, gender, and current university, proved essential to providing a fair representation. A snowball technique was also used, in that we asked our contacts to forward the survey to colleagues and friends who qualified to take it.

The survey originally aimed to recruit between 350 and 400 participants using the snowball sampling technique, which encourages individuals to forward the e-survey to colleagues who fit the criteria (those who had taken two consecutive semesters, one fully online followed by one in a hybrid model in which they attended campus on and off at various intervals). In total, the response rate exceeded 60%. Both male and female students from three different private universities in Kuwait took part in this research, ranging in year of study and age as illustrated below. The students were asked to answer the survey twice (side by side) in a single sitting: once based on their perceptions of their online learning experience, and once based on their perceptions of hybrid learning.

Results

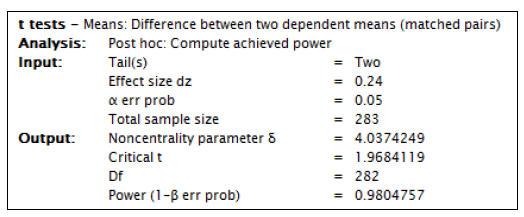

The targeted sample for this study was 350; however, only 283 observations were collected. According to Hair et al. (2014), the minimum acceptable response rate is 60%, and from the outcome above the achieved response rate was 283/350 = 80.86%. As this exceeds 60%, the sample collected can be considered acceptable (Garson, 2012; Wywial, 2015). To validate the sample size used, a post hoc power analysis was then carried out using G*Power (Ahmad, 2018; Newsom, 2018); the protocol of the analysis is presented below.
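The response-rate check is a single ratio compared against a threshold. The short Python sketch below reproduces it from the figures stated in the text (350 targeted, 283 collected, 60% minimum); the function name is illustrative only.

```python
# Response-rate check using the figures reported in the text.
TARGETED = 350      # planned sample size
COLLECTED = 283     # observations actually collected
MIN_RATE = 0.60     # minimum acceptable response rate (Hair et al., 2014)

def response_rate(collected: int, targeted: int) -> float:
    """Return the share of the targeted sample that responded."""
    if targeted <= 0:
        raise ValueError("targeted sample size must be positive")
    return collected / targeted

rate = response_rate(COLLECTED, TARGETED)
print(f"Response rate: {rate:.2%}")    # Response rate: 80.86%
print("Acceptable" if rate >= MIN_RATE else "Below threshold")
```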

For an effect size of 0.24 obtained from the main model, a 5% significance level (α = 0.05), and a sample size of 283, the resulting non-centrality parameter was λ = 4.04 (critical t(282) = 1.968), and the power was 0.980. As the power exceeds the recommended minimum of 0.800, the sample size was adequate.
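The non-centrality parameter above can be reproduced by hand. λ = 0.24 × √283 ≈ 4.04 with df = 282 matches G*Power's matched-pairs t test (difference between two dependent means), which would fit a design in which every student rated both modes; the text does not name the test, so that is an assumption here. The sketch recomputes λ and then approximates power with a normal approximation to the noncentral t distribution rather than G*Power's exact computation, so its power value should land close to, but not necessarily exactly on, the reported 0.980.

```python
import math
from statistics import NormalDist

EFFECT_SIZE = 0.24   # effect size reported for the main model
N = 283              # sample size
T_CRIT = 1.968       # two-tailed critical t for df = 282, alpha = 0.05 (from the text)

def noncentrality(d: float, n: int) -> float:
    """Non-centrality parameter for a matched-pairs t test (assumed): lambda = d * sqrt(n)."""
    return d * math.sqrt(n)

def approx_power(ncp: float, t_crit: float) -> float:
    """Approximate two-tailed power by treating the noncentral t statistic as N(ncp, 1).

    With df = 282 this is close to, but not identical with, the exact noncentral-t
    value that G*Power reports.
    """
    z = NormalDist()
    return (1 - z.cdf(t_crit - ncp)) + z.cdf(-t_crit - ncp)

lam = noncentrality(EFFECT_SIZE, N)
power = approx_power(lam, T_CRIT)
print(f"lambda = {lam:.2f}")              # lambda = 4.04
print(f"approximate power = {power:.3f}")
```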

The data were also screened for missing values and outliers for each overall factor: Student-instructor rapport – Hybrid, Student-instructor rapport – Online, Student-instructor relationship – Hybrid, and Student-instructor relationship – Online.
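The text does not say how missingness and outliers were assessed. As a minimal sketch of one common approach (an assumption, not the study's documented procedure), the Python below counts missing responses in a construct-score column and flags scores more than three standard deviations from the mean. The function name, the |z| > 3 cut-off, and the sample scores are all hypothetical.

```python
from statistics import mean, stdev

def screen(values, z_limit=3.0):
    """Count missing responses and flag outliers in one construct-score column.

    `values` holds one score per respondent; None marks a missing response.
    Returns (number_missing, indices_of_outliers), where an outlier is a score
    whose absolute z-score exceeds `z_limit` (an assumed rule, not the study's).
    """
    present = [(i, v) for i, v in enumerate(values) if v is not None]
    n_missing = len(values) - len(present)
    scores = [v for _, v in present]
    m, s = mean(scores), stdev(scores)   # sample standard deviation
    if s == 0:                           # no spread, so no score can be an outlier
        return n_missing, []
    outliers = [i for i, v in present if abs(v - m) / s > z_limit]
    return n_missing, outliers

# Hypothetical construct scores: 20 similar answers, one missing, one extreme.
scores = [4.0] * 20 + [None, 1.0]
print(screen(scores))   # (1, [21])
```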

Demographic Analysis

This study considered three main demographic variables: gender, year of study, and age group. These are summarized below.

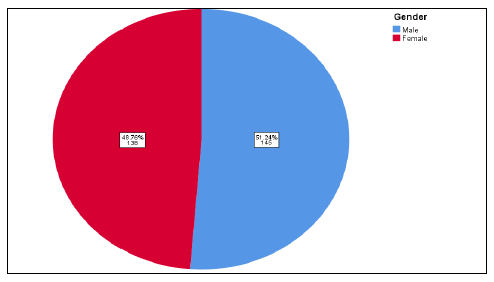

Distribution by Gender

The distribution of respondents by gender is presented in Figure 2.

From the outcome above, the majority of the participants were male (51.24%), while 48.76% were female. Although males slightly outnumbered females, the difference was marginal and not statistically significant. It was therefore deemed negligible, implying no sampling bias, given that the proportions were broadly consistent with the population proportion, as argued by Bryman & Bell (2015).
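The text reports the gender difference as not statistically significant without naming the test or the population proportion. One way to check such a claim, assumed here rather than taken from the study, is a chi-square goodness-of-fit test against an even 50/50 split, using counts back-calculated from the reported percentages of the 283 respondents.

```python
import math

N = 283
males = round(0.5124 * N)   # back-calculated from the reported 51.24%
females = N - males         # remaining 48.76%

def chi_square_even_split(a: int, b: int) -> tuple[float, float]:
    """Chi-square goodness-of-fit of two counts against a 50/50 split (df = 1).

    Returns (statistic, p-value). For df = 1 the chi-square survival function
    equals erfc(sqrt(x / 2)), so no statistics library is needed.
    """
    expected = (a + b) / 2
    stat = ((a - expected) ** 2 + (b - expected) ** 2) / expected
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

stat, p = chi_square_even_split(males, females)
print(males, females)                   # 145 138
print(f"chi2 = {stat:.3f}, p = {p:.3f}")
```

With roughly 145 males and 138 females, the statistic is far below the df = 1 critical value of 3.841 at α = 0.05, which is consistent with the text's conclusion.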

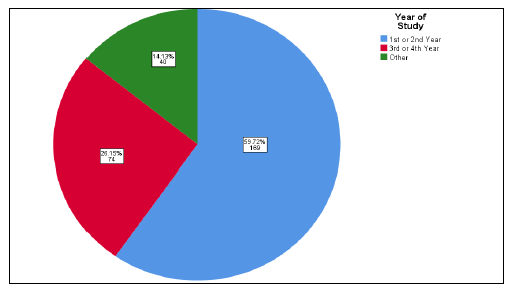

Year of Study

The second demographic attribute considered in this study was the students' year of study; the corresponding distribution is illustrated in the pie chart in Figure 3.

From the outcome, most students were in their first or second year (59.72%), followed by those in their third or fourth year (26.15%), with the smallest share being other students (14.13%). The distribution was therefore skewed toward students in earlier years of study, which is taken to reflect the population distribution.

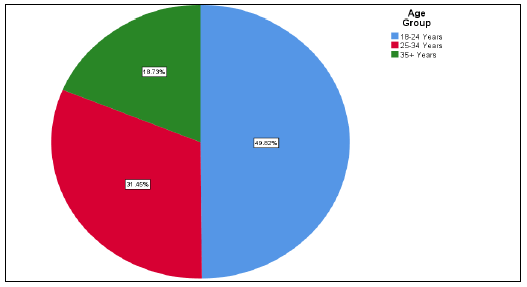

Age Group

The third attribute that was investigated was the age group of the participants and the results are illustrated in the pie chart in Figure 4 below.

The modal category consisted of participants aged 18-24 years (49.82%), followed by those aged 25-34 years (31.45%). The smallest proportion consisted of respondents aged above 35 years (18.73%). These findings are consistent with the earlier finding that most participants were younger and in lower years of study, implying that the sample collected was representative.
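Because the demographic figures are reported only as percentages, a simple consistency check is to convert each percentage back to a whole number of respondents out of N = 283 and confirm that each breakdown sums to the sample size. A Python sketch (category labels are shortened from the text):

```python
N = 283

# Percentages as reported in the text for each demographic breakdown.
breakdowns = {
    "gender": {"male": 51.24, "female": 48.76},
    "year_of_study": {"1st-2nd": 59.72, "3rd-4th": 26.15, "other": 14.13},
    "age_group": {"18-24": 49.82, "25-34": 31.45, "above 35": 18.73},
}

def implied_counts(percentages, n=N):
    """Round each percentage of n to the nearest whole respondent."""
    return {label: round(pct / 100 * n) for label, pct in percentages.items()}

for name, pcts in breakdowns.items():
    counts = implied_counts(pcts)
    print(name, counts, "total =", sum(counts.values()))
```

For the percentages reported here, every breakdown rounds to whole counts that total 283.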

Reliability & Validity

This study comprised two broad constructs: Student-Instructor Rapport (SIRA) and Student-Instructor Relationship (SIRE). Each construct and sub-construct was measured by nine items, meaning that they were latent variables (Dimitrov, 2014). According to Grolemund & Wickham (2017), it is imperative to establish the reliability and validity of the constructs before using them to test the research hypotheses.

Construct validity tests the extent to which the constructs measure what they are supposed to measure, and it was assessed using two approaches: convergent and discriminant validity. Convergent validity tests the degree of agreement among multiple items measuring the same construct, while discriminant validity tests the extent to which the constructs diverge from each other (Hoyle, 2012; Ledford & Gast, 2018). Convergent validity was tested using the Average Variance Extracted (AVE); according to Muthén & Muthén (2017), the minimum threshold for the AVE is 0.60. For discriminant validity, the Fornell-Larcker criterion was used, and according to Thompson (2018), the maximum threshold was 0.85. The results of the construct validity test are presented in Table 1.

Table 1 Construct Reliability and Validity

| Construct | CR | AVE | MSV | MaxR(H) | SIRA-H | SIRA-O | SIRE-H | SIRE-O |
|---|---|---|---|---|---|---|---|---|
| SIRA-H | 0.759 | 0.733 | 0.695 | 0.763 | - | | | |
| SIRA-O | 0.86 | 0.759 | 0.337 | 0.942 | 0.58 | - | | |
| SIRE-H | 0.948 | 0.778 | 0.312 | 0.924 | 0.568 | 0.529 | - | |
| SIRE-O | 0.707 | 0.755 | 0.653 | 0.892 | 0.437 | 0.441 | 0.557 | - |

CR = composite reliability; AVE = average variance extracted (convergent validity); MSV = maximum shared variance; MaxR(H) = maximal reliability; the SIRA-H to SIRE-O columns give the inter-construct coefficients used in the Fornell-Larcker (discriminant validity) test.

Regarding composite reliability, all four constructs had a composite reliability (CR) greater than the minimum threshold of 0.7, the lowest being for Student-Instructor Relationship – Online (SIRE-O) (CR = 0.707), meaning that all the constructs were reliable. With respect to convergent validity, all the constructs had AVEs greater than the minimum threshold of 0.60, the lowest being for Student-Instructor Rapport – Hybrid (SIRA-H) (AVE = 0.733). This confirms that convergent validity was not violated. Lastly, to test discriminant validity, the Fornell-Larcker criterion was applied; none of the coefficients between distinct constructs exceeded 0.85, the highest being 0.580, between SIRA-H and SIRA-O. These findings confirm that discriminant validity was not violated. Since neither composite reliability nor construct validity was violated, the constructs were validated for the analysis (Byrne, 2016; Bartolucci, Bacci & Gnadi, 2016).
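Composite reliability and AVE are normally computed from standardized factor loadings λ, using CR = (Σλ)² / ((Σλ)² + Σ(1 − λ²)) and AVE = Σλ² / k for k items. The paper does not report its loadings, so the sketch below uses hypothetical ones for the formulas, then reruns the discriminant-validity check on the Table 1 coefficients. Note that the conventional Fornell-Larcker criterion compares each construct's √AVE with its correlations with the other constructs; the 0.85 ceiling described in the text is checked separately. Both checks pass on the Table 1 values.

```python
import math

def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    total = sum(loadings)
    error = sum(1 - loading ** 2 for loading in loadings)  # standardized error variance
    return total ** 2 / (total ** 2 + error)

def average_variance_extracted(loadings):
    """AVE = mean of the squared standardized loadings."""
    return sum(loading ** 2 for loading in loadings) / len(loadings)

# Table 1 values: AVE per construct and the lower-triangle inter-construct coefficients.
ave = {"SIRA-H": 0.733, "SIRA-O": 0.759, "SIRE-H": 0.778, "SIRE-O": 0.755}
corr = {
    ("SIRA-O", "SIRA-H"): 0.580,
    ("SIRE-H", "SIRA-H"): 0.568, ("SIRE-H", "SIRA-O"): 0.529,
    ("SIRE-O", "SIRA-H"): 0.437, ("SIRE-O", "SIRA-O"): 0.441, ("SIRE-O", "SIRE-H"): 0.557,
}

# Rule as stated in the text: no coefficient between distinct constructs above 0.85.
ceiling_ok = all(r <= 0.85 for r in corr.values())

# Conventional Fornell-Larcker rule: the sqrt(AVE) of each construct in a pair
# exceeds the correlation between the two constructs.
fornell_larcker_ok = all(
    r < min(math.sqrt(ave[a]), math.sqrt(ave[b])) for (a, b), r in corr.items()
)

print(ceiling_ok, fornell_larcker_ok)   # True True
# Hypothetical loadings, purely to illustrate the formulas:
print(composite_reliability([0.8] * 9), average_variance_extracted([0.8] * 9))
```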

Construct Reliability

While composite reliability confirmed that the composite constructs were reliable, it was also necessary to determine whether all the measurement items reliably measured their constructs. To this end, the corrected item-total correlation coefficient was examined along with the overall Cronbach's alpha. Pallant (2013) and Howitt & Cramer (2017) prescribe a minimum acceptable item-total correlation coefficient of 0.30 and a minimum overall Cronbach's alpha of 0.70. The results of the reliability test for the SIRA construct are presented in Table 2.

Table 2 Reliability Test – Student-Instructor Rapport

| Item | Corrected Item-Total Correlation | Cronbach's Alpha if Item Deleted | Overall Cronbach’s Alpha |
|---|---|---|---|
| Student-Instructor Rapport (Hybrid) | | | |
| Your instructor understands you | 0.647 | 0.88 | 0.818 |
| Your instructor encourages you | 0.684 | 0.877 | |
| Your instructor cares about you | 0.66 | 0.879 | |
| Your instructor treats you fairly | 0.691 | 0.876 | |
| Your instructor communicates effectively with you | 0.694 | 0.876 | |
| Your instructor respects you | 0.605 | 0.883 | |
| Your instructor has earned your respect | 0.646 | 0.88 | |
| Your instructor is approachable when you have questions or comments | 0.636 | 0.881 | |
| In general, you are satisfied with your relationship with the instructor | 0.577 | 0.885 | |
| Student-Instructor Rapport (Online) | | | |
| Your instructor understands you | 0.647 | 0.88 | 0.892 |
| Your instructor encourages you | 0.684 | 0.877 | |
| Your instructor cares about you | 0.66 | 0.879 | |
| Your instructor treats you fairly | 0.691 | 0.876 | |
| Your instructor communicates effectively with you | 0.694 | 0.876 | |
| Your instructor respects you | 0.605 | 0.883 | |
| Your instructor has earned your respect | 0.646 | 0.88 | |
| Your instructor is approachable when you have questions or comments | 0.636 | 0.881 | |
| In general, you are satisfied with your relationship with the instructor | 0.577 | 0.885 | |

The overall alpha statistic was 0.892 for Student-Instructor Rapport (Online) and 0.818 for Student-Instructor Rapport (Hybrid). As both exceed 0.70, the construct was reliable in both modes. Further, all items had a corrected item-total correlation greater than 0.30, implying that all the items were reliable measures, so none of the items for the construct was dropped. The reliability test for SIRE is presented in Table 3.

Table 3 Reliability Test – Student-Instructor Relationship

| Item | Corrected Item-Total Correlation | Cronbach's Alpha if Item Deleted | Overall Cronbach’s Alpha |
|---|---|---|---|
| Student-Instructor Relationship (Hybrid) | | | |
| The instructors are concerned with the needs of his or her students | 0.73 | 0.904 | 0.916 |
| It’s not difficult for me to feel connected to my instructors | 0.773 | 0.901 | |
| I feel comfortable sharing my thoughts with my instructors | 0.73 | 0.904 | |
| I find it relatively easy to get close to my instructors | 0.799 | 0.899 | |
| It’s easy for me to connect with my instructors | 0.698 | 0.906 | |
| I am very comfortable feeling connected to a class or instructor | 0.492 | 0.919 | |
| I usually discuss my problems and concerns with my instructors | 0.668 | 0.908 | |
| I feel comfortable trusting my instructors. | 0.702 | 0.906 | |
| If I had a problem in my class, I know I could talk to my instructors. | 0.743 | 0.904 | |
| Student-Instructor Relationship (Online) | | | |
| The instructors are concerned with the needs of his or her students | 0.485 | 0.706 | 0.741 |
| It’s not difficult for me to feel connected to my instructors | 0.563 | 0.689 | |
| I feel comfortable sharing my thoughts with my instructors | 0.564 | 0.689 | |
| I find it relatively easy to get close to my instructors | 0.477 | 0.707 | |
| It’s easy for me to connect with my instructors | 0.337 | 0.73 | |
| I am very comfortable feeling connected to a class or instructor | 0.327 | 0.732 | |
| I usually discuss my problems and concerns with my instructors | 0.38 | 0.724 | |
| I feel comfortable trusting my instructors. | 0.256 | 0.742 | |
| If I had a problem in my class, I know I could talk to my instructors. | 0.34 | 0.73 | |

From the outcome, Student-Instructor Relationship (Hybrid) had the higher reliability coefficient (α = 0.916), followed by Student-Instructor Relationship (Online) (α = 0.741). Since both exceed 0.70, both sub-constructs were reliable. Further, all items had an item-total correlation coefficient of at least 0.30, except “I feel comfortable trusting my instructors” in the online sub-construct (0.256); this item was nonetheless retained, and no item was dropped.
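Applying the 0.30 cut-off mechanically to the reported values is an easy way to catch items that fall below it. A short Python sketch over the corrected item-total correlations of the online relationship sub-construct in Table 3:

```python
# Corrected item-total correlations, Student-Instructor Relationship (Online), Table 3.
online_item_total = {
    "The instructors are concerned with the needs of his or her students": 0.485,
    "It's not difficult for me to feel connected to my instructors": 0.563,
    "I feel comfortable sharing my thoughts with my instructors": 0.564,
    "I find it relatively easy to get close to my instructors": 0.477,
    "It's easy for me to connect with my instructors": 0.337,
    "I am very comfortable feeling connected to a class or instructor": 0.327,
    "I usually discuss my problems and concerns with my instructors": 0.38,
    "I feel comfortable trusting my instructors.": 0.256,
    "If I had a problem in my class, I know I could talk to my instructors.": 0.34,
}
MIN_ITEM_TOTAL = 0.30   # threshold cited from Pallant (2013) and Howitt & Cramer (2017)

below = [item for item, r in online_item_total.items() if r < MIN_ITEM_TOTAL]
print(below)   # ['I feel comfortable trusting my instructors.']
```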

Descriptive Statistics

Having confirmed the validity and reliability of the constructs and their respective items, this section explores the descriptive summaries of the constructs and sub-constructs. As mentioned earlier, a 5-point Likert scale ranging from 1 (Not at all) to 5 (Very much so) was used. Garson (2012) and Akinkunmi (2019) recommend the mean as the measure of central tendency and the standard deviation as the measure of dispersion. This section therefore explores the distribution of the responses based on the mean and standard deviation of each research item.

Summary Statistics - Student-Instructor Rapport

Because a 5-point Likert scale was used to evaluate this construct, the midpoint was 3.0. The summary statistics for the Student-Instructor Rapport construct are presented in Table 4 for both hybrid and online learning.
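The per-item summaries reported in Tables 4 and 5 can be sketched as follows, assuming raw 1-5 responses for an item are available as a list of integers. The function name `summarize_item` and the sample responses are hypothetical; the sample standard deviation (n - 1 denominator) is an assumption about how the reported SDs were computed.

```python
from statistics import mean, stdev

SCALE_MIDPOINT = 3.0  # midpoint of the 1-5 Likert scale described in the text


def summarize_item(responses):
    """Return (mean, sample SD, position relative to the scale midpoint) for one item."""
    m = mean(responses)
    sd = stdev(responses)  # n - 1 denominator (sample standard deviation)
    if m > SCALE_MIDPOINT:
        position = "above midpoint"
    elif m < SCALE_MIDPOINT:
        position = "below midpoint"
    else:
        position = "at midpoint"
    return round(m, 2), round(sd, 3), position


# Hypothetical responses to a single item (1 = Not at all ... 5 = Very much so).
print(summarize_item([5, 4, 4, 3, 5, 2, 4]))
```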

Table 4 Descriptive Statistics – Student-Instructor Rapport

| Item | N | Hybrid Mean | Hybrid SD | Online Mean | Online SD |
|---|---|---|---|---|---|
| Your instructor understands you | 283 | 3.57 | 1.331 | 3.71 | 1.157 |
| Your instructor encourages you | 283 | 3.65 | 1.272 | 4.07 | .963 |
| Your instructor cares about you | 283 | 3.19 | 1.426 | 4.17 | .924 |
| Your instructor treats you fairly | 283 | 3.49 | 1.319 | 4.16 | .911 |
| Your instructor communicates effectively with you | 283 | 3.38 | 1.318 | 3.90 | 1.057 |
| Your instructor respects you | 283 | 2.93 | 1.386 | 3.82 | 1.102 |
| Your instructor has earned your respect | 283 | 3.21 | 1.343 | 3.79 | 1.208 |
| Your instructor is approachable when you have questions or comments | 283 | 3.71 | 1.246 | 3.87 | 1.080 |
| In general, you are satisfied with your relationship with the instructor | 283 | 3.42 | 1.312 | 3.71 | 1.157 |

These findings show that every mean rating for Student-Instructor Rapport was higher for online learning than for hybrid learning. The highest online rating was for "Your instructor cares about you" (M=4.17; SD=0.924), compared with M=3.19 (SD=1.426) for hybrid learning. The second highest online rating was for "Your instructor treats you fairly" (M=4.16; SD=0.911), compared with M=3.49 (SD=1.319) for hybrid learning. The remaining items showed the same pattern.

Summary Statistics - Student-Instructor Relationship

For the student-instructor relationship, a 5-point Likert scale was again used, so the midpoint was 3.0. The summary statistics for the Student-Instructor Relationship construct are presented in Table 5 for both hybrid and online learning.

Table 5 Summary Statistics - Student-Instructor Relationship

| Item | N | Hybrid Mean | Hybrid SD | Online Mean | Online SD |
|---|---|---|---|---|---|
| The instructors are concerned with the needs of his or her students | 283 | 3.31 | 1.460 | 3.73 | 1.238 |
| It’s not difficult for me to feel connected to my instructors | 283 | 3.52 | 1.395 | 3.47 | 1.406 |
| I feel comfortable sharing my thoughts with my instructors | 283 | 3.45 | 1.459 | 3.31 | 1.377 |
| I find it relatively easy to get close to my instructors | 283 | 3.39 | 1.422 | 3.25 | 1.445 |
| It’s easy for me to connect with my instructors | 283 | 3.09 | 1.440 | 4.15 | .980 |
| I am very comfortable feeling connected to a class or instructor | 283 | 3.59 | 1.283 | 3.79 | 1.081 |
| I usually discuss my problems and concerns with my instructors | 283 | 3.78 | 1.280 | 4.19 | .960 |
| I feel comfortable trusting my instructors. | 283 | 3.24 | 1.347 | 4.01 | 1.074 |
| If I had a problem in my class, I know I could talk to my instructors. | 283 | 3.28 | 1.284 | 3.90 | 1.063 |

For Student-Instructor Relationship, six of the nine mean ratings were higher for online learning than for hybrid learning. The exceptions were "It’s not difficult for me to feel connected to my instructors" (hybrid M=3.52 vs. online M=3.47), "I feel comfortable sharing my thoughts with my instructors" (3.45 vs. 3.31) and "I find it relatively easy to get close to my instructors" (3.39 vs. 3.25), where hybrid ratings were slightly higher. The highest online rating was for "I usually discuss my problems and concerns with my instructors" (M=4.19; SD=0.960), compared with M=3.78 (SD=1.280) for hybrid learning. The second highest online rating was for "It’s easy for me to connect with my instructors" (M=4.15; SD=0.980), compared with M=3.09 (SD=1.440) for hybrid learning.
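To make the item-level comparison of Tables 4 and 5 reproducible, the sketch below transcribes the reported mean ratings (no new data) and, for each construct, counts how many items favor online over hybrid learning, lists any exceptions, and ranks the two highest online ratings. The names `RAPPORT`, `RELATIONSHIP` and `compare_items` are illustrative only.

```python
# Item means transcribed from Tables 4 and 5 as (item, hybrid mean, online mean).
RAPPORT = [
    ("Your instructor understands you", 3.57, 3.71),
    ("Your instructor encourages you", 3.65, 4.07),
    ("Your instructor cares about you", 3.19, 4.17),
    ("Your instructor treats you fairly", 3.49, 4.16),
    ("Your instructor communicates effectively with you", 3.38, 3.90),
    ("Your instructor respects you", 2.93, 3.82),
    ("Your instructor has earned your respect", 3.21, 3.79),
    ("Your instructor is approachable when you have questions or comments", 3.71, 3.87),
    ("In general, you are satisfied with your relationship with the instructor", 3.42, 3.71),
]
RELATIONSHIP = [
    ("The instructors are concerned with the needs of his or her students", 3.31, 3.73),
    ("It's not difficult for me to feel connected to my instructors", 3.52, 3.47),
    ("I feel comfortable sharing my thoughts with my instructors", 3.45, 3.31),
    ("I find it relatively easy to get close to my instructors", 3.39, 3.25),
    ("It's easy for me to connect with my instructors", 3.09, 4.15),
    ("I am very comfortable feeling connected to a class or instructor", 3.59, 3.79),
    ("I usually discuss my problems and concerns with my instructors", 3.78, 4.19),
    ("I feel comfortable trusting my instructors.", 3.24, 4.01),
    ("If I had a problem in my class, I know I could talk to my instructors.", 3.28, 3.90),
]


def compare_items(rows):
    """Split items by which mode has the higher mean and rank the top two online items."""
    online_higher = [item for item, hybrid, online in rows if online > hybrid]
    hybrid_higher = [item for item, hybrid, online in rows if hybrid > online]
    top_online = sorted(rows, key=lambda row: row[2], reverse=True)[:2]
    return online_higher, hybrid_higher, [item for item, _, _ in top_online]


for name, rows in (("Rapport", RAPPORT), ("Relationship", RELATIONSHIP)):
    online_higher, hybrid_higher, top_online = compare_items(rows)
    print(f"{name}: online higher on {len(online_higher)} of {len(rows)} items")
    for item in hybrid_higher:
        print(f"  hybrid higher: {item}")
    print(f"  top two online items: {top_online}")
```

Run as written, it reports online learning higher on all nine rapport items and on six of the nine relationship items, with three relationship items favoring hybrid learning.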

Inferential Testing

Having reviewed the item-level descriptive statistics, the study then tested whether students' perceptions differed between online and hybrid learning. The overall descriptive summaries for the two pairs are presented in Table 6.

Table 6 Summary Statistics - Overall

| Pair | Measure | Mean | N | SD | SE |
|---|---|---|---|---|---|
| Pair 1 | Student-instructor rapport - Hybrid | 3.39 | 283 | .973 | .058 |
| | Student-instructor rapport - Online | 3.95 | 283 | .675 | .040 |
| Pair 2 | Student-instructor relationship - Hybrid | 3.41 | 283 | 1.063 | .063 |
| | Student-instructor relationship - Online | 3.76 | 283 | .681 | .040 |

The results show that for student-instructor rapport, the overall mean rating was higher for online learning (M=3.95; SD=0.675) than for hybrid learning (M=3.39; SD=0.973). For student-instructor relationship, the overall mean rating was also higher for online learning (M=3.76; SD=0.681) than for hybrid learning (M=3.41; SD=1.063). Because both ratings came from the same students, the paired samples t-test was used rather than the independent samples t-test (Field, 2018) to test whether these differences were statistically significant; the results are presented in Table 7.
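The reason for choosing a paired test can be made concrete with a minimal sketch of the paired samples t statistic, which works on each student's difference score rather than on two independent groups. The function `paired_t` and the five-student data are hypothetical; ordering the arguments hybrid first mirrors Table 6, so a negative mean difference indicates higher online ratings.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Paired-samples t statistic for two ratings from the same respondents.

    Works on the per-respondent differences (first - second), so each student
    serves as their own control; returns (mean difference, SD, SE, t, df).
    """
    if len(first) != len(second):
        raise ValueError("paired samples need the same respondents in the same order")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    md = mean(diffs)
    sd = stdev(diffs)  # sample SD of the difference scores
    se = sd / sqrt(n)
    return md, sd, se, md / se, n - 1


# Hypothetical composite scores for five students (hybrid first, as in Table 6).
hybrid = [3.0, 4.0, 2.0, 5.0, 3.0]
online = [4.0, 4.0, 3.0, 5.0, 4.0]
md, sd, se, t, df = paired_t(hybrid, online)
print(f"MD = {md:.3f}, t({df}) = {t:.2f}")
```

Because each student contributes a single difference score, stable between-student differences in overall rating tendency cancel out, which is why a paired rather than an independent test suits a design in which the same students rated both modes.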

Table 7 Paired Samples T-Test

| Pair | Construct | Mean difference (Hybrid minus Online) | SD | SE | 95% CI Lower | 95% CI Upper | t | df | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|---|---|
| Pair 1 | SIRA | -.554 | 1.019 | .061 | -.673 | -.435 | -9.154 | 282 | .000 |
| Pair 2 | SIRE | -.350 | .812 | .048 | -.445 | -.255 | -7.244 | 282 | .000 |

With respect to student-instructor rapport, the mean paired difference (hybrid minus online) was MD=-0.554; t(282)=-9.15; p<0.001. Because the mean difference was negative and p was below 0.05, the ratings for online learning were higher than those for hybrid learning, and this difference was statistically significant. With respect to student-instructor relationship, the mean paired difference was MD=-0.350; t(282)=-7.24; p<0.001. Again, the negative difference and p below 0.05 indicate that online ratings were significantly higher than hybrid ratings. Overall, these findings confirm that students perceived online learning more favorably than hybrid learning on both constructs.
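As a consistency check, the standard errors, t values and confidence intervals in Table 7 can be rebuilt from the reported mean differences, their SDs and N = 283. The sketch below assumes SE = SD / sqrt(N) and t = MD / SE with df = N - 1; the helper `paired_summary` is hypothetical, and the interval uses the standard normal quantile from Python's `statistics.NormalDist` as a large-sample stand-in for the exact t quantile (roughly 1.960 vs. 1.968 at df = 282).

```python
from math import sqrt
from statistics import NormalDist

N = 283  # respondents per pair (Tables 6 and 7)


def paired_summary(mean_diff, sd_diff, n=N, conf=0.95):
    """Rebuild SE, t, df and an approximate CI from reported paired-difference summaries.

    The CI uses the normal quantile instead of the exact t quantile, which is a
    close approximation at this sample size.
    """
    se = sd_diff / sqrt(n)
    t = mean_diff / se
    z = NormalDist().inv_cdf(0.5 + conf / 2)
    return se, t, n - 1, (mean_diff - z * se, mean_diff + z * se)


# Reported paired differences (hybrid minus online) from Table 7.
for label, md, sd in (("SIRA", -0.554, 1.019), ("SIRE", -0.350, 0.812)):
    se, t, df, (lo, hi) = paired_summary(md, sd)
    print(f"{label}: SE = {se:.3f}, t({df}) = {t:.2f}, 95% CI [{lo:.3f}, {hi:.3f}]")
```

The rebuilt SEs and confidence bounds match Table 7 to three decimals; the small differences in t (for example -7.25 against the reported -7.244) are consistent with the mean differences having been rounded before reporting.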

Implications & Limitations

Following the rationale above, there are a few limitations that could be addressed in future research. First, the current study recruited students from only three private universities in Kuwait; future research should engage participants from a wider range of universities to better understand how universal these rapport relationships are. Second, since many instructors teach both online and face-to-face, future research should compare instructors' rapport-related experiences across both instructional modalities to gain more insight. Furthermore, although the results of this study can help identify the most effective teaching approach, future studies should account for contextual differences between institutions by drawing on a broader sampling base and ensuring greater consistency among the stakeholders involved.

Conclusion

This paper sought to present the key findings regarding the sentiments and perceptions of higher education students towards hybrid and online classes, with a view to establishing the most effective teaching approach. To achieve this, the SIRS-9 survey was used to evaluate two major constructs: Student-Instructor Relationship (SIRE) and Student-Instructor Rapport (SIRA). Participants rated their perceptions on each construct with respect to both online learning and hybrid learning. Descriptive summaries showed that online learning received higher mean ratings than hybrid learning on all nine rapport items and on six of the nine relationship items. In aggregate, the overall mean ratings of both Student-Instructor Relationship and Student-Instructor Rapport were higher for online learning than for hybrid learning, and paired samples t-tests showed that both differences were statistically significant. Thus, it can be concluded that, from the students' perspective, online learning was more effective and successful than hybrid learning in fostering student-instructor rapport and relationships.

References

Ahmad, W.M.A.B.W. (2018). Sample Size Calculation Made Easy Using G*Power. Penerbit USM, Malaysia.

Akinkunmi, M.A. (2019). Business Statistics Using R. De|G Press, San Rafael.

Al Otaibi, Y., Diab-Bahman, R., & Aftimos, S. (2022). Student wellbeing & overall performance in higher education: A study of undergraduate students in Kuwait. Journal of Positive Psychology & Wellbeing, 6(1), 2221-2237.

Alexander & P.H. Winne (Eds.) Handbook of Educational Psychology (655-674). Mahwah, NJ, US: Lawrence Erlbaum Associates Publishers.

Ali, W. (2020). Online and remote learning in higher education institutes: A necessity in light of COVID-19 pandemic. Higher education studies, 10(3), 16-25.

Allen, I.E., & Seaman, J. (2011). Going the distance: Online education in the United States, 2011. Babson Survey Research Group and Quahog Research Group, LLC.

Allen, I.E., Seaman, J., Lederman, D., & Jaschik, S. (2011). Conflicted: Faculty and online education, 2012. Babson Survey Research Group and Quahog Research Group, LLC.

Altman, I. (1990). Conceptualizing “Rapport.” Psychological Inquiry, 1(4), 294–297.

Arbuckle, J.L. (2008). Amos (Version 17.0.2) [Computer Program]. Chicago: SPSS.

Bartolucci, F., Bacci, S., & Gnaldi, M. (2016). Statistical analysis of questionnaires: A unified approach based on R and Stata. CRC Press, Boca Raton, FL.

Battistich, V., Solomon, D., Watson, M., & Schaps, E. (1997). Caring school communities.

Beins, B.C. (2011). A brief stroll down random access memory lane: Implications for teaching with technology. In D. S. Dunn, J.H. Wilson, J.E. Freeman, & J.R. Stowell (Eds.) Best Practices for Technology-Enhanced Teaching and Learning, 35-51. New York: Oxford University Press.

Belhekar, V.M. (2016). Statistics for Psychology Using R. Thousand Oaks, CA: Sage.

Benson, T.A., Cohen, A.L., & Buskist, W. (2005). Rapport: Its relation to student attitudes and behaviors toward teachers and classes. Teaching of Psychology, 32(4), 237–239.

Bergin, C., & Bergin, D. (2009). Attachment in the classroom. Educational Psychology Review, 21, 141-170.

Bernieri, F.J. (1998). Coordinated movement and rapport in teacher student interaction. Journal of Nonverbal Behavior, 12(2), 120–138.

Best Practices for Technology-Enhanced Teaching and Learning, 53-71. New York: Oxford University Press.

Bryman, A., & Bell, E. (2015). Business Research Methods. Oxford University Press, Oxford, New York.

Buskist, W., & Saville, B.K. (2001). Rapport-building: Creating positive emotional contexts for enhancing teaching and learning. APS Observer, 14(3), 12-13.

Buskist, W., Sikorski, J., Buckley, T., & Saville, B.K. (2002). Elements of master teaching. In S.F. Davis & W. Buskist (Eds.), The Teaching of Psychology: Essays in Honor of Wilbert.

Byrne, B.M. (2016). Structural equation modeling with AMOS: Basic concepts, applications, and programming (3rd ed.). Mahwah, NJ: Erlbaum.

Cappelleri, J., Lundy, J.J., & Hays, R. (2022). Overview of classical test theory and item response theory for the quantitative assessment of items in developing patient-reported outcomes measures.

Clark, R.K., Walker, M., & Keith, S. (2002). Experimentally assessing the student impacts of out-of-class communication: Office visits and the student experience. Journal of College Student Development, 43, 824-837.

Clark-Ibanez, M., & Scott, L. (2008). Learning to Teach Online. Teaching Sociology, 36, 34–41.

Clauser, B.E. (2007). The life and labors of Francis Galton: A review of four recent books about the father of behavioral statistics. Journal of Educational and Behavioral Statistics, 32(4), 440–444.

Costin, C., & Coutinho, A. (2022). Experiences with risk-management and remote learning during the COVID-19 pandemic in Brazil: Crises, destitutions, and (possible) resolutions. In Secondary Education during Covid-19 Disruptions to Educational Opportunity during a Pandemic. Springer.

Creasey, G., Jarvis, P., & Gadke, D. (2009). Student attachment stances, instructor immediacy, and student-instructor relationships as predictors of achievement expectancies in college students. Journal of College Student Development, 50, 353-372.

Creasey, G., Jarvis, P., & Knapcik, E. (2009). A measure to assess student-instructor relationships. International Journal for the Scholarship of Teaching and Learning, 3, 1-7.

del Rosario, M.Jr. N., & dela Cruz, R.A. (2022). Perception on the online classes challenges experienced during the COVID-19 pandemic by LSPU computer studies students. International Journal of Learning, Teaching and Educational Research, 21(2), 268–284.

Diab-Bahman, R. (2021). VLEs in a post-COVID world: Kuwait's universities. In Challenges and Opportunities for the Global Implementation of E-Learning Frameworks, 265-283. IGI Global.

Diab-Bahman, R., & Al-Enzi, A. (2020). The impact of COVID-19 pandemic on conventional work settings. International Journal of Sociology and Social Policy.

Diab-Bahman, R., Al-Enzi, A., Sharafeddine, W., & Aftimos, S. (2021). The effect of attendance on student performance: implications of using virtual learning on overall performance. Journal of Applied Research in Higher Education, ahead-of-print.

Dowling, C., Godfrey, J.M., & Gyles, N. (2003). Do hybrid flexible delivery teaching methods improve accounting students’ learning outcomes? Accounting Education, 12(4), 373–391.

Dunn, D.S., Wilson, J.H., & Freeman, J.E. (2011). Approach or avoidance? Understanding technology’s place in teaching and learning. In D.S. Dunn, J.H. Wilson, J.E. Freeman, & J.R. Stowell (Eds.) Best Practices for Technology-Enhanced Teaching and Learning, 17-34. New York: Oxford University Press. Educational Psychologist, 32, 137-151.

Field, A.P. (2018). Discovering statistics using SPSS. London, England: SAGE.

Flanigan, A.E., Akcaoglu, M., & Ray, E. (2022). Initiating and maintaining student-instructor rapport in online classes. Internet and Higher Education, 53.

Freeman, D., & Johnson, K.E. (1998). Reconceptualizing the knowledge-base of language teacher education. TESOL Quarterly, 32(3).

Frisby, B.N., & Buckner, M.M. (2018). Rapport in the instructional context. In M.L. Houser & A.M. Hosek (Eds.), Handbook of instructional communication: Rhetorical and relational perspectives, 2nd ed, 126–137. Routledge.

Frisby, B.N., & Martin, M.M. (2010a). Instructor-student and student-student rapport in the classroom. Communication Education, 59(2), 146–164.

Frisby, B.N., & Martin, M.M. (2010b). Instructor-student and student-student rapport in the classroom. Communication Education, 59(2), 146–164.

Fullmer, S., & Daniel, D. (2022). Psychometrics. [online] Edtechbooks.org.

Garson, G.D. (2012). Testing statistical assumptions. Asheboro, NC: Statistical Associates Publishing.

Gillespie, M. (2005). Student-teacher connection: A place of possibility. Journal of Advanced Nursing, 52, 211-219.

Glazier, R.A. (2016a). Building rapport to improve retention and success in online classes. Journal of Political Science Education, 12(4), 437–456.

Glazier, R.A. (2016b). Building rapport to improve retention and success in online classes. Journal of Political Science Education, 12(4), 437–456.

Granitz, N.A., Koernig, S.K., & Harich, K.R. (2009). Now it’s personal: Antecedents and outcomes of rapport between business faculty and their students. Journal of Marketing Education, 31, 52-65.

Groenen, P.J.F., & van der Ark, L.A. (2006). Visions of 70 years of psychometrics: The past, present, and future. Statistica Neerlandica, 60(2), 135–144.

Gurung, R.A.R., Daniel, D.B., & Landrum, R.E. (2012). A multisite study of learning in introductory psychology courses. Teaching of Psychology, 39, 170-175.

Hapke, H., Lee-Post, A., & Dean, T. (2021). 3-in-1 Hybrid learning environment. Marketing Education Review, 31(2), 154–161.

Hattie, J.A.C. (2009). Visible learning: A synthesis of over 800 Meta-analyses relating to achievement. New York: Routledge.

Hays, R.D., Morales, L.S., & Reise, S.P. (2000). Item response theory and health outcomes measurement in the 21st century. Medical Care, 38(9 Suppl), II28-II42.

Helms, J.L., Marek, P., Randall, C.K., Rogers, D.T., Taglialatela, L.A., & Williamson, A.L. (2011). Developing an online curriculum in psychology: Practical advice from a departmental initiative. In D.S. Dunn, J.H. Wilson, J. E. Freeman, & J.R. Stowell (Eds.)

Hoerunnisa, A., Suryani, N., & Efendi, A. (2019). The effectiveness of the use of e-learning in multimedia classes to improve vocational students’ learning achievement and motivation. Kwangsan: Journal of Educational Technology, 7(2), 123.

Howitt, D., & Cramer, D. (2017). Understanding statistics in psychology with SPSS. Boston: Pearson Higher Education.

Hoyle, R.H. (Ed.). (2012). Handbook of structural equation modeling. New York: Guilford Press.

Hwang, A. (2018). Online and hybrid learning. Journal of Management Education, 42(4), 557-563.

Intorcia, E. (2021). Educational (di)stances: Reimagining ELT in hybrid learning-teaching environments. 2, 50–63.

McKeachie, J., & Charles L. Brewer, 27–39. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

Jackson, D.L., Gillaspy, J.A., & Purc-Stephenson, R. (2009). Reporting practices in confirmatory factor analysis: An overview and some recommendations. Psychological Methods, 14(1), 6-23.

Jamison, A., Kolmos, A., & Holgaard, J.E. (2014). Hybrid Learning: An integrative approach to engineering education. Journal of Engineering Education, 103(2), 253–273.

Jones, J.S. (1993). The Galton Laboratory, University College London. In M. Keynes (Ed.), Sir Francis Galton, FRS. Studies in Biology, Economy and Society. Palgrave Macmillan, London.

Jones, L.V., & Thissen, D. (2007). A history and overview of psychometrics. Psychometrics, 26, 1–27.

Juvonen, J. (2006). Sense of belonging, social bonds, and school functioning. In P. A.

Kaufmann, R., & Vallade, J.I. (2020). Exploring connections in the online learning environment: student perceptions of rapport, climate, and loneliness. Interactive Learning Environments.

Kavrayici, C. (2021). The relationship between classroom management and sense of classroom community in graduate virtual classrooms. Turkish Online Journal of Distance Education, 22(7), 112–125.

Kolb, A.Y., & Kolb, D.A. (2005). Learning styles and learning spaces: Enhancing experiential learning in higher education. Academy of Management Learning & Education, 4(2).

Voelkl, K.E. (1995). School warmth, student participation, and achievement. The Journal of Experimental Education, 63(2), 127–138.

Kupczynski, L., Ice, P., Wiesenmayer, R., & McCluskey, F. (2010). Student perceptions of the relationship between indicators of teaching presence and success in online courses. Journal of Interactive Online Learning, 9, 23-43.

Lammers, W.J. (2012). Student-Instructor rapport: Teaching style, communication, and academic success. Presented at Southwestern Psychological Association, Oklahoma City, OK.

Lammers, W.J., & Gillaspy Jr., J.A. (2013). Brief measure of student-instructor rapport predicts student success in online courses. International Journal for the Scholarship of Teaching and Learning, 7(2), 16.

Lammers, W.J., Gillaspy Jr, J.A., & Hancock, F. (2017). Predicting academic success with early, middle, and late semester assessment of student–instructor rapport. Teaching of Psychology, 44(2), 145-149.

Ledford, J.R., & Gast, D.L. (2018). Single case research methodology: Applications in special education and behavioral sciences. New York, NY: Routledge.

Legg, A.M., & Wilson, J.H. (2009). E-mail from professor enhances student motivation and attitudes. Teaching of Psychology, 36, 205-211.

Martin, F., & Bolliger, D.U. (2018). Engagement matters: Student perceptions on the importance of engagement strategies in the online learning environment. Online Learning Journal, 22(1), 205–222.

Maydeu-Olivares, A., Cai, L., & Hernández, A. (2011). Comparing the fit of item response theory and factor analysis models. Structural Equation Modeling: A Multidisciplinary Journal, 18(3), 333–356.

Meyers, S.A. (2008). Working alliances in college classrooms. Teaching of Psychology, 35, 29-32.

Miller, M.D., Linn, R.L., & Gronlund, N. (2013). Reliability. In J.W. Johnston, L. Carlson, & P.D. Bennett (Eds.), Measurement and assessment in teaching, 11th ed, 108-137. Pearson.

Miller, M.D., Linn, R.L., & Gronlund, N. (2013). Validity. In J.W. Johnston, L. Carlson, & P.D. Bennett (Eds.), Measurement and assessment in teaching, 11th ed, 70-107. Pearson.

Mishra, L., Gupta, T., & Shree, A. (2020). Online teaching-learning in higher education during lockdown period of COVID-19 pandemic. International Journal of Educational Research Open, 1, 100012.

Miyah, Y., Benjelloun, M., Lairini, S., & Lahrichi, A. (2022a). Covid-19 impact on public health, environment, human psychology, global socioeconomy, and education. In Scientific World Journal, 2022. Hindawi Limited.

Miyah, Y., Benjelloun, M., Lairini, S., & Lahrichi, A. (2022b). Covid-19 impact on public health, environment, human psychology, global socioeconomy, and education. In Scientific World Journal, 2022. Hindawi Limited.

Moore, J.L., Dickson-Deane, C., & Galyen, K. (2011). E-Learning, online learning, and distance learning environments: Are they the same? Internet and Higher Education, 14(2), 129–135.

Moore, M.G., & Kearsley, G. (2012). Distance education: A systematic view of online learning (3rd Edition). Belmont, CA: Wadsworth Cengage Learning.

Muilenburg, L.Y., & Berge, Z.L. (2005). Student barriers to online learning: A factor analytic study. Distance Education, 26(1), 29–48.

Murphy, E., & Rodríguez-Manzanares, M. (2012). Rapport in distance education. International Review of Research in Open & Distance Learning, 13, 167-190.

Muthén, L.K., & Muthén, B.O. (2017). Mplus user’s guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Myers, S.A. (2004). The relationship between perceived instructor credibility and college student in-class and out-of-class communication. Communication Reports, 17, 129-137.

Myers, S.A., & Claus, C.J. (2012). The relationship between students’ motives to communicate with their instructors and classroom environment. Communication Quarterly, 60(3), 386–402.

Myers, S.A., Martin, M.M., & Knapp, J.L. (2005). Perceived instructor in-class communicative behaviors as a predictor of student participation in out of class communication. Communication Quarterly, 53, 437-450.

Nelson, R.M., & Debacker, T.K. (2008). Achievement motivation in adolescents: The Role of Peer Climate and Best Friends. The Journal of Experimental Education, 76(2).

Newsom, J.T. (2018). Minimum Sample Size Recommendations. Psy 523/623. Structural Equation Modeling, Spring 2018.

Nunes, M.B., & Isaias, P. (2018). Proceedings of the international conference E-learning 2018, Madrid, Spain. IADIS Press.

Pallant, J. (2013). SPSS Survival Manual: A step by step guide to data analysis using SPSS (4th edition). Allen & Unwin.

Park, E., Martin, F., & Lambert, R. (2019). Examining Predictive Factors for Student Success in a Hybrid Learning Course. The Quarterly Review of Distance Education, 20(2), 11–27.

Park, M.Y. (2016). Integrating rapport-building into language instruction: A study of Korean foreign language classes. Classroom Discourse, 7(2), 109–130.

Pascarella, E.T. & Terenzini, P.T. (2001). Student-faculty and student-peer relationships as mediators of the structural effects of undergraduate residence arrangement. Journal of Educational Research, 73, 344-353.

Prion, S.K., Gilbert, G.E., & Haerling, K.A. (2016). Generalizability theory: An introduction with application to simulation evaluation. Clinical Simulation in Nursing, 12(12), 546–554.

Raes, A. (2022). Exploring student and teacher experiences in hybrid learning environments: Does presence matter? Postdigital Science and Education, 4(1), 138–159.

Raes, A., Detienne, L., Windey, I., & Depaepe, F. (2020). A systematic literature review on synchronous hybrid learning: Gaps identified. Learning Environments Research, 23(3), 269-290.

Rapanta, C., Botturi, L., Goodyear, P., Guàrdia, L., & Koole, M. (2020). Online university teaching during and after the covid-19 crisis: Refocusing teacher presence and learning activity. Postdigital Science and Education, 2(3), 923–945.

Reio Jr., T.G., Marcus, R.F., & Sanders-Reio, J. (2009). Contribution of student and instructor relationships and attachment style to school completion. The Journal of Genetic Psychology, 170, 53-71.

Reis-Bergan, M., Baker, S.C., Apple, K.J., & Zinn, T.E. (2011). Faculty-student communication: Beyond face to face. In D.S. Dunn, J.H. Wilson, J.E. Freeman, & J.R. Stowell (Eds.) Best Practices for Technology-Enhanced Teaching and Learning, 73-85. New York: Oxford University Press.

Sher, A. (2009). Assessing the relationship of student-instructor and student-student interaction to student learning and satisfaction in web-based online learning environment. Journal of Interactive Online Learning, 8, 102-120.

Siragusa, L. (2002). Research into the effectiveness of online learning in higher education: Survey findings. In Proceedings of the Western Australian Institute for Educational Research Forum.

Spearman, C. (1904). "General intelligence," objectively determined and measured. The American Journal of Psychology, 15(2), 201-292.

Spencer-Oatey, H. (2008). Culturally speaking: Culture, communication and politeness theory. Continuum International Publishing Group.

Sull, E. (2006). Establishing a solid rapport with online students. Online Classroom, 6-7.

Sybing, R. (2019). Making Connections: Student-Teacher Rapport in Higher Education Classrooms. Journal of the Scholarship of Teaching and Learning, 19(5).

Thompson, B. (2018). Exploratory and confirmatory factor analysis: Understanding concepts and applications. Washington, DC: American Psychological Association.

Wang, J., & Wang, X. (2012). Structural equation modeling.

Webb, H.W., Gill, G., & Poe, G. (2005a). Teaching with the case method online: Pure versus hybrid approaches. Decision Sciences Journal of Innovative Education, 3.

Webb, H.W., Gill, G., & Poe, G. (2005b). Teaching with the case method online: Pure versus hybrid approaches. Decision Sciences Journal of Innovative Education, 3.

Wentzel, K.R. (2009). Students’ relationships with teachers as motivational contexts. In K. R. Wentzel, & A. Wigfield, (Eds.) Handbook of Motivation at School, 301-322. New York, NY, US: Routledge/Taylor & Francis Group.

Wigfield, A., Cambria, J., & Eccles, J.S. (2012). Motivation in education. In R.M. Ryan (Ed.). The Oxford Handbook of Human Motivation, 463-478. New York, NY, US: Oxford University Press.

Wilson, J.H., & Locker Jr., L. (2007). Immediacy scale represents four factors: Nonverbal and verbal components predict student outcomes. Journal of Classroom Interaction, 42, 4-10.

Wilson, J.H., & Wilson, S.B. (2007). The first day of class affects student motivation: An experimental study. Teaching of Psychology, 34, 226-230.

Wilson, J.H. (2006). Predicting student attitudes and grades from perceptions of instructor’s attitudes. Teaching of Psychology, 33, 91-95.

Wilson, J.H., & Ryan, R.G. (2012). Developing student-teacher rapport in the undergraduate classroom. In W. Buskist & V. Benassi (Eds.) Effective College and University Teaching: Strategies and Tactics for the New Professoriate, 81-89. Thousand Oaks, CA, US: Sage Publications, Inc.

Wilson, J.H., & Ryan, R.G. (2013). Professor–student rapport scale: Six items predict student outcomes. Teaching of Psychology, 40(2), 130-133.

Wilson, J.H., Naufel, K.Z., & Hackney, A.A. (2011). A social look at student-instructor interactions. In D. Mashek & E. Yost Hammer (Eds.), Empirical Research in Teaching and Learning: Contributions from Social Psychology, 32-50. Wiley-Blackwell Publishing Ltd.

Wilson, J.H., Ryan, R.G., & Pugh, J.L. (2010). Professor-student rapport scale predicts student outcomes. Teaching of Psychology, 37, 246-251.

Wilson, J.H., Smalley, K.B., & Yancey, C.T. (2012). Building relationships with students and maintaining ethical boundaries. In R. E. Landrum & M. A. McCarthy (Eds.) Teaching Ethically: Challenges and Opportunities, 139-150. Washington, DC, US: American Psychological Association.

Wilson, J.H., Wilson, H.B., & Legg, A.M. (2012). Building rapport in the classroom and student outcomes. In Schwartz, B. M. & Gurung, R. A. R. (Eds.). Evidence-Based Teaching for Higher Education, 23-37. American Psychological Association: Washington, D.C.

Wu, M., Tam, H.P., & Jen, T.H. (2016). Classical test theory. Educational Measurement for Applied Researchers, 73–90. Springer.

Wywial, J.L. (2015). Sampling designs dependent on sample parameters of auxiliary variables. Springer, New York

Xiao, J., Han, C.M., & Yuan, C. (2008). Middle Cambrian to Permian subduction-related accretionary orogenesis of Northern Xinjiang, NW China: implications for the tectonic evolution of central Asia. Journal of Asian Earth Sciences.

Xiao, J., Sun-Lin, H.Z., Lin, T.H., Li, M., Pan, Z., & Cheng, H.C. (2020). What makes learners a good fit for hybrid learning? Learning competences as predictors of experience and satisfaction in hybrid learning space. British Journal of Educational Technology, 51(4), 1203-1219.

Yang, Z. (2020). A case for hybrid learning: Using a hybrid model to teach advanced academic reading. ORTESOL Journal, 37.

Zahra, R., & Sheshasaayee, A. (2022). Challenges identified for the efficient implementation of the hybrid E- learning model during COVID-19, 1–5.

Zitter, I. (2012). Hybrid learning environments: Merging learning and work processes to facilitate knowledge integration and transitions.

Received: 17-May-2022, Manuscript No. JMIDS-22-12123; Editor assigned: 20-May-2022, PreQC No. JMIDS-22-12123 (PQ); Reviewed: 04-June-2022, QC No. JMIDS-22-12123; Revised: 11- June -2022, Manuscript No. JMIDS-22-12123 (R); Published: 17-June-2022