Review Article: 2023 Vol: 27 Issue: 3

Chat GPT in HR - Some Dark Shades Too?

Manju Nair, International School of Informatics & Management

Citation Information: Nair, M. (2023). Chat GPT in HR - Some Dark Shades Too? Journal of Organizational Culture Communications and Conflict, 27(3), 1-5.

Abstract

There’s no doubt that technology such as Chat GPT is set to disrupt and reshape the working world, and HR is no exception. By speeding up processes, cutting administrative time and making people’s working lives easier, the tool leaves HR with more time to focus on areas where it can add value. Chat GPT helps create a solid first draft that provides an easier starting point than a blank screen. But there is a problem: the source of its output cannot be retrieved, so is it authentic? Moreover, getting meaningful answers to HR’s typical questions would mean feeding in even more detailed information. Is HR ready to share such sensitive information, given that doing so would mean handing it to Chat GPT to process, possibly for somebody else to copy, paste and share? It therefore becomes important for HR to think about potential inappropriate use in its specific context and to sensitize employees as to why they should not upload personal data. Based on a mini-review, the paper reflects on the aspects HR needs to keep in mind while using Chat GPT in its processes.

Keywords

Chat GPT, HR, NLP, Common Sense.

Introduction

The HR department plays a crucial role in managing people and is responsible for the engagement, productivity, and motivation of the company’s workforce. While recruiting new talent and managing employee benefits and compensation, HR has to deal with many mundane tasks that could be carried out more efficiently with the help of technology. HR processes can become more efficient and effective by leveraging AI tools like Chat GPT, which can help streamline processes, automate repetitive tasks, provide real-time employee support and enhance the overall employee experience (Marr, 2023).

With language models and AI set to disrupt different HR roles, as well as bring them new opportunities and threats, it becomes vital for HR to understand the far-reaching capabilities of Chat GPT, as the tool is probably here to stay and will continue to advance and improve (Marr, 2023).

Chat GPT is a natural language processing model developed by OpenAI and built on its GPT family of transformer-based language models. Following a transfer learning approach, Chat GPT was fine-tuned with supervised and reinforcement learning on top of the GPT-3 family of large language models developed by OpenAI (George & George, 2023).

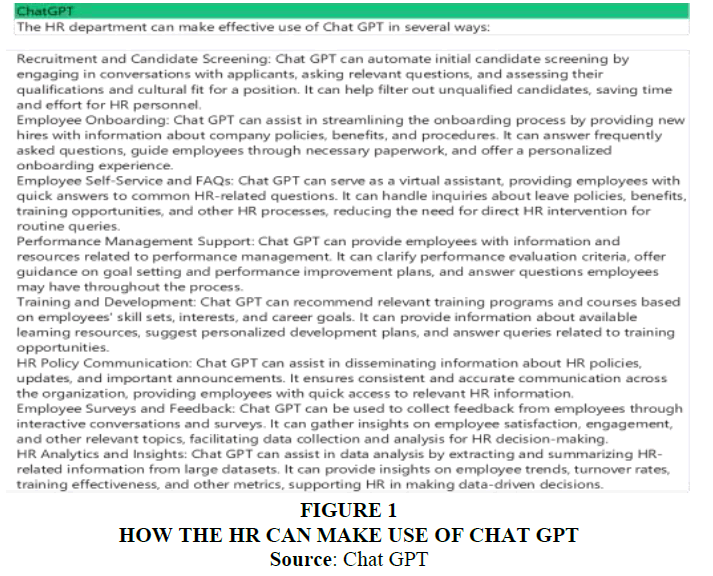

Chat GPT has many potential applications in HR, including recruitment and candidate screening; employee self-service and FAQs; performance management support; training and development; HR policy communication; employee surveys and feedback; and HR analytics and insights.

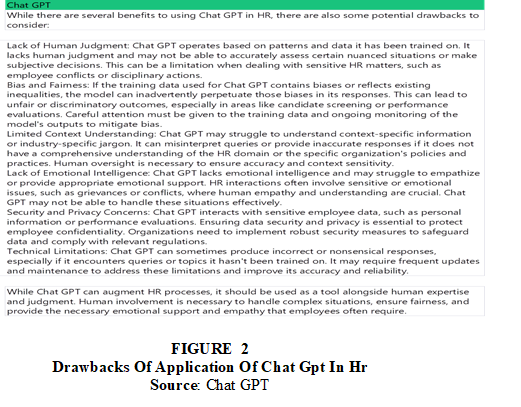

Figure 1 shows the tool’s own answer to a question about the application of Chat GPT in HR, while Figure 2 shows the drawbacks of these applications as described by the tool.

The major drawbacks of Chat GPT’s application in HR decisions and processes include a lack of discretionary judgment; an inability to mitigate biases, especially during performance evaluation; a lack of context sensitivity; a lack of human empathy; and, in some cases, a lack of accuracy and reliability.

Many procedures and tools have been created to ensure that biases do not affect users, such as the European Union’s AI Ethics Guidelines and AI Fairness 360 (Ferrara, 2023). The creators of ChatGPT have also secured the model against offensive answers, but many hidden biases remain (Kocoń et al., 2023). Since HR deals with managing people, which often calls for situational judgment, especially during conflicts or disciplinary proceedings, the tool needs to be used judiciously.

The paper critically evaluates the various uses of Chat GPT in HR. In doing so, it highlights the applications of Chat GPT in HR, evaluates the drawbacks, and discusses why HR has to be cautious while using the tool.

Methodology

With the objective of determining how HR professionals can use Chat GPT in their various processes, and of critically evaluating the tool’s applications, the researcher conducted a mini-review. The search terms included ‘Chat GPT and HR’, ‘Chat GPT’, and ‘commonsense and HR’, and were applied across databases including Web of Science, Scopus, Google Scholar, ProQuest, and EBSCO.

While there was considerable work analyzing Chat GPT from a technical perspective and a research perspective, highlighting its challenges and, to an extent, its application in healthcare, marketing, and education, very few papers looked specifically at how HR can leverage the tool. Hence the researcher drew on papers from a broader area to reach a conclusion. The findings cover what HR needs to watch out for while using the tool so that it can better leverage Chat GPT in its processes.

Results

Meaningfulness lies at the core of the most significant areas of research in human resource development and management (Lips-Wiersma et al., 2022); in the context of work, it represents a pragmatic and moral concern for systems, workers, and organizations (Yeoman et al., 2019). Its multiple positive outcomes extend beyond the individual level to the organizational level (Allan et al., 2019).

Chat GPT has a limited understanding of context and limited emotional intelligence. This inhibits its context sensitivity and accuracy, as well as its ability to extend emotional support where needed, something HR is generous with. Language models are also prone to generating responses that contain human-like biases and that present moral and ethical stances (Schramowski et al., 2022).

ChatGPT is an inexperienced commonsense problem solver: it cannot precisely identify the commonsense knowledge needed to answer a specific question. The capability of computers to understand and interact with humans in a way that lets them form representations of human language and meaning, and thereby solve higher-level NLP tasks, is referred to as ‘commonsense reasoning’; it is one of the particularly challenging aspects of AI, and of NLP specifically (Saeedi, 2023). The Macquarie Concise Dictionary (1988:185) defines “common sense” as a “sound, practical perception or understanding”. Better mechanisms for utilizing commonsense knowledge in LLMs, such as instruction following and better commonsense guidance, therefore need to be explored (Bian et al., 2023), particularly as HR involves dealing with people.

People generally assume that others have reasoning ability and practical knowledge in everyday situations, but reproducing this in a machine requires both natural language understanding and commonsense reasoning, which is considered one of the most challenging problems in the field of NLP.

Discussion

HR can use the tool to frame interview questions for particular roles, create email templates for communicating with candidates, or even draft an employment contract. In recruitment, the tool can be used to write job descriptions, to write generic content about job openings for social media and company websites, and to create standard operating procedures for hiring.

Given unambiguous instructions specific to the role, and statements that look exclusively at the requirements of the role, Chat GPT can successfully create scientific questions as well as give clues on what the interviewer should look for, thereby supporting unbiased interview preparation. The candidate’s answers to the interview questions can also form part of the input used to arrive at an unbiased decision. The tool can also be used for employee engagement, designing training materials, generating FAQs, designing training plans, and writing scripts for presentations or for better communication (during onboarding and beyond).

The uses are mainly in speeding up writing, research and content creation. Work that is generally time-consuming and repetitive can now be done in minutes, giving HR professionals more bandwidth to focus on the people management challenges of the job.

Though it is a time-saving tool, it does not generate a final product that can prioritize concerns, build positive relations or decide the significance of the work, all of which signify meaningfulness. Meaningful work is a positive experience associated with a sense of competence, the presence of positive relations with others, the significance and purpose of work, and the prioritization of concerns based on common sense (Tommasi et al., 2023).

Conclusion

But the question remains as to what extent the information provided is correct. Can the AI reliably tell the difference between a reliable source and the opinions or tweets shared on social media? Isn’t this enough of a cause for biases to creep in?

The tool cannot explain why it wrote what it wrote or where it got the information from, making it a ‘black box’. So the time saved becomes time lost, as HR has to cross-check large amounts of information. Moreover, while answering questions on company benefits and policies, pay gaps, and employee happiness, analyzing employee sentiment, and helping reduce attrition, it can only leverage data that is publicly available.

So if HR needs answers to typical questions, say whether the employees of company X are happy, the answer will be a generalized one. For a company that does not have much information in the public domain, the answer will be based on whatever data is available and will not necessarily address the question, or it will simply be an apology. Getting useful answers to these typical questions would therefore mean supplying significant amounts of HR’s own data, much of which is highly sensitive, and sharing it could breach privacy laws. The very thought sets alarm bells ringing, as it is a huge risk and a red flag. It therefore becomes important for HR to think about potential inappropriate use in its specific context and to sensitize employees as to why they should not upload personal data.
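As an illustration of the kind of safeguard this implies, the sketch below strips obvious personal identifiers from a prompt before it is pasted into a chat tool. It is a minimal sketch and not part of the reviewed literature: the `redact` function, the two patterns and the sample prompt are hypothetical, and real personal data takes many more forms than a regular expression can catch.

```python
import re

# Hypothetical patterns, for illustration only: they cover simple e-mail
# addresses and phone-like digit runs, not names, IDs or free-text details.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> str:
    """Replace every match of each pattern with a bracketed placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


# Made-up example prompt containing personal data.
prompt = (
    "Draft a reply to priya@example.com, phone +91 98765 43210, "
    "about her leave request."
)
safe_prompt = redact(prompt)
print(safe_prompt)
```

A filter like this only reduces accidental disclosure; it does not replace the judgment HR needs to exercise about what should be shared at all.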

But all said and done, Chat GPT can create a solid first draft that provides an easier starting point than a blank screen. With the Writers Guild of America considering allowing its use in screenwriting, HR too can use the tool in processes including screening, onboarding, communication, virtual assistance, goal setting, and extending training opportunities. But it is still essential to review information from Chat GPT for accuracy before copying, pasting, and sharing the results.

There’s no doubt that technology such as Chat GPT is set to disrupt and reshape the working world, and HR is no exception. But no matter how good the technology is, it is no replacement for people. HR deals with heads, hands and heart. Though technology can take on the work of heads and hands, the heart remains to be replaced, and for that HR remains the answer.

References

Allan, B.A., Batz-Barbarich, C., Sterling, H.M., & Tay, L. (2019). Outcomes of meaningful work: A meta-analysis. Journal of Management Studies, 56(3), 500-528.

Bian, N., Han, X., Sun, L., Lin, H., Lu, Y., & He, B. (2023). ChatGPT is a knowledgeable but inexperienced solver: An investigation of commonsense problem in large language models.

Ferrara, E. (2023). Should ChatGPT be biased? Challenges and risks of bias in large language models.

George, A.S., & George, A.H. (2023). A review of ChatGPT AI's impact on several business sectors. Partners Universal International Innovation Journal, 1(1), 9-23.

Kocoń, J., Cichecki, I., Kaszyca, O., Kochanek, M., Szydło, D., Baran, J., ... & Kazienko, P. (2023). ChatGPT: Jack of all trades, master of none. Information Fusion, 101861.

Lips-Wiersma, M., Bailey, C., Madden, A., & Morris, L. (2022). Why We Don't Talk About Meaning at Work. MIT Sloan Management Review, 63(4), 33-38.

Marr, B. (2023). The 7 Best Examples Of How ChatGPT Can Be Used In Human Resources (HR).

Saeedi, S. (2023). Socially Aware Natural Language Processing with Commonsense Reasoning and Fairness in Intelligent Systems.

Schramowski, P., Turan, C., Andersen, N., Rothkopf, C.A., & Kersting, K. (2022). Large pre-trained language models contain human-like biases of what is right and wrong to do. Nature Machine Intelligence, 4(3), 258-268.

Tommasi, F., Sartori, R., & Ceschi, A. (2023). Scoping out the Common-Sense Perspective on Meaningful Work: Theory, Data and Implications for Human Resource Management and Development. Organizacija, 56(1), 80-89.

Yeoman, R. (2023). Meaningful work. In Encyclopedia of Human Resource Management, 258-259.

Received: 08-May-2023, Manuscript No. JOCCC-23-13726; Editor assigned: 10-May-2023, Pre QC No. JOCCC-23-13726(PQ); Reviewed: 24-May-2023, QC No. JOCCC-23-13726; Published: 31-May-2023