Research Article: 2021 Vol: 20 Issue: 5

The Impact of Artificial Morality on the Performance of Textile Industry of the ASEAN Countries

Rusdi Omar, Universiti Utara Malaysia

Kamarul Azman Khamis, Universiti Utara Malaysia

Kittisak Jermsittiparsert, Dhurakij Pundit University

Narentheren Kaliappen, Universiti Utara Malaysia

Katarzyna Szymczyk, Czestochowa University of Technology

Abstract

The primary purpose of this article is to examine the impact of artificial morality, measured by the increase in machine output, the increase in machine accuracy, the reduction in labor work hours, and the reduction in the cost of output, on the performance of the textile industry in ASEAN countries. Data were gathered from textile firms currently operating in ASEAN countries from 2002 to 2018, and a fixed-effects model was estimated in STATA to test the hypotheses. The results reveal a positive link between artificial morality and the performance of the textile industry in ASEAN countries. These findings suggest that policymakers and implementing authorities should develop and implement policies that improve businesses' artificial morality and thereby enhance firm performance.

Keywords

Artificial Morality, Firm Performance, Textile Industry, ASEAN Countries.

Introduction

Artificial morality shifts part of the burden for ethical behavior away from designers and users and onto the computer systems themselves (Klumpp, 2018). The task of building artificial moral agents becomes especially important as computers are designed to act with ever greater autonomy. The speed at which computers execute tasks increasingly prevents humans from evaluating whether each action is performed responsibly or ethically. Implementing software agents with moral decision-making capabilities promises computer systems that can assess whether each action they perform is ethically appropriate. Put simply, it will be easier to use computers ethically if sensitivity to ethical and legal values is built into the software itself (Wallach et al., 2008).

Whether or not artificial morality is genuine morality, artificial agents act in ways that have moral consequences (Lin et al., 2010). The point is not merely that they may cause harm; falling trees do that too. Rather, it is to draw attention to the fact that the harms caused by artificial agents may be monitored and regulated by the agents themselves (Soebandrija et al., 2018).

We contend that artificial morality should also be approached proactively, as an engineering design challenge of explicitly building morally appropriate behavior into artificial agents (Haenlein & Kaplan, 2019). Attempting to design artificial moral agents forces us to consider the information required for basic moral decision-making and the algorithms that may appropriately be applied to that information (Canhoto & Clear, 2019). We pick up where Wallach et al. (2008) left off: central to building a morally praiseworthy agent is giving it enough intelligence to assess the effects of its actions on sentient beings and to use those assessments to make appropriate choices (Floridi, 1999). Top-down approaches to this task involve turning explicit theories of moral behavior into algorithms. Bottom-up approaches involve attempts to train or evolve agents whose behavior emulates morally praiseworthy human behavior (Soltani-Fesaghandis & Pooya, 2018).

Literature Review

Artificial Intelligence

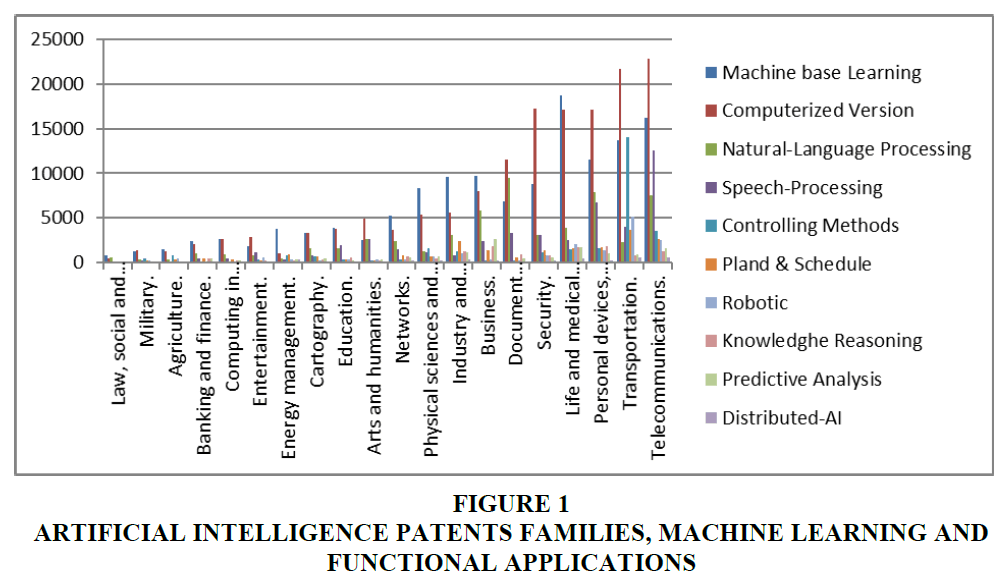

We are living in a global village where distance no longer matters (Jermsittiparsert et al., 2013). Cultures are drawing closer every minute, largely because of remarkable innovations in technology (Hussain et al., 2017; Haseeb et al., 2019). In the current era, the most far-reaching innovation is the replacement of human intelligence with artificial intelligence. Every innovation has its pros and cons, but here we focus only on the positive effects (Hussain et al., 2018). The role of artificial intelligence will strengthen over time (Hussain et al., 2019). Continued advances in artificial intelligence are also considered one of the factors that reduce human effort, which may ultimately make people lazier (Hussain et al., 2018; Somjai et al., 2020) (Table 1 & Figure 1).

Table 1 Artificial Intelligence Patent Families by Machine Learning and Functional Application

| Application field | Machine Learning | Computer Vision | Natural Language Processing | Speech Processing | Control Methods | Planning & Scheduling | Robotics | Knowledge Reasoning | Predictive Analysis | Distributed AI |
|---|---|---|---|---|---|---|---|---|---|---|
| Law, social and behavioral sciences | 781 | 405 | 551 | 122 | 26 | 154 | 38 | 124 | 66 | 24 |
| Military | 1301 | 1344 | 371 | 270 | 444 | 242 | 256 | 111 | 112 | 74 |
| Agriculture | 1431 | 1197 | 292 | 127 | 779 | 283 | 416 | 83 | 139 | 49 |
| Banking and finance | 2369 | 2048 | 1056 | 494 | 88 | 436 | 100 | 395 | 450 | 82 |
| Computing in government | 2584 | 2588 | 939 | 445 | 150 | 381 | 136 | 244 | 214 | 72 |
| Entertainment | 1823 | 2891 | 738 | 1088 | 310 | 200 | 529 | 190 | 134 | 42 |
| Energy management | 3767 | 1057 | 398 | 310 | 735 | 945 | 337 | 188 | 300 | 336 |
| Cartography | 3277 | 3335 | 1611 | 760 | 698 | 698 | 258 | 366 | 426 | 99 |
| Education | 3915 | 3768 | 1643 | 1952 | 285 | 366 | 373 | 533 | 248 | 57 |
| Arts and humanities | 2490 | 4853 | 2670 | 2616 | 238 | 274 | 372 | 204 | 278 | 45 |
| Networks | 5297 | 3660 | 2351 | 1499 | 344 | 790 | 381 | 631 | 571 | 184 |
| Physical sciences and engineering | 8331 | 5398 | 1285 | 1184 | 1541 | 722 | 680 | 445 | 721 | 172 |
| Industry and manufacturing | 9570 | 5574 | 3032 | 799 | 1263 | 2405 | 1074 | 1214 | 1087 | 383 |
| Business | 9710 | 7969 | 5851 | 2423 | 272 | 1382 | 351 | 1821 | 2586 | 190 |
| Document management and publishing | 6842 | 11531 | 9527 | 3292 | 164 | 518 | 222 | 881 | 432 | 84 |
| Security | 8814 | 17236 | 3034 | 3076 | 1163 | 1402 | 794 | 796 | 595 | 244 |
| Life and medical sciences | 18773 | 17099 | 3819 | 2505 | 1495 | 1618 | 1989 | 1699 | 1695 | 429 |
| Personal devices, computing and HCI | 11586 | 17165 | 7921 | 6679 | 1626 | 1664 | 1417 | 1839 | 1070 | 224 |
| Transportation | 13742 | 21745 | 2331 | 3998 | 14031 | 3615 | 5081 | 762 | 867 | 534 |
| Telecommunications | 16202 | 22872 | 7554 | 12550 | 3497 | 2602 | 2477 | 1293 | 1534 | 517 |

Table 1 shows that telecommunications, which arguably has the greatest need for AI, accounts for the largest number of AI patent families (71,098 across the ten categories), followed by transportation (66,706) (Chang & Hsu, 2018). Across all application fields, the computer vision column (153,735) and the machine learning column (132,605) account for by far the most patent families. In telecommunications, speech processing (12,550) ranks third after these two, which is consistent with communication-oriented applications such as interactive voice response (IVR) systems.

The idea behind top-down approaches to the design of artificial moral agents (AMAs) is that moral principles or theories may be used as rules for selecting ethically appropriate actions (Brill et al., 2019). Rule-based approaches to artificial intelligence have been rightly criticized as unsuitable for providing a general theory of intelligent action (Turkina, 2018). Such approaches have proved insufficiently robust for almost any real-world task (Anderson & Anderson, 2007). Nevertheless, although (we believe) the problems with rule-based approaches are fairly serious, it is incumbent on aspiring designers of AMAs to consider the prospects and problems inherent in top-down approaches (Powars, 2005). Specific problems with top-down approaches to artificial morality (as opposed to general problems with rule-based AI) are best understood after careful consideration of the prospects for building AMAs by implementing decision procedures modeled on explicit moral theories (Schneider & Leyer, 2019). Candidate principles for conversion into algorithmic decision procedures range from religious ideals and moral codes to culturally endorsed values and philosophical systems. Asimov's three laws of robotics are a well-known example of this approach. While many of the same values are evident across contrasting ethical systems, there are also significant differences, which would make the selection of any particular theory for top-down implementation a matter of open debate (Hussain et al., 2012).

We will not enter that debate here; rather, our task is to be equal-opportunity critics, pointing out the strengths and limitations of some prominent top-down approaches. Conceptually, top-down morality is all about having a set of rules to follow (Riaz et al., 2018). On one way of thinking, the list of rules is a heterogeneous grab bag of whatever needs to be specifically prohibited or prescribed. A strength of such commandment models is that they can have particular rules tailored to particular kinds of morally relevant behavior (one for killing, one for stealing, and so on) (Panichayakorn & Jermsittiparsert, 2019). However, a major difficulty with rule models conceived this way is that the rules often conflict (Cliff et al., 1993). Such conflicts produce computationally intractable situations unless there is some further principle or rule for resolving them. Most commandment systems are silent on how conflicts are to be resolved.

Asimov's initial approach was to prioritize the laws so that the first law always overrode the second, which in turn always overrode the third (Grau, 2006). Unfortunately, the first two of Asimov's original laws are each sufficient on their own to generate intractable conflicts (Allen et al., 2000). The later addition of a “zeroth” law, to protect humanity as a whole, is silent on what counts as such harm, and therefore does not effectively arbitrate when there are mutually incompatible duties to avoid harm to different individuals, i.e., when protecting one person will harm another, and vice versa. Philosophers have attempted to find more general or abstract principles from which more specific or particular principles might be derived. A strength of utilitarianism in a computational setting is its explicit commitment to quantifying goods and harms (Nawaz & Hassan, 2016).
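A minimal Python sketch can make the strict priority ordering, and the conflict it cannot resolve, concrete. The `Action` fields and their boolean encoding of each law are hypothetical simplifications introduced only for illustration; they are not a formalization of Asimov's laws proposed by any of the cited authors.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Action:
    # Hypothetical boolean encoding: True means the action would violate that law.
    name: str
    harms_human: bool      # First Law
    disobeys_order: bool   # Second Law
    endangers_self: bool   # Third Law


def choose_by_priority(actions: Sequence[Action]) -> Optional[Action]:
    """Select an action under a strict (lexical) priority of the three laws.

    Returns None when every option violates the First Law: ordering the
    laws cannot settle a conflict *within* the top-ranked law.
    """
    permissible = [a for a in actions if not a.harms_human]
    if not permissible:
        return None
    # Tuples compare element by element, so avoiding a Second Law violation
    # always outranks avoiding a Third Law violation.
    return min(permissible, key=lambda a: (a.disobeys_order, a.endangers_self))


# Obeying an order outranks self-preservation.
errand = [Action("fetch", False, False, True), Action("refuse", False, True, False)]
print(choose_by_priority(errand).name)  # fetch

# Every option harms someone: the ordering alone yields no decision.
dilemma = [Action("swerve_left", True, False, False), Action("swerve_right", True, False, False)]
print(choose_by_priority(dilemma))  # None
```

Returning `None` rather than picking arbitrarily mirrors the point above: without some further rule for settling conflicts, the system has no principled answer.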

This is also, of course, a weakness, for it is a notorious problem for utilitarianism that certain pleasures and pains appear to be incommensurable (Dubey et al., 2019). While some economists may think that money provides a common measure (how much one is willing to pay to obtain some good or avoid some harm), this is controversial. Moreover, even if the measurement problem could be solved, any top-down implementation of utilitarianism would have a great deal of computing to do. This is because many, if not all, of the consequences of the available alternatives must be computed in advance in order to compare them (Ciampi et al., 2018). The consequences of acts will range over different kinds of members of the moral community (people, perhaps some animals, and perhaps even entire ecosystems), and numerous secondary “ripple effects” will have to be anticipated. A utilitarian AMA may also need to decide whether and how to discount effects in the distant future.
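The computational burden described above can be illustrated with a small Python sketch of a utilitarian chooser. It assumes, for illustration only, that all goods and harms have already been mapped onto one numeric utility scale (precisely the commensurability assumption questioned above) and that distant effects are discounted exponentially at a hypothetical rate; the option names and numbers are invented.

```python
from typing import Dict, List, Tuple

# An effect is (utility, years_from_now); an outcome is (probability, effects).
Effect = Tuple[float, float]
Outcome = Tuple[float, List[Effect]]


def discounted_value(effects: List[Effect], rate: float) -> float:
    """Sum utilities, discounting each one by (1 + rate) ** years."""
    return sum(u / (1.0 + rate) ** t for u, t in effects)


def expected_utility(outcomes: List[Outcome], rate: float) -> float:
    """Probability-weighted sum of the discounted value of every outcome."""
    return sum(p * discounted_value(effects, rate) for p, effects in outcomes)


def best_action(options: Dict[str, List[Outcome]], rate: float = 0.0) -> str:
    """Return the option with the highest expected (discounted) utility."""
    return max(options, key=lambda name: expected_utility(options[name], rate))


options = {
    "A": [(1.0, [(10.0, 0.0)])],                # modest benefit now
    "B": [(1.0, [(-2.0, 0.0), (15.0, 10.0)])],  # small harm now, larger benefit in 10 years
}
print(best_action(options))            # B (13 > 10 with no discounting)
print(best_action(options, rate=0.1))  # A (B falls to about 3.78)
```

The same two options are ranked differently depending only on the discount rate, which is exactly the kind of choice a utilitarian AMA would have to make explicitly.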

In contrast to utilitarianism, deontological theories focus on the motives for action and require agents to respect specific duties and rights (Kaplan & Haenlein, 2019). The computational problem does not go away for the deontologist, because consistency among duties can generally only be assessed through their effects in the world (Yanpolskiy, 2013). Of course, people apply consequentialist and deontological reasoning to practical problems without endlessly calculating the utility or moral implications of an act in every possible situation. Our morality, like our reasoning, is bounded by time, capacity, and inclination (Syam & Sharma, 2018). In a similar vein, limits may also be set on the extent to which a computational system explores the consequences or imperatives of a particular action (Anderson & Anderson, 2007).
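One way to read “setting limits on how far a system explores consequences” is a depth-limited lookahead. The Python sketch below is a hypothetical illustration, not a method from the cited works: it scores each option by its immediate utility plus the best continuation found within a fixed depth, and shows that the depth bound alone can change the decision. The tree and the utilities are invented.

```python
from typing import Callable, Dict, List

Tree = Dict[str, List[str]]


def lookahead_value(state: str, tree: Tree, utility: Callable[[str], float], depth: int) -> float:
    """Utility of `state` plus the best continuation reachable within `depth` further steps."""
    children = tree.get(state, [])
    if depth == 0 or not children:
        return utility(state)
    return utility(state) + max(lookahead_value(c, tree, utility, depth - 1) for c in children)


def choose_bounded(root: str, tree: Tree, utility: Callable[[str], float], depth: int) -> str:
    """Pick the immediate option with the highest bounded lookahead value (depth >= 1)."""
    return max(tree[root], key=lambda c: lookahead_value(c, tree, utility, depth - 1))


tree: Tree = {"start": ["a", "b"], "a": ["a1"], "b": ["b1"]}
utils = {"start": 0.0, "a": 5.0, "b": 1.0, "a1": -10.0, "b1": 3.0}


def utility(state: str) -> float:
    return utils[state]


print(choose_bounded("start", tree, utility, depth=1))  # a (sees only 5 vs 1)
print(choose_bounded("start", tree, utility, depth=2))  # b (a leads to -10, so 4 beats -5)
```

A shallow bound is cheap but can miss a harmful downstream consequence; a deeper bound catches it at exponentially growing cost in larger trees.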

How might we set such limits on the options a computer system considers, and will the course of action such a system takes in response to a specific challenge be satisfactory? In humans, the limits of reflection are set by heuristics and affective controls. Both heuristics and affect can at times be irrational, but they also tend to embody wisdom gained through experience. We may well be able to implement heuristics in computational systems. Professional codes of conduct may be of some help in this context (e.g., Floridi and Sanders (2004) argue that the Association for Computing Machinery (ACM) Code of Ethics might be adapted for artificial agents). Nevertheless, heuristic rules of thumb leave many issues of priority and consistency unresolved. The implementation of affective controls represents an even more difficult challenge (Yoon & Lee, 2019).

Artificial intelligence also has an effect on the top-down approach (Hinks, 2019). By bottom-up approaches to the development of AMAs, we mean those that do not impose a specific moral theory but instead seek to provide environments in which appropriate behavior is selected or rewarded (Ramos et al., 2008). These approaches to developing moral sensibility involve piecemeal learning through experience, whether through the unconscious, mechanistic trial and error of evolution, the tinkering of programmers or engineers as they encounter new challenges, or the educational development of a learning machine (Allen et al., 2005). Each of these methods shares some features with the way a young child acquires moral education in a social context that distinguishes appropriate from inappropriate behavior without necessarily providing an explicit theory of what counts as such (Grau, 2006). Bottom-up strategies hold the promise of giving rise to skills and standards that are integral to the overall design of the system, but they are extremely difficult to evolve or develop (Floridi, 1999).

Evolution and learning are filled with trial and error, that is, learning from mistakes and unsuccessful strategies (Caputo et al., 2019). This can be a slow process, even in the accelerated world of computer processing and evolutionary algorithms. Alan Turing reasoned in his classic paper “Computing Machinery and Intelligence” that if we could put a computer through an educational regime comparable to the education a child receives, then “We may hope that machines will eventually compete with men in all purely intellectual fields” (Piarce et al., 1996). Presumably, such an educational regime could include a moral education similar to the way in which we humans acquire sensitivity to the moral implications of our actions. However, simulating a child's mind is only one of the strategies being pursued for designing intelligent agents capable of learning (Wallech & Allan, 2006).

Artificial Intelligence and Social Values

The platform for artificial life (Alife) experiments, i.e., the simulation of evolution within computer systems, is the unfolding of a genetic algorithm within relatively simply defined computer environments (Allen et al., 2001). An interesting question is whether a computer or Alife might be able to evolve ethical behavior (Moar, 2007). The prospect that Alife could give rise to moral agents derives from a well-known hypothesis that a science of sociobiology may yield an accurate account of the evolutionary origins of ethics. If the foundational values of human society are rooted in our biological heritage, then it is reasonable to suppose that these values could re-emerge in a simulation of natural selection. It remains an open question whether Alife will prove useful in developing artificial moral agents capable of engaging the more complex dilemmas we encounter daily (Anderson & Anderson, 2007).

We should not overlook, however, that evolution has also given rise to immoral behavior.

Unbiased Learning Platforms

Noting the limitations inherent in systems designed around rule-based ethics, Chris Lang proposes “quest ethics”, a method in which the computer learns about ethics through an open-ended quest to maximize its goal, whether that goal is to be “just” or to be “good”. Lang is optimistic, but can a machine whose actions are determined by its prior state and inputs gain the freedom to choose between alternatives, to prove itself to an environment, and to act according to human values? There are two central challenges in designing a computer capable of continually questing for a higher morality: specifying the goal or goals of the system, and supplying an unending stream of fresh, real-world information that expands the space the system explores in its quest (Yoshikawa, 1990). Although defining the goals could lead to considerable philosophical disagreement, the more difficult challenge in designing a morally praiseworthy agent lies in stimulating the system to expand its domain of possible choices (Floridi, 1999; Moar, 2007).

Associative Learning Machines and Humanoids

If morality is largely learned, developed through experience and trial and error, and honed through our capacity for reason, then teaching an AMA to be a moral agent may well require a comparable process of education (Howe & Matsuoka, 1999). Associative learning techniques model the education children receive as rewards and punishment, approval and disapproval. Finding effective analogues of reward and punishment suitable for training a robot or computer is a tricky task. One suggestion is to reward unbiased learning machines with richer information when they behave ethically. Simulating physical pain is also an option.
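The reward-and-disapproval idea can be sketched in a few lines of Python. This is a toy under stated assumptions, not Lang's proposal or any cited system: a hypothetical trainer returns +1 for approval, -1 for disapproval and 0 otherwise, the agent keeps an exponentially weighted average of the feedback received for each action, and it then prefers the action with the highest learned value.

```python
from typing import Callable, Dict, List


def train(actions: List[str], feedback: Callable[[str], float],
          episodes: int = 50, lr: float = 0.2) -> Dict[str, float]:
    """Learn a value per action from trainer feedback.

    Each episode tries every action once (no random exploration, so the
    result is deterministic) and moves its value a fraction `lr` toward
    the feedback received.
    """
    values = {a: 0.0 for a in actions}
    for _ in range(episodes):
        for a in actions:
            values[a] += lr * (feedback(a) - values[a])
    return values


# Hypothetical trainer: approves sharing, disapproves grabbing, is neutral about ignoring.
trainer = {"share": 1.0, "grab": -1.0, "ignore": 0.0}
values = train(list(trainer), lambda a: trainer[a])
best = max(values, key=values.get)
print(best)  # share
```

The feedback here is a single number per action; a richer training signal would replace `feedback` with something that says more than “acceptable” or “unacceptable”.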

The expectation behind a reward-and-punishment system of moral education may be that a child's discovery of shared ethical concerns or principles can be guided most effectively by parents and teachers who have at least a rudimentary understanding of the direction the learning will take. Children typically move on to the next level of moral development when they come to appreciate the limitations of the reasons they have identified. The fundamental challenge of imbuing an AMA with this level of insight lies in implementing feedback to the computational system that goes beyond a simple binary indicator that a justification for an act is acceptable or unacceptable.

If no single approach meets the criteria for designating an artificial entity as a moral agent, then some hybrid will be necessary. Hybrid approaches pose the additional problem of meshing both diverse philosophies and distinct architectures. Genetically inherited predispositions, the rediscovery of core values through experience, and the learning of culturally endorsed rules all influence the moral development of a child. During young adulthood those rules may be reformulated into abstract principles that guide one's behavior. We should not be surprised if designing a praiseworthy moral agent likewise requires computational systems capable of integrating diverse inputs and influences, including top-down values informed by a foundational moral grammar and a rich appreciation of context.
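A hybrid of the two strategies can be sketched by letting top-down rules act as hard constraints while bottom-up learned values rank whatever the rules permit. The Python below is a hypothetical illustration of that combination, not an architecture proposed in the cited literature; the action names and scores are invented.

```python
from typing import Dict, Iterable, Optional, Set


def hybrid_choose(actions: Iterable[str], forbidden: Set[str],
                  learned: Dict[str, float]) -> Optional[str]:
    """Filter actions with top-down prohibitions, then rank the rest by learned value.

    Returns None if the rules forbid every option. Actions with no learned
    value default to 0.0.
    """
    allowed = [a for a in actions if a not in forbidden]
    if not allowed:
        return None
    return max(allowed, key=lambda a: learned.get(a, 0.0))


learned = {"grab": 2.0, "share": 0.5, "ignore": 0.1}  # e.g. produced by a bottom-up learner
print(hybrid_choose(learned, {"grab"}, learned))  # share: the rule vetoes the top-scoring "grab"
print(hybrid_choose(learned, set(), learned))     # grab: without the rule, learning alone decides
```

The design choice is that rules veto and learning ranks; other hybrids could instead let learned values override soft rules, which raises the meshing problem described above.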

Designers of AMAs cannot afford to be theoretical purists about how to approach moral intelligence. One central question is whether systems capable of making moral decisions will require some form of emotions, consciousness, a theory of mind, and an understanding of the semantic content of symbols, or will need to be embodied in the world. While we believe that hybrid systems without affective or advanced cognitive faculties will be useful in many domains, it will be essential to recognize when additional capabilities are required (Spector, 1987). Embodiment is both a goal and a bottom-up strategy that is especially evident in subsumption-based and developmental or epigenetic robotic design.

The promise that other advanced affective and cognitive skills will emerge through the development of complex embodied systems is highly speculative. Nevertheless, fostering the emergence of advanced faculties, or designing modules for complex affective and cognitive skills, may well be required for fully autonomous AMAs.

Evaluating Machine Morality

As noted above, the goal of the field of artificial morality is to design artificial agents that act as though they are moral agents. Questions remain about appropriate criteria for evaluating success in this area. Just as there is no single universal ethical theory, there is no agreement on what it means to be a moral agent, let alone a successful artificial moral agent. Based on this discussion, the following hypotheses are proposed:

H1 There is a positive link between the increase in machine output and the performance of the textile industry in ASEAN countries.

H2 There is a positive link between the increase in machine accuracy and the performance of the textile industry in ASEAN countries.

H3 There is a negative link between the reduction in work hours and the performance of the textile industry in ASEAN countries.

H4 There is a negative link between the reduction in cost and the performance of the textile industry in ASEAN countries.

Research Methods

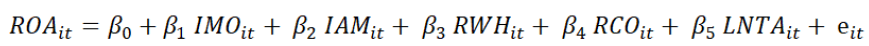

The main objective of this article is to examine the impact of artificial morality, measured by the increase in machine output (IMO), the increase in machine accuracy (IAM), the reduction in labor work hours (RWH), and the reduction in the cost of output (RCO), on the performance of the textile industry in ASEAN countries, measured by return on assets (ROA). Firm size, measured by the logarithm of total assets (LNTA), is used as a control variable. The data were gathered from textile firms currently operating in ASEAN countries from 2002 to 2018, and a fixed-effects model was estimated in STATA to test the hypotheses. Based on these variable measurements, this study developed the following equation:
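A specification consistent with the variables defined above is sketched below. It assumes the standard fixed-effects form with a firm-specific intercept; the symbols $i$, $t$, $\beta_0,\dots,\beta_5$, $\mu_i$ and $\varepsilon_{it}$ are our notation and not necessarily those of the original equation.

$$
\mathrm{ROA}_{it} = \beta_0 + \beta_1\,\mathrm{IMO}_{it} + \beta_2\,\mathrm{IAM}_{it} + \beta_3\,\mathrm{RWH}_{it} + \beta_4\,\mathrm{RCO}_{it} + \beta_5\,\mathrm{LNTA}_{it} + \mu_i + \varepsilon_{it}
$$

Here $i$ indexes firms, $t = 2002, \dots, 2018$ indexes years, $\mu_i$ captures time-invariant firm characteristics, and $\varepsilon_{it}$ is the error term. Under H1 and H2 one would expect $\beta_1, \beta_2 > 0$, and under H3 and H4, $\beta_3, \beta_4 < 0$.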

Findings

The results include descriptive statistics, a correlation matrix, tests of the regression assumptions, and a Hausman test of whether the fixed-effects or random-effects model is appropriate. The descriptive statistics in Table 2 report the mean, standard deviation, minimum, and maximum of each construct.

Table 2 Descriptive Analysis

| Variable | Obs | Mean | Std. Dev. | Min | Max |
|---|---|---|---|---|---|
| ROA | 170 | 1.618 | 0.567 | -0.179 | 3.437 |
| IMO | 170 | 1.191 | 0.205 | 0.021 | 1.771 |
| IAM | 170 | 0.249 | 0.256 | 0 | 0.846 |
| RWH | 170 | 0.158 | 0.214 | 0 | 0.983 |
| RCO | 170 | 4.974 | 0.841 | 2.862 | 6.399 |
| LNTA | 170 | 10.541 | 0.214 | 201.212 | 321.025 |

The correlation matrix in Table 3 shows the pairwise correlations among the variables. The variables are correlated both negatively and positively, and no pair is strongly enough correlated to indicate a multicollinearity problem (the largest absolute coefficient is 0.405, between IAM and LNTA).

Table 3 Correlation Matrix

| Variables | ROA | IMO | IAM | RWH | RCO | LNTA |
|---|---|---|---|---|---|---|
| ROA | 1 | | | | | |
| IMO | -0.173 | 1 | | | | |
| IAM | 0.099 | 0.241 | 1 | | | |
| RWH | -0.054 | 0.005 | 0.064 | 1 | | |
| RCO | 0.013 | 0.179 | 0.161 | -0.106 | 1 | |
| LNTA | 0.249 | 0.137 | -0.405 | -0.363 | -0.09 | 1 |

The variance inflation factors (VIF) in Table 4 test the multicollinearity assumption. All values are well below the commonly used rule-of-thumb threshold of 10 (mean VIF 1.26), indicating that the regressors are not highly correlated.

Table 4 Variance Inflation Factor (VIF)

| Variable | VIF | 1/VIF |
|---|---|---|
| IMO | 1.579 | 0.633 |
| IAM | 1.241 | 0.806 |
| RWH | 1.217 | 0.822 |
| RCO | 1.169 | 0.855 |
| LNTA | 1.096 | 0.912 |
| Mean VIF | 1.26 | |
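For readers who want to reproduce this diagnostic outside STATA, the definition is VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing regressor j on all the other regressors with an intercept; 1/VIF is the tolerance. The following is a minimal standard-library Python sketch of that definition. The data at the bottom are invented for illustration and are not the study's data.

```python
from statistics import fmean
from typing import Dict, List

Column = List[float]


def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def r_squared(y: Column, regressors: List[Column]) -> float:
    """R^2 of an OLS regression of y on the regressors plus an intercept."""
    cols = [[1.0] * len(y)] + regressors
    k = len(cols)
    xtx = [[sum(u * v for u, v in zip(cols[i], cols[j])) for j in range(k)] for i in range(k)]
    xty = [sum(u * v for u, v in zip(cols[i], y)) for i in range(k)]
    beta = solve(xtx, xty)  # normal equations
    fitted = [sum(beta[j] * cols[j][t] for j in range(k)) for t in range(len(y))]
    y_bar = fmean(y)
    ss_res = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


def vif(columns: Dict[str, Column]) -> Dict[str, float]:
    """Variance inflation factor of each regressor given all the others."""
    out = {}
    for name, x in columns.items():
        others = [v for key, v in columns.items() if key != name]
        out[name] = 1.0 / (1.0 - r_squared(x, others))
    return out


# Invented example: two regressors with correlation 0.8, so VIF = 1 / (1 - 0.64).
data = {"x1": [1.0, 2.0, 3.0, 4.0, 5.0], "x2": [2.0, 1.0, 4.0, 3.0, 5.0]}
result = vif(data)
print({k: round(v, 3) for k, v in result.items()})  # {'x1': 2.778, 'x2': 2.778}
print(round(fmean(result.values()), 3))             # mean VIF: 2.778
```

The same arithmetic links the columns of Table 4: for example, 1/1.579 ≈ 0.633, and the mean of the five reported VIFs is 1.26.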

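The skewness and kurtosis test in Table 5 checks the normality assumption. Normality is rejected at the 5% level for IMO, IAM, RWH, RCO, and LNTA (Prob>chi2 < 0.05), though not for ROA (Prob>chi2 = 0.066). We consider that this departure from normality does not materially affect the results, given the size of the data set.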

Table 5 Skewness and Kurtosis Test

| Variable | Obs | Pr(Skewness) | Pr(Kurtosis) | adj_chi2(2) | Prob>chi2 |
|---|---|---|---|---|---|
| ROA | 170 | 0.311 | 0.036 | 5.42 | 0.066 |
| IMO | 170 | 0.208 | 0 | 27.66 | 0 |
| IAM | 170 | 0 | 0.002 | 26.13 | 0 |
| RWH | 170 | 0 | 0 | 64.41 | 0 |
| RCO | 170 | 0 | 0.003 | 20.61 | 0 |
| LNTA | 170 | 0 | 0 | . | 0 |
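The columns of Table 5 match the output of STATA's `sktest`, which (as we understand it) combines separate skewness and kurtosis tests following D'Agostino, Belanger and D'Agostino, with Royston's adjustment. As a simpler illustration of testing normality through the same two moments, the Python sketch below swaps in a different test, the Jarque-Bera statistic JB = n/6 · (S² + (K - 3)²/4), whose p-value under normality comes from a chi-square distribution with 2 degrees of freedom. It will not reproduce the numbers in Table 5, and the sample data are invented.

```python
import math
from typing import Sequence, Tuple


def jarque_bera(x: Sequence[float]) -> Tuple[float, float, float, float]:
    """Return (skewness, kurtosis, JB statistic, asymptotic p-value) using population moments."""
    n = len(x)
    mean = sum(x) / n
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2  # equals 3 for a normal distribution
    jb = n / 6.0 * (skew ** 2 + (kurt - 3.0) ** 2 / 4.0)
    p_value = math.exp(-jb / 2.0)  # survival function of chi-square with 2 df is exp(-x/2)
    return skew, kurt, jb, p_value


# Invented, symmetric but flat sample: skewness 0, kurtosis 1.7 (well below 3).
skew, kurt, jb, p = jarque_bera([1.0, 2.0, 3.0, 4.0, 5.0])
print(round(skew, 3), round(kurt, 3), round(jb, 4), round(p, 4))
```

The p-value is asymptotic, so with only five observations this example is purely illustrative; with 170 observations, as in Table 5, the chi-square approximation is more reasonable.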

The Breusch-Pagan test and the Wooldridge test were used to check the homoscedasticity and no-autocorrelation assumptions, respectively, and the statistics indicate that neither assumption holds. We consider that this does not affect the results because a fixed-effects model is used in the study.

Both fixed-effects and random-effects models were estimated first, and the Hausman test was then applied to determine which is appropriate. The two models are presented in Table 6 and Table 7.

Table 6 Fixed Effect Model

| ROA | Coef. | S.E. | t-value | P>t | L.L. | U.L. | Sig |
|---|---|---|---|---|---|---|---|
| IMO | 1.037 | 0.128 | 8.07 | 0 | 0.754 | 1.32 | *** |
| IAM | 0.438 | 0.231 | 1.9 | 0.044 | 0.647 | 0.372 | |
| RWH | -0.951 | 0.21 | -4.54 | 0.001 | -1.413 | -0.49 | *** |
| RCO | -0.668 | 0.217 | -3.078 | 0.002 | -0.844 | -0.109 | *** |
| LNTA | 0.014 | 0.003 | 4.53 | 0.001 | 0.007 | 0.021 | *** |
| Constant | 1.909 | 0.547 | 3.49 | 0.001 | 0.832 | 2.987 | *** |
| R-squared | 0.46 | Prob > F | 0 | | | | |

Table 7 Random Effect Model

| ROA | Coef. | S.E. | t-value | p-value | L.L. | U.L. | Sig |
|---|---|---|---|---|---|---|---|
| IMO | 0.95 | 0.188 | 5.06 | 0 | 0.582 | 1.319 | *** |
| IAM | -0.039 | 0.201 | -0.19 | 0.846 | -0.433 | 0.355 | |
| RWH | 0.597 | 0.197 | 3.03 | 0.002 | 0.211 | 0.983 | *** |
| RCO | -0.032 | 0.062 | -0.51 | 0.611 | -0.154 | 0.091 | |
| LNTA | 0.015 | 0.002 | 8.5 | 0 | 0.012 | 0.019 | *** |
| Constant | 0.357 | 0.436 | 0.82 | 0.413 | -0.498 | 1.212 | |
| Overall R-squared | 0.312 | Prob > chi2 | 0 | | | | |

*** p<0.01, ** p<0.05, * p<0.1

The Hausman test is used to choose between the fixed-effects and random-effects models. Because its p-value (0.038) is below 0.05, the null hypothesis that the random-effects estimates are consistent is rejected, and the fixed-effects model is preferred. Table 8 shows the results of the Hausman test.

Table 8 Hausman Test

| Statistic | Value |
|---|---|
| Chi-square test value | 11.743 |
| P-value | 0.038 |
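The Hausman statistic compares the two sets of slope estimates: H = d' (V_FE - V_RE)^-1 d, where d = b_FE - b_RE and V denotes each model's coefficient covariance matrix; under the null it is asymptotically chi-square with as many degrees of freedom as compared coefficients. The standard-library Python sketch below computes H and its p-value. The two-coefficient inputs are invented, because the full covariance matrices behind Tables 6 and 7 are not reported. Assuming five degrees of freedom (one per regressor), the reported statistic of 11.743 corresponds to a p-value of about 0.038, in line with Table 8.

```python
import math
from typing import List

Matrix = List[List[float]]


def solve(a: Matrix, b: List[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] for i in range(n)]


def hausman(b_fe: List[float], b_re: List[float], v_fe: Matrix, v_re: Matrix) -> float:
    """H = d' (V_FE - V_RE)^-1 d, computed by solving a linear system instead of inverting."""
    d = [f - r for f, r in zip(b_fe, b_re)]
    v_diff = [[x - y for x, y in zip(row_fe, row_re)] for row_fe, row_re in zip(v_fe, v_re)]
    w = solve(v_diff, d)
    return sum(di * wi for di, wi in zip(d, w))


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail chi-square probability via the series for the regularized lower
    incomplete gamma function (adequate for moderate x, as here)."""
    if x <= 0:
        return 1.0
    a, z = df / 2.0, x / 2.0
    term = total = 1.0 / a
    n = 1
    while term > 1e-15 * total:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return 1.0 - lower


# Invented example with diagonal covariance matrices: H = 1/0.5 + 4/2 = 4.
h = hausman([1.0, 2.0], [0.0, 0.0], [[0.75, 0.0], [0.0, 2.5]], [[0.25, 0.0], [0.0, 0.5]])
print(h)                             # 4.0
print(round(chi2_sf(h, 2), 4))       # 0.1353, i.e. exp(-2)
print(round(chi2_sf(11.743, 5), 3))  # roughly 0.038 when df = 5
```

In practice V_FE - V_RE can fail to be positive definite in finite samples, which is one reason statistical packages warn about the test's assumptions.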

The fixed-effects regression shows that IMO and IAM have positive and significant links with ROA: their coefficients are positive, and their t- and p-values meet conventional significance criteria. RWH and RCO, in contrast, have negative and significant links with ROA, with negative coefficients and t- and p-values that also meet these criteria. Table 9 shows the fixed-effects regression results.

Table 9 Regression Analysis Fixed Effect Model

| ROA | Coef. | S.E. | t-value | P>t | L.L. | U.L. |
|---|---|---|---|---|---|---|
| IMO | 1.037 | 0.128 | 8.07 | 0 | 0.754 | 1.32 |
| IAM | 0.438 | 0.231 | 1.9 | 0.044 | 0.647 | 0.372 |
| RWH | -0.951 | 0.21 | -4.54 | 0.001 | -1.413 | -0.49 |
| RCO | -0.668 | 0.217 | -3.078 | 0.002 | -0.844 | -0.109 |
| LNTA | 0.014 | 0.003 | 4.53 | 0.001 | 0.007 | 0.021 |
| Constant | 1.909 | 0.922 | 2.07 | 0.039 | 0.34 | 4.159 |
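The fixed-effects estimates rest on the within transformation: each variable is demeaned within its own firm, which removes any time-invariant firm effect, and OLS is then run on the demeaned data. The Python sketch below shows this for a single regressor using only the standard library. The firms and values are invented, and a real analysis, like the STATA estimation reported here, would handle several regressors and adjust the standard errors for the absorbed firm means.

```python
from collections import defaultdict
from statistics import fmean
from typing import Dict, List, Tuple

# Each observation: (firm_id, x, y). Invented panel, not the study's data.
Obs = Tuple[str, float, float]


def within_slope(panel: List[Obs]) -> float:
    """Fixed-effects (within) estimate of the slope of y on x."""
    by_firm: Dict[str, List[Obs]] = defaultdict(list)
    for obs in panel:
        by_firm[obs[0]].append(obs)
    num = den = 0.0
    for rows in by_firm.values():
        x_bar = fmean(r[1] for r in rows)  # firm-specific means
        y_bar = fmean(r[2] for r in rows)
        for _, x, y in rows:
            num += (x - x_bar) * (y - y_bar)
            den += (x - x_bar) ** 2
    return num / den


def pooled_slope(panel: List[Obs]) -> float:
    """Pooled OLS slope that ignores firm identity, for comparison."""
    x_bar = fmean(o[1] for o in panel)
    y_bar = fmean(o[2] for o in panel)
    num = sum((o[1] - x_bar) * (o[2] - y_bar) for o in panel)
    den = sum((o[1] - x_bar) ** 2 for o in panel)
    return num / den


# y = 2x + firm effect; firm B has higher x but a much lower intercept.
panel = [("A", 1.0, 12.0), ("A", 2.0, 14.0), ("A", 3.0, 16.0),
         ("B", 4.0, 8.0), ("B", 5.0, 10.0), ("B", 6.0, 12.0)]
print(round(within_slope(panel), 6))  # 2.0: the firm effects are removed
print(round(pooled_slope(panel), 6))  # negative: confounded by the firm effects
```

The contrast between the two slopes is the intuition behind preferring fixed effects when firm characteristics are correlated with the regressors, which is what the Hausman test in Table 8 is meant to detect.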

Discussion and Conclusion

The primary purpose of this article is to examine the impact of artificial morality, measured by the increase in machine output, the increase in machine accuracy, the reduction in labor work hours, and the reduction in the cost of output, on the performance of the textile industry in ASEAN countries. The results reveal a positive link between artificial morality and the performance of the textile industry in ASEAN countries. With artificial intelligence, machine output and accuracy increase while the cost of output and labor hours decrease, which lowers overall costs and improves company performance.

This study stresses that research on artificial morality is urgently needed from both scholarly and managerial perspectives. It is crucial to resolve the issues that have arisen from today's technological advancements. Technology and morality in society are inextricably linked, and change is unavoidable. However, a significant shift in moral norms causes distress for many people who struggle to adjust to new social norms. Philosophers and social scientists must therefore exercise great care to make the transition as smooth as possible.

Limitations and Future Recommendations

This article has limitations that suggest directions for future studies. In particular, it considers only four aspects of artificial intelligence, and future studies should include additional aspects of AI. The findings suggest that policymakers and implementing authorities should develop and implement policies that improve artificial morality in businesses and thereby enhance firm performance.

Acknowledgement

We express our gratitude to the Research Institute for Indonesia, Thailand, and Singapore (UUM-ITS), Ghazali Shafie Graduate School of Government, for their support and mentorship in completing this research.

References

- Allen, B., Varner, G., & Zinser, J. (2001). Prolegomena to any future artificial moral agent. Journal of Experimental and Theoretical Artificial Intelligence, 13(4), 256-265.

- Allen, B., Wallach, W., & Smit, I. (2005). Why machine ethics? IEEE Intelligent Systems, 21(4), 12-17.

- Allen, C., Varner, G., & Zinser, J. (2000). Prolegomena to any future artificial moral agent. Journal of Experimental & Theoretical Artificial Intelligence, 12(3), 251-261.

- Anderson, M., & Anderson, S.L. (2007). Machine ethics: Creating an ethical intelligent agent. AI Magazine, 28(4), 15-17.

- Brill, T.M., Munoz, L., & Miller, R.J. (2019). Siri, Alexa, and other digital assistants: A study of customer satisfaction with artificial intelligence applications. Journal of Marketing Management, 35(15), 1401-1436.

- Canhoto, A.I., & Clear, F. (2019). Artificial intelligence and machine learning as business tools: A framework for diagnosing value destruction potential. International Journal of Machine Learning and Cybernetics, 3(33), 63-83.

- Caputo, F., Cillo, V., Candelo, E., & Liu, Y. (2019). Innovating through digital revolution: The role of soft skills and Big Data in increasing firm performance. International Journal of Machine Learning and Cybernetics, 12(12), 12-23.

- Chang, T.M., & Hsu, M.F. (2018). Integration of incremental filter-wrapper selection strategy with artificial intelligence for enterprise risk management. International Journal of Machine Learning and Cybernetics, 9(3), 477-489.

- Ciampi, F., Marzi, G., & Rialti, R. (2018). Artificial intelligence, big data, strategic flexibility, agility, and organizational resilience: A conceptual framework based on existing literature. International Journal of Production Economics, 3(6), 14-20.

- Cliff, D., Husbands, P., & Harvey, I. (1993). Explorations in evolutionary robotics. Adaptive Behavior, 2(1), 73-110.

- Dubey, R., Gunasekaran, A., Childe, S.J., Bryde, D.J., Giannakis, M., Foropon, C., & Hazen, B.T. (2019). A study of manufacturing organisations. International Journal of Production Economics, 5(7), 12-22.

- Floridi, L. (1999). Information ethics: On the philosophical foundation of computer ethics. Ethics and Information Technology, 1(1), 33-52.

- Grau, C. (2006). There is no "I" in "robot": Robots and utilitarianism. IEEE Intelligent Systems, 21(4), 52-55.

- Haenlein, M., & Kaplan, A. (2019). A brief history of artificial intelligence: On the past, present, and future of artificial intelligence. California Management Review, 61(4), 5-14.

- Haseeb, M., Hussain, H., Slusarczyk, B., & Jermsittiparsert, K. (2019). Industry 4.0: A Solution towards Technology Challenges of Sustainable Business Performance. Social Sciences, 8(5), 184.

- Hinks, T. (2019). Artificial Intelligence and the UK Labour Market: Questions, methods and a call for a systematic approach to information gathering. International Journal of Machine Learning and Cybernetics, 10(5), 77-89.

- Howe, R.D., & Matsuoka, Y. (1999). Robotics for surgery. Annual Review of Biomedical Engineering, 1(1), 211-240.

- Hussain, M.S., Mosa, M.M., & Omran, A. (2017). The mediating impact of profitability on capital requirement and risk taking by Pakistani banks. Journal of Academic Research in Economics, 9(3), 433-443.

- Hussain, M.S., Mosa, M.M., & Omran, A. (2018). The impact of owners' behaviour towards risk taking by Pakistani Banks: Mediating role of profitability. Journal of Academic Research in Economics, 10(3), 455-465.

- Hussain, M.S., Musa, M.M., & Omran, A. (2019). The impact of regulatory capital on risk taking by Pakistani banks. SEISENSE Journal of Management, 2(2), 94-103.

- Hussain, M.S., Musa, M.M.B., & Omran, A.A. (2018). The impact of private ownership structure on risk taking by Pakistani banks: An Empirical Study. Pakistan Journal of Humanities and Social Sciences, 6(3), 325-337.

- Hussain, M.S., Ramzan, M., Ghauri, M.S.K., Akhtar, W., Naeem, W., & Ahmad, K. (2012). Challenges and failure of Implementation of Basel Accord II and reasons to adopt Basel III both in Islamic and conventional banks. International Journal of Business and Social Research, 2(4), 149-174.

- Jermsittiparsert, K., Sriyakul, T., & Rodoonsong, S. (2013). Power(lessness) of the state in the globalization era: Empirical proposals on determination of domestic paddy price in Thailand. Asian Social Science, 9(17), 218-225.

- Kaplan, A., & Haenlein, M. (2019). On the interpretations, illustrations, and implications of artificial intelligence. Business Horizons, 62(1), 15-25.

- Klumpp, M. (2018). Automation and artificial intelligence in business logistics systems: Human reactions and collaboration requirements. International Journal of Logistics Research and Applications, 21(3), 224-242.

- Lin, A., Abney, S., & Bekey, G. (2010). Robot ethics: Mapping the issues for a mechanized world. Artificial Intelligence, 178(9), 942.

- Moor, G. (2007). The nature, importance, and difficulty of machine ethics. IEEE Intelligent Systems, 22(5), 13-16.

- Nawaz, M.A., & Hassan, S. (2016). Investment and Tourism: Insights from the Literature. International Journal of Economics Perspectives, 10(4), 581-590.

- Panichayakorn, T., & Jermsittiparsert, K. (2019). Mobilizing organizational performance through robotic and artificial intelligence awareness in mediating role of supply chain agility. International Journal of Supply Chain Management, 8(5), 757-768.

- Pierce, Joshua, & Henry. (1996). Computer ethics: The role of personal, informal, and formal codes. Journal of Business Ethics, 15(3), 420-431.

- Powers, T. (2005). Prospects for a Kantian machine. IEEE Intelligent Systems, 22(5), 43-53.

- Ramos, C., Augusto, J.C., & Shapiro, D. (2008). Ambient intelligence—the next step for artificial intelligence. IEEE Intelligent Systems, 23(2), 15-18.

- Riaz, Z., Arif, A., Nisar, Q.A., Ali, S., & Sajjad, M. (2018). Does Perceived Organizational Support influence the Employees Emotional labor? Moderating & Mediating role of Emotional Intelligence. Pakistan Journal of Humanities and Social Sciences, 6(4), 526-543.

- Schneider, S., & Leyer, M. (2019). Me or information technology? Adoption of artificial intelligence in the delegation of personal strategic decisions. Managerial and Decision Economics, 40(3), 223-231.

- Soebandrija, K.E.N., Hutabarat, D.P., & Khair, F. (2018). Sustainable industrial systems within kernel density analysis of artificial intelligence and industry 4.0. Earth and Environmental Science, 12(4), 23-31.

- Soltani-Fesaghandis, G., & Pooya, A. (2018). Design of an artificial intelligence system for predicting success of new product development and selecting proper market-product strategy in the food industry. International Food and Agribusiness Management Review, 21(7), 847-864.

- Somjai, S., Jermsittiparsert, K., & Chankoson, T. (2020). Determining the initial and subsequent impact of artificial intelligence adoption on economy: A macroeconomic survey from ASEAN. Journal of Intelligent & Fuzzy Systems, 39(4), 5459-5474.

- Spector, P.E. (1987). Method variance as an artifact in self-reported affect and perceptions at work: myth or significant problem? Journal of Applied Psychology, 72(3), 438-444.

- Syam, N., & Sharma, A. (2018). Waiting for a sales renaissance in the fourth industrial revolution: Machine learning and artificial intelligence in sales research and practice. Industrial Marketing Management, 69(8), 135-146.

- Turkina, E. (2018). The importance of networking to entrepreneurship: Montreal's artificial intelligence cluster and its born-global firm Element AI. Taylor & Francis, 3(18), 401-428.

- Wallach, W., Allen, C., & Smit, I. (2008). Machine morality: bottom-up and top-down approaches for modelling human moral faculties. Industrial Marketing Management, 22(4), 565-582.

- Wallach, W., & Allen, C. (2006). A new field of inquiry. Artificial Intelligence. International Journal of Healthcare Management, 12(3), 218-225.

- Yampolskiy, R. (2013). Leakproofing the Singularity: Artificial Intelligence Confinement Problem. Journal of Consciousness Studies, 2(12), 217-223.

- Yoon, S.N., & Lee, D. (2019). Artificial intelligence and robots in healthcare: What are the success factors for technology-based service encounters? International Journal of Healthcare Management, 12(3), 218-225.

- Yoshikawa, T. (1990). Foundations of robotics: Analysis and control. International Journal of Healthcare Management, 23(8), 112-128.