Review Article: 2023 Vol: 27 Issue: 5

Student Satisfaction Towards Online Education: eEduQual Model Development and Validation

VV Devi Prasad Kotni, GITAM Deemed-to-be University

Citation Information: Prasad Kotni, V.V.D. (2023). Student satisfaction towards online education: eeduqual model development and validation. Academy of Marketing Studies Journal, 27(5), 1-12.

Abstract

COVID-19 has prompted educational institutions worldwide to investigate innovative techniques promptly. Most institutions have moved to an online approach using Zoom, Blackboard, Google Meet, Webex, and Microsoft Teams during this time. With today's technological breakthroughs, web content can be created in various ways. To make learning productive and successful, it is critical to consider the preferences and perceptions of learners while building online courses. Any endeavour to improve the efficacy of online learning must consider the users' perspectives. According to studies, students have positive and negative attitudes about online learning. According to several studies, the instructor's engagement with students significantly influences students' opinions of online learning. This research aims to propose, validate and test the eEduQual model for evaluating the quality of online teaching. The eEduQual model was proven to be statistically fit with five constructs and fifteen scale items. The constructs are Teaching (T), Learning (L), Technology (G), Teaching Aids (A), and Pedagogy (P).

Keywords

Online Teaching, E-Education, Covid-19, EFA, CFA, Model Testing, Model Validation.

Extended Abstract

Design/methodology/approach: The eEduQual model was developed and tested with primary data collected from 384 students in postgraduate programs. A set of 25 statements (scale items) was prepared from the literature review. Exploratory factor analysis (EFA) was first run on the students' satisfaction ratings for these items and extracted five factors. Confirmatory factor analysis (CFA) was then run on the five constructs, and convergent and discriminant validity were assessed to validate the model. The model was found to be statistically significant.

Purpose: This research aims to propose, validate, and test the eEduQual model for evaluating the quality of online education. The gap this research fills is the lack of a model for evaluating the quality of online teaching: it proposes the eEduQual (electronic education quality) model and validates it with primary data collected from higher education students who experienced online teaching during the Covid-19 lockdown.

Findings: The eEduQual model consists of five factors: Teaching (T), Learning (L), Technology (G), Teaching Aids (A), and Pedagogy (P). Each construct consists of three items that measure the quality of e-education on a five- or seven-point scale.

Research limitations/implications: The model was validated with postgraduate students only; it can also be tested with undergraduate students. The respondents come from technical and professional knowledge domains. The model can also be used for engineering, science, and arts students.

Practical implications: Online educators can use the eEduQual model to evaluate the quality of their online education programs. Online education firms such as Coursera can use this scale when collecting feedback from online learners. Trainers running online training programs, EDPs, and MDPs can use the scale to determine the quality of their online sessions.

Social implications: After Covid-19, universities and educational and training institutions all over the world started exploring opportunities to deliver their education and training programs online. Online education has been considered a good choice not only in higher education but also at the primary and secondary levels, so evaluating the quality of online education programs remains a challenge. The eEduQual scale proposed in this study can emerge as a solution.

Originality/value: This is one of the foremost studies conducted so far wherein a model has been designed and tested with primary data collected directly from the postgraduate students. This scale is an original contribution from the author.

Introduction

The COVID-19 outbreak has caused educational institutions around the globe to close, jeopardizing academic calendars. To keep academic activities going, most educational institutions have moved to online learning tools. However, issues concerning e-learning preparedness, design, and effectiveness remain unresolved, especially in developing countries such as India, where technical constraints such as device suitability and broadband availability pose a significant obstacle. Schools in China and a few other affected countries were closed in early February 2020 as the outbreak spread.

By mid-March, however, nearly 75 nations had implemented or announced the closure of educational institutions. As of March 10th, one out of every five students worldwide was out of school because of COVID-19 school and institution closures. According to UNESCO, by the end of April 2020, 186 nations had enforced national closures, affecting 73.8 percent of all enrolled pupils (UNESCO, 2020). Even if confinement and social isolation are the only ways to stop the spread of COVID-19, the closing of educational facilities has had a negative effect on many students.

Because of the indefinite closing of schools and colleges, educational institutions and students are experimenting with strategies to complete their required syllabi within the time frame set by the academic schedule. These constraints have certainly caused discomfort while also sparking new instances of educational innovation, including the use of digital inputs. Given the sluggish pace of change in academic institutions, which continue to rely on millennia-old lecture-based teaching methods, ingrained institutional prejudices, and outmoded classrooms, this is a silver lining on a dark cloud.

Nonetheless, COVID-19 has led educational institutions all over the globe to explore novel techniques as soon as possible. During this period, most institutions have shifted to an online strategy, utilizing Blackboard, Zoom, Google Meet, Webex, and Microsoft Teams.

With today's technological advances, online material can be produced in a variety of ways. When developing online classes, it is crucial to consider the preferences and views of learners in order to ensure effective and productive learning. A learner's choice is related to their readiness or willingness to engage in collaborative learning, as well as to the factors that affect online learning readiness. The findings from the related literature are summarized in the Review of Literature section.

This study fills a gap by proposing the eEduQual (electronic education quality) model to assess the quality of online education and validating the model with primary data gathered from higher education students who experienced online instruction during the Covid-19 lockdown. The purpose of this study is to propose, validate, and test the eEduQual model for assessing the quality of online education.

Review of Literature

Any effort to enhance the efficacy of online learning must consider the viewpoints of the users. According to research, students hold both favorable and unfavorable views of online learning. Several studies have found that the instructor's interaction with students has a substantial impact on students' perceptions of online learning.

Warner et al. (1998) pioneered the concept of online learning readiness in the Australian professional education and training industry. They defined online learning readiness in three ways: (1) students' preference for the method of delivery over face-to-face classroom instruction; (2) students' confidence in using electronic communication for learning, which includes competence and trust in the use of the Internet and computer-based communication; and (3) students' ability to engage in autonomous learning.

Several studies, including McVay (2000), refined the concept by creating a 13-item measure that looked at student behavior and attitude as predictors. Smith et al. (2003) then conducted an exploratory study to assess McVay's (2000) online readiness questionnaire and derived a two-factor structure, "Comfort with e-learning" and "Self-management of learning." Further research was later carried out to operationalize the concept of online learning readiness (Smith, 2005).

Self-directed learning (Lin and Hsieh, 2001), motivation for learning (Fairchild et al., 2005), learner control (Reeves, 1993), computer and internet self-efficacy (Hung et al., 2010), and online communication self-efficacy (Roper, 2007) were identified as factors influencing readiness for online learning by researchers.

After reviewing the previous studies on online teaching and online learning listed in Table 1, twenty-five items (attributes of online teaching) were chosen for this study to identify what makes online teaching high in quality. The corresponding statements are provided in Table 2.

| Table 1 Review of Literature – Attributes of Online Teaching | ||

| S.No | Author (s) | Online Teaching Attribute |

| 1 | Smith (2005) | readiness for online learning |

| 2 | Lin and Hsieh (2001) | self-directed learning |

| 3 | Fairchild et al. (2005) | motivation for learning |

| 4 | Reeves (1993) | Learner control |

| 5 | Hung et al. (2010) | computer and internet self-efficacy |

| 6 | Roper (2007) | online communication self-efficacy |

| 7 | Swan et al. (2000) | consistency in course design |

| 8 | Hay et al.(2004) | critical thinking ability and information processing |

| 9 | Hay et al. (2004) | rate of interactivity in the online setting |

| 10 | Kim et al. (2005) | the flexibility of online learning |

| 11 | Kim et al. (2005) | chances of engaging with teachers and peers in online learning settings |

| 12 | Kim et al. (2005) | social presence |

| 13 | Lim et al. (2007) | academic self-concept |

| 14 | Wagner et al. (2002) | competencies required to use the technology |

| 15 | Sun and Chen (2016) | well-structured course content |

| 16 | Sun and Chen (2016) | well-prepared instructors |

| 17 | Sun and Chen (2016) | advanced technologies |

| 18 | Gilbert (2015) | Feedback and clear instructions |

| 19 | Vonderwell (2003) | delay in responses |

| 20 | Petrides (2002) | scepticism of their peers’ supposed expertise |

| 21 | Lim et al (2007) | lack of a sense of community and/or feelings of isolation |

| 22 | Song et al.(2004) | problems in collaborating with the co-learners, technical problems |

| 23 | Muilenburg and Berge (2005) | issues related to instructor |

| 24 | Laine (2003) | higher student attrition rates |

| 25 | Serwatka (2003) | the need for greater discipline |

| Table 2 Prepared Statements Based on Attributes of Online Teaching | |

| S.No. | |

| 1 | Effective teaching takes place in online sessions like in offline sessions. |

| 2 | The faculty is accessible for clarifications after online session. |

| 3 | The faculty members are delivering e-sessions as interactive sessions. |

| 4 | The quality of teaching is increased in e-sessions. |

| 5 | Evaluation of learners (like assignments, projects etc) is effective during e-sessions. |

| 6 | The Online Teaching Aids (like PPTs, Whiteboard, Images etc) used by the faculty members are appropriate. |

| 7 | The Audio / Video content of the e-session is appropriate. |

| 8 | The faculty members are providing sufficient reading kit. |

| 9 | The teaching aids are able to enhance the learning experience. |

| 10 | The teaching aids are suitable for the content of the lesson. |

| 11 | Duration of e-sessions is adequate. |

| 12 | The pedagogy used in e-session is appropriate as per the lesson content. |

| 13 | E-Sessions are enabling discussion among the learners along with the teacher. |

| 14 | Different pedagogical methods like lecturing, group discussion are effective in e-sessions. |

| 15 | The online quizzes, online polls, attendance etc during e-session are appropriate. |

| 16 | The quality of the e-session video is appropriate. |

| 17 | The quality of the e-session audio is appropriate. |

| 18 | The e-Session details (like meeting-id, password, pin etc) are informed to the students well in advance. |

| 19 | The session recordings are delivered for those who missed the e-sessions. |

| 20 | Data Signal is always a challenge for attending e-sessions. |

| 21 | The learning outcomes are achieved after attending e-session. |

| 22 | After attending the e-session, adequate knowledge was delivered. |

| 23 | E-sessions are able to enhance the skills related to the courses. |

| 24 | E-sessions are able to develop right attitude required by the courses. |

| 25 | E-sessions are able to develop employability skills among the learners. |

Methods and Materials

The study is based on primary data collected from 384 students in the Indian city of Visakhapatnam who are pursuing higher education in various institutions, in the Master of Technology, Master of Business Administration, and Master of Computer Applications programs. The students were chosen through purposive sampling. The sample size of 384 students was determined from the Krejcie and Morgan (1970) table. A structured questionnaire was designed from the items collected through the review of literature and interviews with retail managers. The questionnaire covers various dimensions of online teaching through the 25 items shown in Table 2. The Cronbach's alpha reliability value of 0.75 is above the threshold value of 0.7. Both statistical tools, Exploratory Factor Analysis (EFA) and Confirmatory Factor Analysis (CFA), were used to validate the eEduQual model.
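The Krejcie and Morgan (1970) table is generated by a closed-form formula, so the figure of 384 can be reproduced with a short sketch. The population size below is a hypothetical value chosen for illustration (the study does not report one); the chi-square value of 3.841, the proportion of 0.5, and the 5% margin of error are the defaults commonly used with that table.

```python
def krejcie_morgan_sample_size(population: int,
                               chi_sq: float = 3.841,
                               proportion: float = 0.5,
                               margin: float = 0.05) -> int:
    """Sample size for a finite population, following Krejcie and Morgan (1970)."""
    spread = chi_sq * proportion * (1 - proportion)
    numerator = spread * population
    denominator = margin ** 2 * (population - 1) + spread
    return round(numerator / denominator)


# Hypothetical population of one million students (an assumption, not study data).
sample_size = krejcie_morgan_sample_size(1_000_000)
```

For very large populations the formula levels off at 384, which is why that number appears so often as a survey sample size.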

Results

Exploratory Factor Analysis (EFA)

Before factor analysis was performed on the eEduQual data, a reliability test (Cronbach's alpha) was run. The alpha value was found to be 0.750, above the recommended level of 0.7 (Bernardi, 1994). This indicates that the data were 75 percent reliable, with the remaining 25 percent possibly attributable to error, and that the data could be used for further analysis.
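As a minimal sketch of how Cronbach's alpha is computed, the function below applies the standard formula, alpha = k/(k-1) × (1 − sum of item variances / variance of total scores), to a small response matrix. The five responses are made-up illustration data, not the study's data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(rows[0])  # number of items
    item_variances = [variance([row[i] for row in rows]) for i in range(k)]
    total_variance = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from five respondents on three five-point items.
responses = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(responses)
```

Alpha rises when the items move together across respondents, which is why it is read as a measure of internal consistency.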

| Table 3 KMO and Bartlett's Test | | |

| Kaiser-Meyer-Olkin Measure of Sampling Adequacy | | .843 |

| Bartlett's Test of Sphericity | Approx. Chi-Square | 3751.098 |

| | df | 528 |

| | Sig. | .000 |

The KMO and Bartlett tests were then used to check whether the data on online teaching attributes were suitable for factor analysis. As shown in Table 3, the KMO measure of sampling adequacy was .843, above the suggested threshold of .5 (Hair et al., 1998), indicating that the analysis can proceed. The chi-square value in Bartlett's Test of Sphericity was 3751.098 at df = 528, significant at the .000 level, indicating that factor analysis may be applied to the data on online teaching attributes.
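The Bartlett statistic reported in Table 3 follows a standard formula: chi-square = −(n − 1 − (2p + 5)/6) × ln|R|, with p(p − 1)/2 degrees of freedom, where R is the p × p correlation matrix and n is the sample size. The sketch below implements that formula with the standard library only; the function names are illustrative, and the study's correlation matrix is not available, so no study value is recomputed here.

```python
import math


def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def bartlett_sphericity(corr, n_obs):
    """Return (chi-square, degrees of freedom) for a correlation matrix."""
    p = len(corr)
    chi_sq = -(n_obs - 1 - (2 * p + 5) / 6) * math.log(determinant(corr))
    df = p * (p - 1) // 2
    return chi_sq, df
```

With p = 33 variables the degrees-of-freedom formula gives 528, the df value shown in Table 3.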

Factor analysis was executed on the data on online teaching attributes with principal component analysis as the extraction method and varimax rotation. After factor analysis, the 25 variables were grouped into five factors, as presented in Table 4. Only factors with an eigenvalue greater than 1 were extracted (the level recommended by Hair et al., 1998), and five factors were formed. The values reported below are the rotation sums of squared loadings from Table 4. The first factor, “Teaching (T)”, has an eigenvalue of 4.234 and explains 12.830% of the variance. The second factor, “Learning (L)”, has an eigenvalue of 3.933 and explains 11.918% of the variance. The third factor, “Technology (G)”, has an eigenvalue of 3.288 and explains 9.962% of the variance. The fourth factor, “Teaching Aids (A)”, has an eigenvalue of 2.700 and explains 8.183% of the variance. The fifth factor, “Pedagogy (P)”, has an eigenvalue of 2.173 and explains 6.585% of the variance. The total cumulative variance explained by the five factors is 49.478%. The rotated component matrix, with the factors and their associated items, is presented in Table 5.

| Table 4 Total Variance Explained | |||||||||

| Component | Initial Eigenvalues | Extraction Sums of Squared Loadings | Rotation Sums of Squared Loadings | ||||||

| Total | % of Variance | Cumulative % | Total | % of Variance | Cumulative % | Total | % of Variance | Cumulative % | |

| 1 | 7.574 | 22.952 | 22.952 | 7.574 | 22.952 | 22.952 | 4.234 | 12.830 | 12.830 |

| 2 | 3.295 | 9.983 | 32.935 | 3.295 | 9.983 | 32.935 | 3.933 | 11.918 | 24.747 |

| 3 | 2.231 | 6.761 | 39.697 | 2.231 | 6.761 | 39.697 | 3.288 | 9.962 | 34.710 |

| 4 | 1.652 | 5.008 | 44.704 | 1.652 | 5.008 | 44.704 | 2.700 | 8.183 | 42.893 |

| 5 | 1.575 | 4.774 | 49.478 | 1.575 | 4.774 | 49.478 | 2.173 | 6.585 | 49.478 |

| 6 | 1.322 | 4.006 | 53.483 | ||||||

| 7 | 1.206 | 3.655 | 57.138 | ||||||

| 8 | 1.049 | 3.178 | 60.315 | ||||||

| 9 | .998 | 3.025 | 63.340 | ||||||

| 10 | .921 | 2.792 | 66.132 | ||||||

| 11 | .843 | 2.556 | 68.688 | ||||||

| 12 | .806 | 2.443 | 71.131 | ||||||

| 13 | .758 | 2.297 | 73.428 | ||||||

| 14 | .707 | 2.142 | 75.570 | ||||||

| 15 | .665 | 2.015 | 77.585 | ||||||

| 16 | .648 | 1.962 | 79.547 | ||||||

| 17 | .606 | 1.835 | 81.382 | ||||||

| 18 | .584 | 1.771 | 83.153 | ||||||

| 19 | .556 | 1.684 | 84.837 | ||||||

| 20 | .503 | 1.525 | 86.362 | ||||||

| 21 | .484 | 1.468 | 87.830 | ||||||

| 22 | .467 | 1.416 | 89.245 | ||||||

| 23 | .440 | 1.334 | 90.580 | ||||||

| 24 | .418 | 1.265 | 91.845 | ||||||

| 25 | .390 | 1.182 | 93.027 | ||||||

| 26 | .372 | 1.127 | 94.153 | ||||||

| 27 | .353 | 1.070 | 95.223 | ||||||

| 28 | .321 | .972 | 96.195 | ||||||

| 29 | .306 | .927 | 97.122 | ||||||

| 30 | .298 | .904 | 98.026 | ||||||

| 31 | .264 | .799 | 98.824 | ||||||

| 32 | .210 | .635 | 99.460 | ||||||

| 33 | .178 | .540 | 100.00 | ||||||
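The percentages in Table 4 can be checked with simple arithmetic: in a principal component analysis of standardized variables, each component's share of variance is its sum of squared loadings divided by the number of components. The sketch below uses the rotation sums from Table 4 and the 33 components the table lists; the variable and function names are illustrative.

```python
# Rotation sums of squared loadings for the five retained factors (Table 4).
rotation_sums = {"T": 4.234, "L": 3.933, "G": 3.288, "A": 2.700, "P": 2.173}

# Table 4 lists 33 components, so total variance is 33 units.
N_COMPONENTS = 33


def variance_explained(sums, n_components):
    """Return per-factor percentage of variance and the cumulative percentage."""
    percents = {name: total / n_components * 100 for name, total in sums.items()}
    return percents, sum(percents.values())


percents, cumulative = variance_explained(rotation_sums, N_COMPONENTS)
```

The first factor works out to about 12.83% and the five factors together to about 49.48%, in line with the rotated columns of Table 4.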

| Table 5 Rotated Component Matrix^a | |||||

| Items | Teaching (T) | Learning (L) | Technology (G) | Teaching Aids (A) | Pedagogy (P) |

| T1 | .795 | | | | |

| T2 | .780 | | | | |

| T3 | .704 | | | | |

| L1 | | .764 | | | |

| L2 | | .733 | | | |

| L3 | | .679 | | | |

| G1 | | | .756 | | |

| G2 | | | .699 | | |

| G3 | | | .671 | | |

| A1 | | | | .810 | |

| A2 | | | | .796 | |

| A3 | | | | .645 | |

| P1 | | | | | .753 |

| P2 | | | | | .629 |

| P3 | | | | | .608 |

Rotation Method: Varimax with Kaiser Normalization.

a. Rotation converged in 6 iterations.

Confirmatory Factor Analysis (CFA)

Reliability and Validity Analyses of Measures

To examine the internal consistency of the items shown in Table 6, the reliability of the scale was tested using Cronbach's alpha for each dimension and for the scale overall. Only items with Cronbach's alpha coefficients greater than 0.7 were retained in the study. The Cronbach's alpha coefficients of the five constructs ranged from 0.82 to 0.921. Finally, 15 items were retained for the analysis.

| Table 6 eEduQual Scale Items | ||

| Item No. | Teaching (T) | Item Code |

| 1 | The faculty members are delivering e-sessions as interactive sessions. | T1 |

| 2 | The quality of teaching is increased in e-sessions. | T2 |

| 3 | Evaluation of learners (like assignments, projects etc) is effective during e-sessions. | T3 |

| Item No. | Learning (L) | Item Code |

| 1 | The learning outcomes are achieved after attending e-session. | L1 |

| 2 | After attending the e-session, adequate knowledge was delivered. | L2 |

| 3 | E-sessions are able to enhance the skills related to the courses. | L3 |

| Item No. | Technology (G) | Item Code |

| 1 | The quality of the e-session video is appropriate. | G1 |

| 2 | The quality of the e-session audio is appropriate. | G2 |

| 3 | The e-Session details (like meeting-id, password, pin etc) are informed to the students well in advance. | G3 |

| Item No. | Teaching Aids (A) | Item Code |

| 1 | The Online Teaching Aids (like PPTs, Whiteboard, Images etc) used by the faculty members are appropriate. | A1 |

| 2 | The Audio / Video content of the e-session is appropriate. | A2 |

| 3 | The faculty members are providing sufficient reading kit. | A3 |

| Item No. | Pedagogy (P) | Item Code |

| 1 | The pedagogy used in e-session is appropriate as per the lesson content. | P1 |

| 2 | E-Sessions are enabling discussion among the learners along with the teacher. | P2 |

| 3 | Different pedagogical methods like lecturing, group discussion are effective in e-sessions. | P3 |

Model Validation

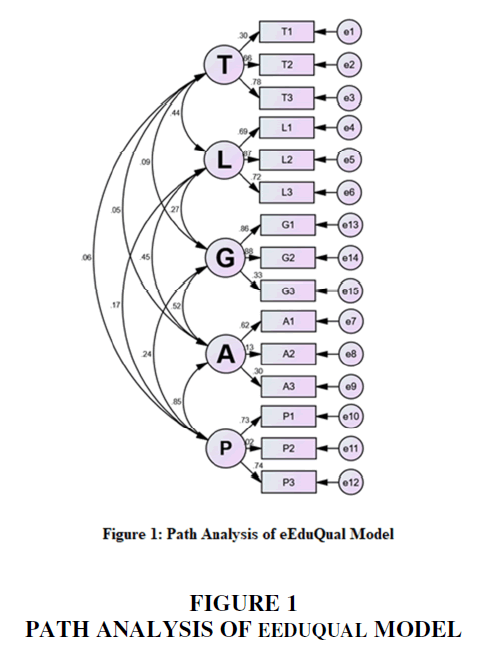

Confirmatory factor analysis was run on the eEduQual model in IBM AMOS to examine the convergent and discriminant validity of the constructs. Table 7 shows the CFA results, and Figure 1 shows the path diagram of the eEduQual model. The model met the minimum statistical requirements to qualify as statistically fit, and the fit indices were found to be within the acceptance limits suggested by Hair et al. (2010). The absolute fit measures of the measurement model were: Chi-Square = 319.60 at df = 80 and p = 0.000, Chi-Square/df = 3.989, Goodness of Fit Index (GFI) = .879, Root Mean Square Error of Approximation (RMSEA) = .195, and Adjusted Goodness of Fit Index (AGFI) = .879. The incremental fit measures, also found to be within the limits of acceptance, were: Normed Fit Index (NFI) = .755, Incremental Fit Index (IFI) = .805, Tucker–Lewis Index (TLI) = .738, and Comparative Fit Index (CFI) = .800. The standardized factor loadings of the model were significant; the lowest loading was 0.30 and the highest was 0.88. It can therefore be concluded that the eEduQual model fits the data collected on online teaching attributes.

| Table 7 eEduQual Model | |||||||

| Covariances | Estimate | S.E. | C.R. | P | Correlation | ||

| Teaching (T) | <--> | Learning (L) | .337 | .099 | 3.402 | *** | .443 |

| Teaching (T) | <--> | Technology (G) | .025 | .057 | .430 | *** | .045 |

| Teaching (T) | <--> | Pedagogy (P) | .027 | .041 | .665 | *** | .055 |

| Teaching (T) | <--> | Teaching Aids (A) | .082 | .067 | 1.216 | *** | .094 |

| Learning (L) | <--> | Technology (G) | .787 | .185 | 4.264 | *** | .450 |

| Learning (L) | <--> | Pedagogy (P) | .260 | .122 | 2.131 | *** | .166 |

| Learning (L) | <--> | Teaching Aids (A) | .771 | .207 | 3.727 | *** | .275 |

| Technology (G) | <--> | Pedagogy (P) | .956 | .151 | 6.343 | *** | .849 |

| Technology (G) | <--> | Teaching Aids (A) | 1.041 | .211 | 4.927 | *** | .516 |

| Pedagogy (P) | <--> | Teaching Aids (A) | .436 | .142 | 3.078 | *** | .242 |

According to the accepted criterion, the Average Variance Extracted (AVE) of each construct should be greater than its squared correlation with every other construct. Because the square root of each AVE is higher than the inter-construct correlation coefficients, discriminant validity is established (Hair et al., 2006). Furthermore, discriminant validity exists when each measurement indicator has a low association with all variables except the one to which it is theoretically related (Aggarwal et al., 2020). The results show that the measurement model achieved good discriminant validity along with convergent validity.
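The AVE comparison described above can be sketched in a few lines. AVE is the mean of the squared standardized loadings of a construct, and the check compares the square root of each construct's AVE with the correlation between the two constructs. The article does not list the CFA loadings per item, so this illustration uses the Table 5 rotated loadings for Teaching and Learning as stand-in values (an assumption) together with the Teaching–Learning correlation of .443 from Table 7.

```python
import math


def average_variance_extracted(loadings):
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(value ** 2 for value in loadings) / len(loadings)


def discriminant_validity_holds(loadings_a, loadings_b, correlation):
    """True if sqrt(AVE) of both constructs exceeds their correlation."""
    root_a = math.sqrt(average_variance_extracted(loadings_a))
    root_b = math.sqrt(average_variance_extracted(loadings_b))
    return min(root_a, root_b) > abs(correlation)


# Stand-in loadings taken from Table 5 (EFA), not the CFA estimates.
teaching = [0.795, 0.780, 0.704]
learning = [0.764, 0.733, 0.679]

ave_teaching = average_variance_extracted(teaching)
pair_ok = discriminant_validity_holds(teaching, learning, 0.443)
```

The same function can be applied to every pair of constructs once the standardized CFA loadings are available.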

As shown in Table 7, the covariances among the dimensions of the eEduQual model were found to be significant at the .000 level.

Between Teaching (T) and Learning (L), the covariance estimate was found to be .337, with standard error .099, critical ratio (t-value) 3.402, and correlation coefficient .443.

Between Teaching (T) and Technology (G), the covariance estimate was found to be .025, with standard error .057, critical ratio (t-value) .430, and correlation coefficient .045.

Between Teaching (T) and Pedagogy (P), the covariance estimate was found to be .027, with standard error .041, critical ratio (t-value) .665, and correlation coefficient .055.

Between Teaching (T) and Teaching Aids (A), the covariance estimate was found to be .082, with standard error .067, critical ratio (t-value) 1.216, and correlation coefficient .094.

Between Learning (L) and Technology (G), the covariance estimate was found to be .787, with standard error .185, critical ratio (t-value) 4.264, and correlation coefficient .450.

Between Learning (L) and Pedagogy (P), the covariance estimate was found to be .260, with standard error .122, critical ratio (t-value) 2.131, and correlation coefficient .166.

Between Learning (L) and Teaching Aids (A), the covariance estimate was found to be .771, with standard error .207, critical ratio (t-value) 3.727, and correlation coefficient .275.

Between Technology (G) and Pedagogy (P), the covariance estimate was found to be .956, with standard error .151, critical ratio (t-value) 6.343, and correlation coefficient .849.

Between Technology (G) and Teaching Aids (A), the covariance estimate was found to be 1.041, with standard error .211, critical ratio (t-value) 4.927, and correlation coefficient .516.

Between Pedagogy (P) and Teaching Aids (A), the covariance estimate was found to be .436, with standard error .142, critical ratio (t-value) 3.078, and correlation coefficient .242.
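The critical ratio in Table 7 is the covariance estimate divided by its standard error. The short sketch below recomputes it from the estimates and standard errors in the table; because those inputs are rounded to three decimals, the results agree with the reported C.R. column only approximately.

```python
# (estimate, standard error) for each construct pair, copied from Table 7.
covariances = {
    ("T", "L"): (0.337, 0.099),
    ("T", "G"): (0.025, 0.057),
    ("T", "P"): (0.027, 0.041),
    ("T", "A"): (0.082, 0.067),
    ("L", "G"): (0.787, 0.185),
    ("L", "P"): (0.260, 0.122),
    ("L", "A"): (0.771, 0.207),
    ("G", "P"): (0.956, 0.151),
    ("G", "A"): (1.041, 0.211),
    ("P", "A"): (0.436, 0.142),
}


def critical_ratio(estimate, standard_error):
    """Critical ratio (t-value): estimate divided by its standard error."""
    return estimate / standard_error


critical_ratios = {
    pair: critical_ratio(estimate, se)
    for pair, (estimate, se) in covariances.items()
}
```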

Overall, it can be concluded that the eEduQual model is statistically fit. The developed scale is presented in Annexure Table 1.

Implications

Online educators can use the eEduQual model to evaluate the quality of their online education programs. Online education firms such as Coursera can use this scale when collecting feedback from online learners. Trainers running online training programs, EDPs, and MDPs can use the scale to determine the quality of their online sessions. After Covid-19, universities and educational and training institutions all over the world started exploring opportunities to deliver their education and training programs online. Online education has been considered a good choice not only in higher education but also at the primary and secondary levels, so evaluating the quality of online education programs remains a challenge. The eEduQual scale proposed in this study can emerge as a solution.

Conclusion

The major finding of this study is the construction of the eEduQual model and its testing and validation with primary data collected from postgraduate students who attended online classes during the Covid-19 period. The eEduQual model consists of five constructs, each consisting of three scale items that determine the quality of the online teaching-learning process. The five constructs are Teaching (T), Learning (L), Technology (G), Teaching Aids (A), and Pedagogy (P).

The model determines online education quality through students' perceptions of teaching quality (interactive sessions, teaching quality, and evaluation), learning quality (learning outcomes, knowledge, and skill enhancement), quality of technology (audio, video, and login details), quality of teaching aids (PPTs, audio/video content, and reading materials), and quality of pedagogy (pedagogy appropriateness, peer discussion, and pedagogical tools).

Limitations and Scope for Further Research

The model was validated with postgraduate students only; it can also be tested with undergraduate students. The respondents come from technical and professional knowledge domains. The model can also be used for engineering, science, and arts students. The model concentrates on only five dimensions (constructs) of online teaching, namely teaching, learning, technology, teaching aids, and pedagogy, but in the real world there may be more dimensions. The study was conducted only in higher education, but the same study can be conducted in other sectors such as secondary and primary education. The study considered only three programs (MTech, MBA, and MCA), but further studies can cover other programs such as BTech, BBA, BCA, and BA. The study can also be extended to other disciplines such as medical sciences, arts, law, and pharmacy.

Annexure - 1

| Annexure Table 1 eEduQual Scale | ||||||

| Item No. | Teaching (T) | Strongly Agree [5] | Agree [4] | Slightly Agree [3] | Disagree [2] | Strongly Disagree [1] |

| 1 | The faculty members are delivering e-sessions as interactive sessions. | |||||

| 2 | The quality of teaching is increased in e-sessions. | |||||

| 3 | Evaluation of learners (like assignments, projects etc) is effective during e-sessions. | |||||

| Item No. | Learning (L) | |||||

| 1 | The learning outcomes are achieved after attending e-session. | |||||

| 2 | After attending the e-session, adequate knowledge was delivered. | |||||

| 3 | E-sessions are able to enhance the skills related to the courses. | |||||

| Item No. | Technology (G) | |||||

| 1 | The quality of the e-session video is appropriate. | |||||

| 2 | The quality of the e-session audio is appropriate. | |||||

| 3 | The e-Session details (like meeting-id, password, pin etc) are informed to the students well in advance. | |||||

| Item No. | Teaching Aids (A) | |||||

| 1 | The Online Teaching Aids (like PPTs, Whiteboard, Images etc) used by the faculty members are appropriate. | |||||

| 2 | The Audio / Video content of the e-session is appropriate. | |||||

| 3 | The faculty members are providing sufficient reading kit. | |||||

| Item No. | Pedagogy (P) | |||||

| 1 | The pedagogy used in e-session is appropriate as per the lesson content. | |||||

| 2 | E-Sessions are enabling discussion among the learners along with the teacher. | |||||

| 3 | Different pedagogical methods like lecturing, group discussion are effective in e-sessions. | |||||
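A minimal sketch of how responses to the scale above might be scored follows. The article does not prescribe an aggregation rule, so averaging the three items of each construct, and then averaging the five construct scores into an overall score, is an assumption made here for illustration; the item codes follow Table 6.

```python
from statistics import mean

# Item codes per construct, as listed in Table 6.
CONSTRUCT_ITEMS = {
    "Teaching": ["T1", "T2", "T3"],
    "Learning": ["L1", "L2", "L3"],
    "Technology": ["G1", "G2", "G3"],
    "Teaching Aids": ["A1", "A2", "A3"],
    "Pedagogy": ["P1", "P2", "P3"],
}


def score_response(response):
    """Average the 1-5 ratings per construct, plus an overall average.

    The averaging rule is an illustrative assumption, not taken from the article.
    """
    for code, rating in response.items():
        if not 1 <= rating <= 5:
            raise ValueError(f"rating for {code} must be between 1 and 5")
    scores = {
        construct: mean(response[code] for code in codes)
        for construct, codes in CONSTRUCT_ITEMS.items()
    }
    scores["Overall"] = mean(scores.values())
    return scores


# Hypothetical single respondent.
example = {
    "T1": 5, "T2": 4, "T3": 3,
    "L1": 4, "L2": 4, "L3": 4,
    "G1": 2, "G2": 3, "G3": 4,
    "A1": 5, "A2": 5, "A3": 5,
    "P1": 3, "P2": 3, "P3": 3,
}
example_scores = score_response(example)
```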

References

Aggarwal, A., Chand, P.K., Jhamb, D., & Mittal, A. (2020). Leader–member exchange, work engagement, and psychological withdrawal behavior: the mediating role of psychological empowerment. Frontiers in psychology, 11, 423.


Bernardi, R.A. (1994). Validating research results when Cronbach's alpha is below .70: A methodological procedure. Educational and Psychological Measurement, 54(3), 766-775.


Fairchild, A.J., Horst, S.J., Finney, S.J., & Barron, K.E. (2005). Evaluating existing and new validity evidence for the Academic Motivation Scale. Contemporary Educational Psychology, 30(3), 331-358.


Gilbert, B. (2015). Online learning revealing the benefits and challenges.

Hair, J.F., Anderson, R.E., Tatham, R.L., & Black, W.C. (1998). Multivariate data analysis (5th ed.). Englewood Cliffs, New Jersey, USA: Prentice Hall.

Hair, J.F., Black, W.C., Babin, B.J., Anderson, R.E., & Tatham, R.L. (2006). Multivariate data analysis (6th ed.).

Hay, A., Hodgkinson, M., Peltier, J.W., & Drago, W.A. (2004). Interaction and virtual learning. Strategic change, 13(4), 193.


Hung, M.L., Chou, C., Chen, C.H., & Own, Z.Y. (2010). Learner readiness for online learning: Scale development and student perceptions. Computers & Education, 55(3), 1080-1090.


Kim, K.J., Liu, S., & Bonk, C.J. (2005). Online MBA students' perceptions of online learning: Benefits, challenges, and suggestions. The Internet and Higher Education, 8(4), 335-344.


Krejcie, R.V., & Morgan, D.W. (1970). Determining sample size for research activities. Educational and psychological measurement, 30(3), 607-610.


Laine, L. (2003). Is E-Learning E-ffective for IT Training? T+D, 57(6), 55-60.

Lim, D.H., Morris, M.L., & Kupritz, V.W. (2007). Online vs. blended learning: Differences in instructional outcomes and learner satisfaction. Journal of Asynchronous Learning Networks, 11(2), 27-42.


Lin, B., & Hsieh, C.T. (2001). Web-based teaching and learner control: A research review. Computers & Education, 37(3-4), 377-386.


McVay, M. (2000). How to be a successful distance learning student: Learning on the Internet.

McVay, M. (2000). Developing a web-based distance student orientation to enhance student success in an online bachelor’s degree completion program. Unpublished practicum report presented to the Ed. D. Program, Nova Southeastern University, Florida.

Muilenburg, L.Y., & Berge, Z.L. (2005). Student barriers to online learning: A factor analytic study. Distance education, 26(1), 29-48.


Petrides, L. A. (2002). Web-based technologies for distributed (or distance) learning: Creating learning-centered educational experiences in the higher education classroom. International journal of instructional media, 29(1), 69.

Reeves, T.C. (1993). Pseudoscience in computer-based instruction: the case of learner control research. Journal of computer-based instruction, 20(2), 39-46.

Roper, A. R. (2007). How students develop online learning skills. Educause Quarterly, 30(1), 62.

Serwatka, J.A. (2003). Assessment in on-line CIS courses. Journal of Computer Information Systems, 44(1), 16-20.


Smith, P.J. (2005). Learning preferences and readiness for online learning. Educational psychology, 25(1), 3-12.


Smith, P.J., Murphy, K.L., & Mahoney, S.E. (2003). Towards identifying factors underlying readiness for online learning: An exploratory study. Distance education, 24(1), 57-67.


Song, L., Singleton, E.S., Hill, J. R., & Koh, M.H. (2004). Improving online learning: Student perceptions of useful and challenging characteristics. The internet and higher education, 7(1), 59-70.


Sun, A., & Chen, X. (2016). Online education and its effective practice: A research review. Journal of Information Technology Education, 15.

Swan, K., Shea, P., Fredericksen, E., Pickett, A., Pelz, W., & Maher, G. (2000). Building knowledge building communities: Consistency, contact and communication in the virtual classroom. Journal of Educational Computing Research, 23(4), 359-383.


Vonderwell, S. (2003). An examination of asynchronous communication experiences and perspectives of students in an online course: A case study. The Internet and higher education, 6(1), 77-90.


Warner, D., Christie, G., & Choy, S. (1998). Readiness of VET clients for flexible delivery including on-line learning. Brisbane: Australian National Training Authority.

Wagner, R., Werner, J., & Schramm, R. (2002, August). An evaluation of student satisfaction with distance learning courses. In Annual Conference on Distance Learning, University of Wisconsin, Whitewater, WI.

Received: 21-Mar-2023, Manuscript No. AMSJ-23-13405; Editor assigned: 22-Mar-2023, PreQC No. AMSJ-23-13405(PQ); Reviewed: 08-Apr-2023, QC No. AMSJ-23-13405; Revised: 20-May-2023, Manuscript No. AMSJ-23-13405(R); Published: 15-Jul-2023